Viability of Optical Coherence Tomography for Iris Presentation Attack Detection

Auto-TLDR; Optical Coherence Tomography Imaging for Iris Presentation Attack Detection

Similar papers

Generalized Iris Presentation Attack Detection Algorithm under Cross-Database Settings

Mehak Gupta, Vishal Singh, Akshay Agarwal, Mayank Vatsa, Richa Singh

Auto-TLDR; MVNet: A Deep Learning-based PAD Network for Iris Recognition against Presentation Attacks

MixNet for Generalized Face Presentation Attack Detection

Nilay Sanghvi, Sushant Singh, Akshay Agarwal, Mayank Vatsa, Richa Singh

Auto-TLDR; MixNet: A Deep Learning-based Network for Detection of Presentation Attacks in Cross-Database and Unseen Setting

Local Attention and Global Representation Collaborating for Fine-Grained Classification

He Zhang, Yunming Bai, Hui Zhang, Jing Liu, Xingguang Li, Zhaofeng He

Auto-TLDR; Weighted Region Network for Cosmetic Contact Lenses Detection

One-Shot Representational Learning for Joint Biometric and Device Authentication

Auto-TLDR; Joint Biometric and Device Recognition from a Single Biometric Image

Face Anti-Spoofing Using Spatial Pyramid Pooling

Lei Shi, Zhuo Zhou, Zhenhua Guo

Auto-TLDR; Spatial Pyramid Pooling for Face Anti-Spoofing

A Cross Domain Multi-Modal Dataset for Robust Face Anti-Spoofing

Qiaobin Ji, Shugong Xu, Xudong Chen, Shan Cao, Shunqing Zhang

Auto-TLDR; Cross domain multi-modal FAS dataset GREAT-FASD and several evaluation protocols for academic community

Detection of Makeup Presentation Attacks Based on Deep Face Representations

Christian Rathgeb, Pawel Drozdowski, Christoph Busch

Auto-TLDR; An Attack Detection Scheme for Face Recognition Using Makeup Presentation Attacks

How Unique Is a Face: An Investigative Study

Michal Balazia, S L Happy, Francois Bremond, Antitza Dantcheva

Auto-TLDR; Uniqueness of Face Recognition: Exploring the Impact of Factors such as image resolution, feature representation, database size, age and gender

Are Spoofs from Latent Fingerprints a Real Threat for the Best State-Of-Art Liveness Detectors?

Roberto Casula, Giulia Orrù, Daniele Angioni, Xiaoyi Feng, Gian Luca Marcialis, Fabio Roli

Auto-TLDR; ScreenSpoof: Attacks using latent fingerprints against state-of-art fingerprint liveness detectors and verification systems

Face Anti-Spoofing Based on Dynamic Color Texture Analysis Using Local Directional Number Pattern

Junwei Zhou, Ke Shu, Peng Liu, Jianwen Xiang, Shengwu Xiong

Auto-TLDR; LDN-TOP Representation followed by ProCRC Classification for Face Anti-Spoofing

Disentangled Representation Based Face Anti-Spoofing

Zhao Liu, Zunlei Feng, Yong Li, Zeyu Zou, Rong Zhang, Mingli Song, Jianping Shen

Auto-TLDR; Face Anti-Spoofing using Motion Information and Disentangled Framework

Fine-Tuning Convolutional Neural Networks: A Comprehensive Guide and Benchmark Analysis for Glaucoma Screening

Amed Mvoulana, Rostom Kachouri, Mohamed Akil

Auto-TLDR; Fine-tuning Convolutional Neural Networks for Glaucoma Screening

Hyperspectral Imaging for Analysis and Classification of Plastic Waste

Jakub Kraśniewski, Łukasz Dąbała, Lewandowski Marcin

Auto-TLDR; A Hyperspectral Camera for Material Classification

Finger Vein Recognition and Intra-Subject Similarity Evaluation of Finger Veins Using the CNN Triplet Loss

Georg Wimmer, Bernhard Prommegger, Andreas Uhl

Auto-TLDR; Finger vein recognition using CNNs and hard triplet online selection

Video Face Manipulation Detection through Ensemble of CNNs

Nicolo Bonettini, Edoardo Daniele Cannas, Sara Mandelli, Luca Bondi, Paolo Bestagini, Stefano Tubaro

Auto-TLDR; Face Manipulation Detection in Video Sequences Using Convolutional Neural Networks

ResMax: Detecting Voice Spoofing Attacks with Residual Network and Max Feature Map

Il-Youp Kwak, Sungsu Kwag, Junhee Lee, Jun Ho Huh, Choong-Hoon Lee, Youngbae Jeon, Jeonghwan Hwang, Ji Won Yoon

Auto-TLDR; ASVspoof 2019: A Lightweight Automatic Speaker Verification Spoofing and Countermeasures System

Exemplar Guided Cross-Spectral Face Hallucination Via Mutual Information Disentanglement

Haoxue Wu, Huaibo Huang, Aijing Yu, Jie Cao, Zhen Lei, Ran He

Auto-TLDR; Exemplar Guided Cross-Spectral Face Hallucination with Structural Representation Learning

Exploring Seismocardiogram Biometrics with Wavelet Transform

Po-Ya Hsu, Po-Han Hsu, Hsin-Li Liu

Auto-TLDR; Seismocardiogram Biometric Matching Using Wavelet Transform and Deep Learning Models

Detection and Correspondence Matching of Corneal Reflections for Eye Tracking Using Deep Learning

Soumil Chugh, Braiden Brousseau, Jonathan Rose, Moshe Eizenman

Auto-TLDR; A Fully Convolutional Neural Network for Corneal Reflection Detection and Matching in Extended Reality Eye Tracking Systems

Abstract Slides Poster Similar

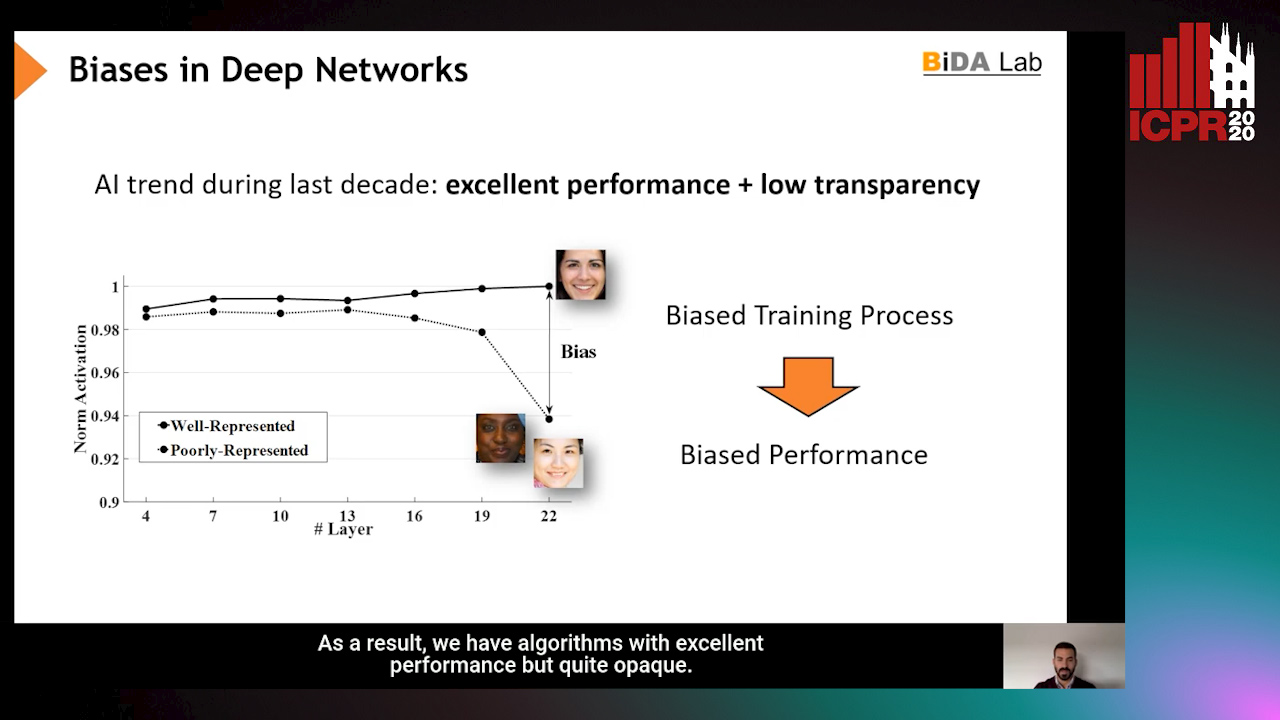

InsideBias: Measuring Bias in Deep Networks and Application to Face Gender Biometrics

Ignacio Serna, Alejandro Peña Almansa, Aythami Morales, Julian Fierrez

Auto-TLDR; InsideBias: Detecting Bias in Deep Neural Networks from Face Images

A Versatile Crack Inspection Portable System Based on Classifier Ensemble and Controlled Illumination

Milind Gajanan Padalkar, Carlos Beltran-Gonzalez, Matteo Bustreo, Alessio Del Bue, Vittorio Murino

Auto-TLDR; Lighting Conditions for Crack Detection in Ceramic Tile

Cross-spectrum Face Recognition Using Subspace Projection Hashing

Hanrui Wang, Xingbo Dong, Jin Zhe, Jean-Luc Dugelay, Massimo Tistarelli

Auto-TLDR; Subspace Projection Hashing for Cross-Spectrum Face Recognition

Deep Fusion of RGB and NIR Paired Images Using Convolutional Neural Networks

Auto-TLDR; Deep Fusion of RGB and NIR paired images in low light condition using convolutional neural networks

Two-Level Attention-Based Fusion Learning for RGB-D Face Recognition

Hardik Uppal, Alireza Sepas-Moghaddam, Michael Greenspan, Ali Etemad

Auto-TLDR; Fused RGB-D Facial Recognition using Attention-Aware Feature Fusion

Near-Infrared Depth-Independent Image Dehazing using Haar Wavelets

Sumit Laha, Ankit Sharma, Shengnan Hu, Hassan Foroosh

Auto-TLDR; A fusion algorithm for haze removal using Haar wavelets

Documents Counterfeit Detection through a Deep Learning Approach

Darwin Danilo Saire Pilco, Salvatore Tabbone

Auto-TLDR; End-to-End Learning for Counterfeit Documents Detection using Deep Neural Network

Dynamically Mitigating Data Discrepancy with Balanced Focal Loss for Replay Attack Detection

Yongqiang Dou, Haocheng Yang, Maolin Yang, Yanyan Xu, Dengfeng Ke

Auto-TLDR; Anti-Spoofing with Balanced Focal Loss Function and Combination Features

Classifying Eye-Tracking Data Using Saliency Maps

Shafin Rahman, Sejuti Rahman, Omar Shahid, Md. Tahmeed Abdullah, Jubair Ahmed Sourov

Auto-TLDR; Saliency-based Feature Extraction for Automatic Classification of Eye-tracking Data

Can You Really Trust the Sensor's PRNU? How Image Content Might Impact the Finger Vein Sensor Identification Performance

Dominik Söllinger, Luca Debiasi, Andreas Uhl

Auto-TLDR; Finger vein imagery can cause the PRNU estimate to be biased by image content

Real-Time Drone Detection and Tracking with Visible, Thermal and Acoustic Sensors

Fredrik Svanström, Cristofer Englund, Fernando Alonso-Fernandez

Auto-TLDR; Automatic multi-sensor drone detection using sensor fusion

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

Attack-Agnostic Adversarial Detection on Medical Data Using Explainable Machine Learning

Matthew Watson, Noura Al Moubayed

Auto-TLDR; Explainability-based Detection of Adversarial Samples on EHR and Chest X-Ray Data

Real Time Fencing Move Classification and Detection at Touch Time During a Fencing Match

Cem Ekin Sunal, Chris G. Willcocks, Boguslaw Obara

Auto-TLDR; Fencing Body Move Classification and Detection Using Deep Learning

A Flatter Loss for Bias Mitigation in Cross-Dataset Facial Age Estimation

Ali Akbari, Muhammad Awais, Zhenhua Feng, Ammarah Farooq, Josef Kittler

Auto-TLDR; Cross-dataset Age Estimation for Neural Network Training

Rotation Detection in Finger Vein Biometrics Using CNNs

Bernhard Prommegger, Georg Wimmer, Andreas Uhl

Auto-TLDR; A CNN based rotation detector for finger vein recognition

3D Facial Matching by Spiral Convolutional Metric Learning and a Biometric Fusion-Net of Demographic Properties

Soha Sadat Mahdi, Nele Nauwelaers, Philip Joris, Giorgos Bouritsas, Sergiy Bokhnyak, Susan Walsh, Mark Shriver, Michael Bronstein, Peter Claes

Auto-TLDR; Multi-biometric Fusion for Biometric Verification using 3D Facial Measures

An Experimental Evaluation of Recent Face Recognition Losses for Deepfake Detection

Yu-Cheng Liu, Chia-Ming Chang, I-Hsuan Chen, Yu Ju Ku, Jun-Cheng Chen

Auto-TLDR; Deepfake Classification and Detection using Loss Functions for Face Recognition

Attribute-Based Quality Assessment for Demographic Estimation in Face Videos

Fabiola Becerra-Riera, Annette Morales-González, Heydi Mendez-Vazquez, Jean-Luc Dugelay

Auto-TLDR; Facial Demographic Estimation in Video Scenarios Using Quality Assessment

Trainable Spectrally Initializable Matrix Transformations in Convolutional Neural Networks

Michele Alberti, Angela Botros, Schuetz Narayan, Rolf Ingold, Marcus Liwicki, Mathias Seuret

Auto-TLDR; Trainable and Spectrally Initializable Matrix Transformations for Neural Networks

Complex-Object Visual Inspection: Empirical Studies on a Multiple Lighting Solution

Maya Aghaei, Matteo Bustreo, Pietro Morerio, Nicolò Carissimi, Alessio Del Bue, Vittorio Murino

Auto-TLDR; A Novel Illumination Setup for Automatic Visual Inspection of Complex Objects

Bridging the Gap between Natural and Medical Images through Deep Colorization

Lia Morra, Luca Piano, Fabrizio Lamberti, Tatiana Tommasi

Auto-TLDR; Transfer Learning for Diagnosis on X-ray Images Using Color Adaptation

Detecting Manipulated Facial Videos: A Time Series Solution

Zhang Zhewei, Ma Can, Gao Meilin, Ding Bowen

Auto-TLDR; Face-Alignment Based Bi-LSTM for Fake Video Detection

Human or Machine? It Is Not What You Write, but How You Write It

Luis Leiva, Moises Diaz, M.A. Ferrer, Réjean Plamondon

Auto-TLDR; Behavioral Biometrics via Handwritten Symbols for Identification and Verification

A Systematic Investigation on Deep Architectures for Automatic Skin Lesions Classification

Pierluigi Carcagni, Marco Leo, Andrea Cuna, Giuseppe Celeste, Cosimo Distante

Auto-TLDR; RegNet: Deep Investigation of Convolutional Neural Networks for Automatic Classification of Skin Lesions

ISP4ML: The Role of Image Signal Processing in Efficient Deep Learning Vision Systems

Patrick Hansen, Alexey Vilkin, Yury Khrustalev, James Stuart Imber, Dumidu Sanjaya Talagala, David Hanwell, Matthew Mattina, Paul Whatmough

Auto-TLDR; Towards Efficient Convolutional Neural Networks with Image Signal Processing

Lightweight Low-Resolution Face Recognition for Surveillance Applications

Yoanna Martínez-Díaz, Heydi Mendez-Vazquez, Luis S. Luevano, Leonardo Chang, Miguel Gonzalez-Mendoza

Auto-TLDR; Efficiency of Lightweight Deep Face Networks on Low-Resolution Surveillance Imagery

Single View Learning in Action Recognition

Gaurvi Goyal, Nicoletta Noceti, Francesca Odone

Auto-TLDR; Cross-View Action Recognition Using Domain Adaptation for Knowledge Transfer

A Novel Disaster Image Data-Set and Characteristics Analysis Using Attention Model

Fahim Faisal Niloy, Arif ., Abu Bakar Siddik Nayem, Anis Sarker, Ovi Paul, M Ashraful Amin, Amin Ahsan Ali, Moinul Islam Zaber, Akmmahbubur Rahman

Auto-TLDR; Attentive Attention Model for Disaster Classification
