Rotation Detection in Finger Vein Biometrics Using CNNs

Bernhard Prommegger,

Georg Wimmer,

Andreas Uhl

Auto-TLDR; A CNN-based rotation detector for finger vein recognition

Similar papers

Finger Vein Recognition and Intra-Subject Similarity Evaluation of Finger Veins Using the CNN Triplet Loss

Georg Wimmer, Bernhard Prommegger, Andreas Uhl

Auto-TLDR; Finger vein recognition using CNNs and hard triplet online selection

A Local Descriptor with Physiological Characteristic for Finger Vein Recognition

Liping Zhang, Weijun Li, Ning Xin

Auto-TLDR; Finger vein-specific local feature descriptors based on physiological characteristics of finger vein patterns

Can You Really Trust the Sensor's PRNU? How Image Content Might Impact the Finger Vein Sensor Identification Performance

Dominik Söllinger, Luca Debiasi, Andreas Uhl

Auto-TLDR; Finger vein imagery can cause the PRNU estimate to be biased by image content

Level Three Synthetic Fingerprint Generation

Andre Wyzykowski, Mauricio Pamplona Segundo, Rubisley Lemes

Auto-TLDR; Synthesis of High-Resolution Fingerprints with Pore Detection Using CycleGAN

Fingerprints, Forever Young?

Roman Kessler, Olaf Henniger, Christoph Busch

Auto-TLDR; Mated Similarity Scores for Fingerprint Recognition: A Hierarchical Linear Model

How Unique Is a Face: An Investigative Study

Michal Balazia, S L Happy, Francois Bremond, Antitza Dantcheva

Auto-TLDR; Uniqueness of Face Recognition: Exploring the Impact of Factors such as image resolution, feature representation, database size, age and gender

A Cross Domain Multi-Modal Dataset for Robust Face Anti-Spoofing

Qiaobin Ji, Shugong Xu, Xudong Chen, Shan Cao, Shunqing Zhang

Auto-TLDR; Cross domain multi-modal FAS dataset GREAT-FASD and several evaluation protocols for academic community

Detection of Makeup Presentation Attacks Based on Deep Face Representations

Christian Rathgeb, Pawel Drozdowski, Christoph Busch

Auto-TLDR; An Attack Detection Scheme for Face Recognition Using Makeup Presentation Attacks

HP2IFS: Head Pose Estimation Exploiting Partitioned Iterated Function Systems

Carmen Bisogni, Michele Nappi, Chiara Pero, Stefano Ricciardi

Auto-TLDR; PIFS based head pose estimation using fractal coding theory and Partitioned Iterated Function Systems

Super-Resolution Guided Pore Detection for Fingerprint Recognition

Syeda Nyma Ferdous, Ali Dabouei, Jeremy Dawson, Nasser M. Nasrabadi

Auto-TLDR; Super-Resolution Generative Adversarial Network for Fingerprint Recognition Using Pore Features

Are Spoofs from Latent Fingerprints a Real Threat for the Best State-Of-Art Liveness Detectors?

Roberto Casula, Giulia Orrù, Daniele Angioni, Xiaoyi Feng, Gian Luca Marcialis, Fabio Roli

Auto-TLDR; ScreenSpoof: Attacks using latent fingerprints against state-of-art fingerprint liveness detectors and verification systems

Inner Eye Canthus Localization for Human Body Temperature Screening

Claudio Ferrari, Lorenzo Berlincioni, Marco Bertini, Alberto Del Bimbo

Auto-TLDR; Automatic Localization of the Inner Eye Canthus in Thermal Face Images using 3D Morphable Face Model

One-Shot Representational Learning for Joint Biometric and Device Authentication

Auto-TLDR; Joint Biometric and Device Recognition from a Single Biometric Image

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

Spatial Bias in Vision-Based Voice Activity Detection

Kalin Stefanov, Mohammad Adiban, Giampiero Salvi

Auto-TLDR; Spatial Bias in Vision-based Voice Activity Detection in Multiparty Human-Human Interactions

Polarimetric Image Augmentation

Marc Blanchon, Fabrice Meriaudeau, Olivier Morel, Ralph Seulin, Desire Sidibe

Auto-TLDR; Polarimetric Augmentation for Deep Learning in Robotics Applications

Documents Counterfeit Detection through a Deep Learning Approach

Darwin Danilo Saire Pilco, Salvatore Tabbone

Auto-TLDR; End-to-End Learning for Counterfeit Documents Detection using Deep Neural Network

User-Independent Gaze Estimation by Extracting Pupil Parameter and Its Mapping to the Gaze Angle

Auto-TLDR; Gaze Point Estimation using Pupil Shape for Generalization

Deep Gait Relative Attribute Using a Signed Quadratic Contrastive Loss

Yuta Hayashi, Shehata Allam, Yasushi Makihara, Daigo Muramatsu, Yasushi Yagi

Auto-TLDR; Signal-Contrastive Loss for Gait Attributes Estimation

Exploring Seismocardiogram Biometrics with Wavelet Transform

Po-Ya Hsu, Po-Han Hsu, Hsin-Li Liu

Auto-TLDR; Seismocardiogram Biometric Matching Using Wavelet Transform and Deep Learning Models

Recovery of 2D and 3D Layout Information through an Advanced Image Stitching Algorithm Using Scanning Electron Microscope Images

Aayush Singla, Bernhard Lippmann, Helmut Graeb

Auto-TLDR; Image Stitching for True Geometrical Layout Recovery in Nanoscale Dimension

Generic Document Image Dewarping by Probabilistic Discretization of Vanishing Points

Gilles Simon, Salvatore Tabbone

Auto-TLDR; Robust Document Dewarping using vanishing points

Pose-Robust Face Recognition by Deep Meta Capsule Network-Based Equivariant Embedding

Fangyu Wu, Jeremy Simon Smith, Wenjin Lu, Bailing Zhang

Auto-TLDR; Deep Meta Capsule Network-based Equivariant Embedding Model for Pose-Robust Face Recognition

ID Documents Matching and Localization with Multi-Hypothesis Constraints

Guillaume Chiron, Nabil Ghanmi, Ahmad Montaser Awal

Auto-TLDR; Identity Document Localization in the Wild Using Multi-hypothesis Exploration

Wireless Localisation in WiFi Using Novel Deep Architectures

Peizheng Li, Han Cui, Aftab Khan, Usman Raza, Robert Piechocki, Angela Doufexi, Tim Farnham

Auto-TLDR; Deep Neural Network for Localisation of WiFi Devices in Indoor Environments

3D Dental Biometrics: Automatic Pose-Invariant Dental Arch Extraction and Matching

Auto-TLDR; Automatic Dental Arch Extraction and Matching for 3D Dental Identification using Laser-Scanned Plasters

Two-Level Attention-Based Fusion Learning for RGB-D Face Recognition

Hardik Uppal, Alireza Sepas-Moghaddam, Michael Greenspan, Ali Etemad

Auto-TLDR; Fused RGB-D Facial Recognition using Attention-Aware Feature Fusion

Feasibility Study of Using MyoBand for Learning Electronic Keyboard

Auto-TLDR; Autonomous Finger-Based Music Instrument Learning Using Electromyography with MyoBand and Machine Learning

Face Anti-Spoofing Using Spatial Pyramid Pooling

Lei Shi, Zhuo Zhou, Zhenhua Guo

Auto-TLDR; Spatial Pyramid Pooling for Face Anti-Spoofing

Better Prior Knowledge Improves Human-Pose-Based Extrinsic Camera Calibration

Olivier Moliner, Sangxia Huang, Kalle Åström

Auto-TLDR; Improving Human-pose-based Extrinsic Calibration for Multi-Camera Systems

Joint Learning Multiple Curvature Descriptor for 3D Palmprint Recognition

Lunke Fei, Bob Zhang, Jie Wen, Chunwei Tian, Peng Liu, Shuping Zhao

Auto-TLDR; Joint Feature Learning for 3D palmprint recognition using curvature data vectors

Rotational Adjoint Methods for Learning-Free 3D Human Pose Estimation from IMU Data

Caterina Emilia Agelide Buizza, Yiannis Demiris

Auto-TLDR; Learning-free 3D Human Pose Estimation from Inertial Measurement Unit Data

Hybrid Approach for 3D Head Reconstruction: Using Neural Networks and Visual Geometry

Oussema Bouafif, Bogdan Khomutenko, Mohammed Daoudi

Auto-TLDR; Recovering 3D Head Geometry from a Single Image using Deep Learning and Geometric Techniques

Face Anti-Spoofing Based on Dynamic Color Texture Analysis Using Local Directional Number Pattern

Junwei Zhou, Ke Shu, Peng Liu, Jianwen Xiang, Shengwu Xiong

Auto-TLDR; LDN-TOP Representation followed by ProCRC Classification for Face Anti-Spoofing

RISEdb: A Novel Indoor Localization Dataset

Carlos Sanchez Belenguer, Erik Wolfart, Álvaro Casado Coscollá, Vitor Sequeira

Auto-TLDR; Indoor Localization Using LiDAR SLAM and Smartphones: A Benchmarking Dataset

P2D: A Self-Supervised Method for Depth Estimation from Polarimetry

Marc Blanchon, Desire Sidibe, Olivier Morel, Ralph Seulin, Daniel Braun, Fabrice Meriaudeau

Auto-TLDR; Polarimetric Regularization for Monocular Depth Estimation

Understanding When Spatial Transformer Networks Do Not Support Invariance, and What to Do about It

Lukas Finnveden, Ylva Jansson, Tony Lindeberg

Auto-TLDR; Spatial Transformer Networks are unable to support invariance when transforming CNN feature maps

Position-Aware and Symmetry Enhanced GAN for Radial Distortion Correction

Yongjie Shi, Xin Tong, Jingsi Wen, He Zhao, Xianghua Ying, Jinshi Hongbin Zha

Auto-TLDR; Generative Adversarial Network for Radial Distorted Image Correction

Writer Identification Using Deep Neural Networks: Impact of Patch Size and Number of Patches

Akshay Punjabi, José Ramón Prieto Fontcuberta, Enrique Vidal

Auto-TLDR; Writer Recognition Using Deep Neural Networks for Handwritten Text Images

ResMax: Detecting Voice Spoofing Attacks with Residual Network and Max Feature Map

Il-Youp Kwak, Sungsu Kwag, Junhee Lee, Jun Ho Huh, Choong-Hoon Lee, Youngbae Jeon, Jeonghwan Hwang, Ji Won Yoon

Auto-TLDR; ASVspoof 2019: A Lightweight Automatic Speaker Verification Spoofing and Countermeasures System

Lookalike Disambiguation: Improving Face Identification Performance at Top Ranks

Auto-TLDR; Lookalike Face Identification Using a Disambiguator for Lookalike Images

Viability of Optical Coherence Tomography for Iris Presentation Attack Detection

Auto-TLDR; Optical Coherence Tomography Imaging for Iris Presentation Attack Detection

Sequential Non-Rigid Factorisation for Head Pose Estimation

Stefania Cristina, Kenneth Patrick Camilleri

Auto-TLDR; Sequential Shape-and-Motion Factorisation for Head Pose Estimation in Eye-Gaze Tracking

3D Facial Matching by Spiral Convolutional Metric Learning and a Biometric Fusion-Net of Demographic Properties

Soha Sadat Mahdi, Nele Nauwelaers, Philip Joris, Giorgos Bouritsas, Imperial London, Sergiy Bokhnyak, Susan Walsh, Mark Shriver, Michael Bronstein, Peter Claes

Auto-TLDR; Multi-biometric Fusion for Biometric Verification using 3D Facial Measures

Transfer Learning through Weighted Loss Function and Group Normalization for Vessel Segmentation from Retinal Images

Abdullah Sarhan, Jon Rokne, Reda Alhajj, Andrew Crichton

Auto-TLDR; Deep Learning for Segmentation of Blood Vessels in Retinal Images

Learning Non-Rigid Surface Reconstruction from Spatio-Temporal Image Patches

Matteo Pedone, Abdelrahman Mostafa, Janne Heikkilä

Auto-TLDR; Dense Spatio-Temporal Depth Maps of Deformable Objects from Video Sequences

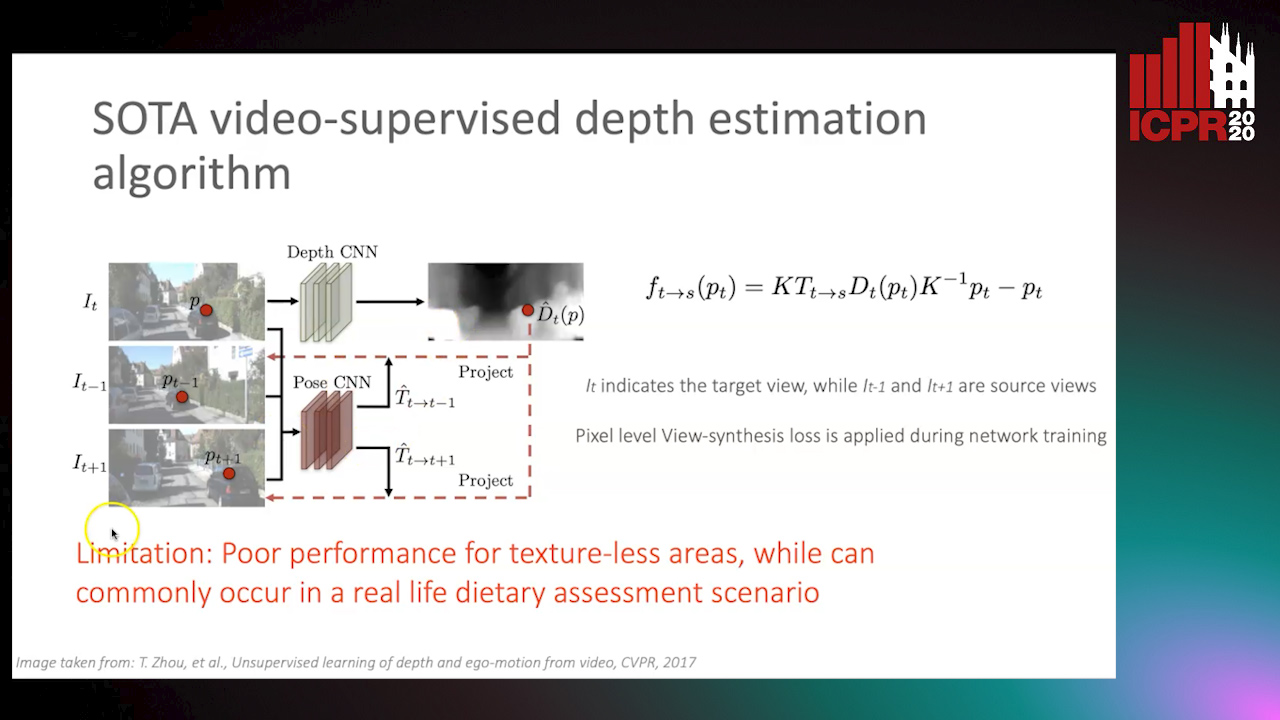

Partially Supervised Multi-Task Network for Single-View Dietary Assessment

Ya Lu, Thomai Stathopoulou, Stavroula Mougiakakou

Auto-TLDR; Food Volume Estimation from a Single Food Image via Geometric Understanding and Semantic Prediction

PolyLaneNet: Lane Estimation Via Deep Polynomial Regression

Talles Torres, Rodrigo Berriel, Thiago Paixão, Claudine Badue, Alberto F. De Souza, Thiago Oliveira-Santos

Auto-TLDR; Real-Time Lane Detection with Deep Polynomial Regression
