Deep Gait Relative Attribute Using a Signed Quadratic Contrastive Loss

Yuta Hayashi,

Shehata Allam,

Yasushi Makihara,

Daigo Muramatsu,

Yasushi Yagi

Auto-TLDR; Signed Quadratic Contrastive Loss for Gait Attribute Estimation

Similar papers

Gait Recognition Using Multi-Scale Partial Representation Transformation with Capsules

Alireza Sepas-Moghaddam, Saeed Ghorbani, Nikolaus F. Troje, Ali Etemad

Auto-TLDR; Learning to Transfer Multi-scale Partial Gait Representations using Capsule Networks for Gait Recognition

Part-Based Collaborative Spatio-Temporal Feature Learning for Cloth-Changing Gait Recognition

Lingxiang Yao, Worapan Kusakunniran, Qiang Wu, Jian Zhang, Jingsong Xu

Auto-TLDR; Part-based Spatio-Temporal Feature Learning for Gait Recognition

A Flatter Loss for Bias Mitigation in Cross-Dataset Facial Age Estimation

Ali Akbari, Muhammad Awais, Zhenhua Feng, Ammarah Farooq, Josef Kittler

Auto-TLDR; Cross-dataset Age Estimation for Neural Network Training

Multi-Label Contrastive Focal Loss for Pedestrian Attribute Recognition

Xiaoqiang Zheng, Zhenxia Yu, Lin Chen, Fan Zhu, Shilong Wang

Auto-TLDR; Multi-label Contrastive Focal Loss for Pedestrian Attribute Recognition

Learning Natural Thresholds for Image Ranking

Somayeh Keshavarz, Quang Nhat Tran, Richard Souvenir

Auto-TLDR; Image Representation Learning and Label Discretization for Natural Image Ranking

Space-Time Domain Tensor Neural Networks: An Application on Human Pose Classification

Konstantinos Makantasis, Athanasios Voulodimos, Anastasios Doulamis, Nikolaos Doulamis, Nikolaos Bakalos

Auto-TLDR; Tensor-Based Neural Network for Spatiotemporal Pose Classification using Three-Dimensional Skeleton Data

Deep Top-Rank Counter Metric for Person Re-Identification

Chen Chen, Hao Dou, Xiyuan Hu, Silong Peng

Auto-TLDR; Deep Top-Rank Counter Metric for Person Re-identification

Video Analytics Gait Trend Measurement for Fall Prevention and Health Monitoring

Lawrence O'Gorman, Xinyi Liu, Md Imran Sarker, Mariofanna Milanova

Auto-TLDR; Towards Health Monitoring of Gait with Deep Learning

Attentive Part-Aware Networks for Partial Person Re-Identification

Lijuan Huo, Chunfeng Song, Zhengyi Liu, Zhaoxiang Zhang

Auto-TLDR; Part-Aware Learning for Partial Person Re-identification

Gender Classification Using Video Sequences of Body Sway Recorded by Overhead Camera

Takuya Kamitani, Yuta Yamaguchi, Shintaro Nakatani, Masashi Nishiyama, Yoshio Iwai

Auto-TLDR; Spatio-Temporal Feature for Gender Classification of a Standing Person Using Body Stance Using Time-Series Signals

SL-DML: Signal Level Deep Metric Learning for Multimodal One-Shot Action Recognition

Raphael Memmesheimer, Nick Theisen, Dietrich Paulus

Auto-TLDR; One-Shot Action Recognition using Metric Learning

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

Learning Semantic Representations Via Joint 3D Face Reconstruction and Facial Attribute Estimation

Zichun Weng, Youjun Xiang, Xianfeng Li, Juntao Liang, Wanliang Huo, Yuli Fu

Auto-TLDR; Joint Framework for 3D Face Reconstruction with Facial Attribute Estimation

An Unsupervised Approach towards Varying Human Skin Tone Using Generative Adversarial Networks

Debapriya Roy, Diganta Mukherjee, Bhabatosh Chanda

Auto-TLDR; Unsupervised Skin Tone Change Using Augmented Reality Based Models

Siamese-Structure Deep Neural Network Recognizing Changes in Facial Expression According to the Degree of Smiling

Kazuaki Kondo, Taichi Nakamura, Yuichi Nakamura, Shin'Ichi Satoh

Auto-TLDR; A Siamese-Structure Deep Neural Network for Happiness Recognition

How Unique Is a Face: An Investigative Study

Michal Balazia, S L Happy, Francois Bremond, Antitza Dantcheva

Auto-TLDR; Uniqueness of Face Recognition: Exploring the Impact of Factors such as image resolution, feature representation, database size, age and gender

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

Abstract Slides Poster Similar

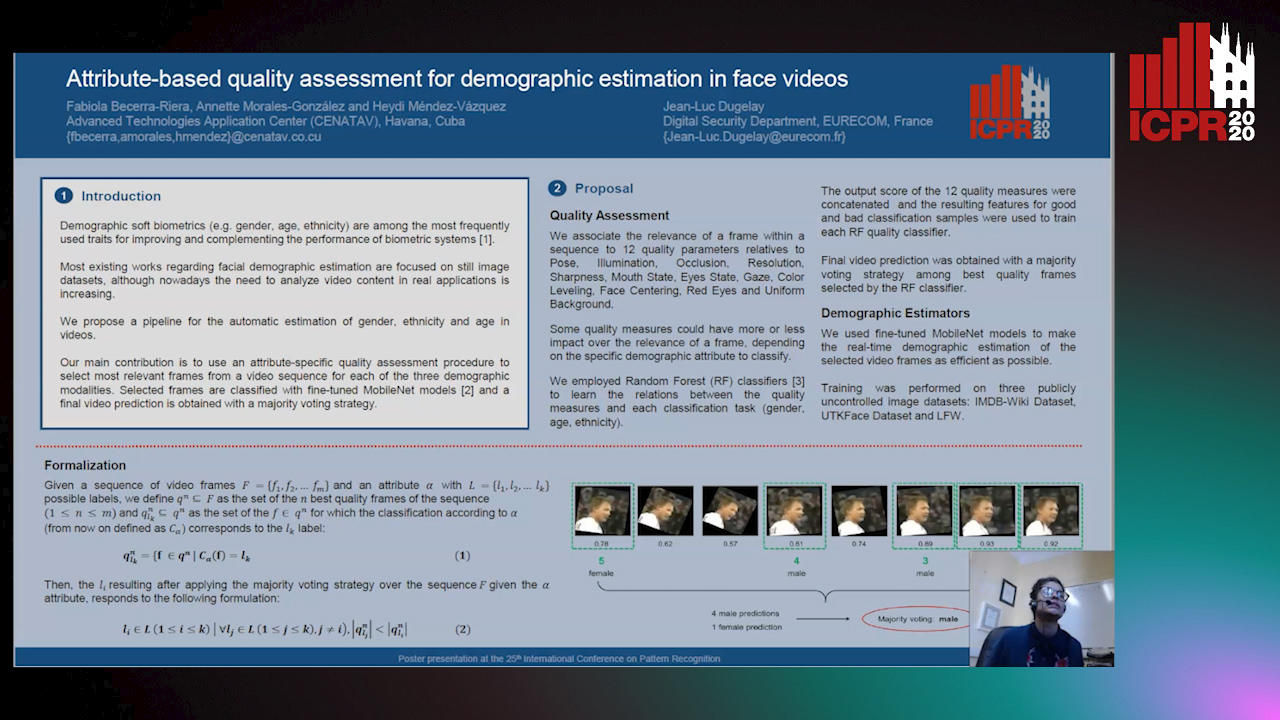

Attribute-Based Quality Assessment for Demographic Estimation in Face Videos

Fabiola Becerra-Riera, Annette Morales-González, Heydi Mendez-Vazquez, Jean-Luc Dugelay

Auto-TLDR; Facial Demographic Estimation in Video Scenarios Using Quality Assessment

Open-World Group Retrieval with Ambiguity Removal: A Benchmark

Ling Mei, Jian-Huang Lai, Zhanxiang Feng, Xiaohua Xie

Auto-TLDR; P2GSM-AR: Re-identifying changing groups of people under the open-world and group-ambiguity scenarios

3D Facial Matching by Spiral Convolutional Metric Learning and a Biometric Fusion-Net of Demographic Properties

Soha Sadat Mahdi, Nele Nauwelaers, Philip Joris, Giorgos Bouritsas, Imperial London, Sergiy Bokhnyak, Susan Walsh, Mark Shriver, Michael Bronstein, Peter Claes

Auto-TLDR; Multi-biometric Fusion for Biometric Verification using 3D Facial Measures

What and How? Jointly Forecasting Human Action and Pose

Yanjun Zhu, Yanxia Zhang, Qiong Liu, Andreas Girgensohn

Auto-TLDR; Forecasting Human Actions and Motion Trajectories with Joint Action Classification and Pose Regression

Rethinking ReID: Multi-Feature Fusion Person Re-Identification Based on Orientation Constraints

Mingjing Ai, Guozhi Shan, Bo Liu, Tianyang Liu

Auto-TLDR; Person Re-identification with Orientation Constrained Network

LFIR2Pose: Pose Estimation from an Extremely Low-Resolution FIR Image Sequence

Saki Iwata, Yasutomo Kawanishi, Daisuke Deguchi, Ichiro Ide, Hiroshi Murase, Tomoyoshi Aizawa

Auto-TLDR; LFIR2Pose: Human Pose Estimation from a Low-Resolution Far-InfraRed Image Sequence

Rank-Based Ordinal Classification

Auto-TLDR; Ordinal Classification with Order

Vision-Based Multi-Modal Framework for Action Recognition

Djamila Romaissa Beddiar, Mourad Oussalah, Brahim Nini

Auto-TLDR; Multi-modal Framework for Human Activity Recognition Using RGB, Depth and Skeleton Data

Attentive Visual Semantic Specialized Network for Video Captioning

Jesus Perez-Martin, Benjamin Bustos, Jorge Pérez

Auto-TLDR; Adaptive Visual Semantic Specialized Network for Video Captioning

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

Abstract Slides Poster Similar

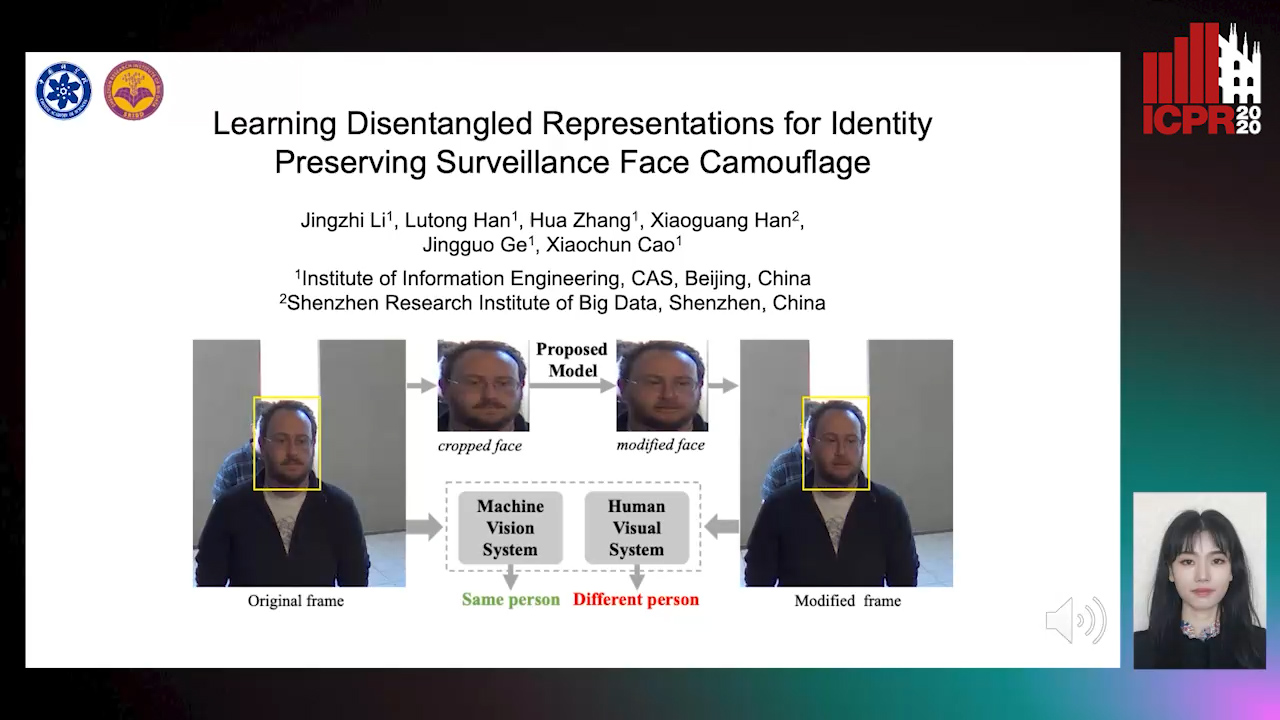

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

A Prototype-Based Generalized Zero-Shot Learning Framework for Hand Gesture Recognition

Jinting Wu, Yujia Zhang, Xiao-Guang Zhao

Auto-TLDR; Generalized Zero-Shot Learning for Hand Gesture Recognition

A Multi-Task Neural Network for Action Recognition with 3D Key-Points

Rongxiao Tang, Wang Luyang, Zhenhua Guo

Auto-TLDR; Multi-task Neural Network for Action Recognition and 3D Human Pose Estimation

One-Shot Representational Learning for Joint Biometric and Device Authentication

Auto-TLDR; Joint Biometric and Device Recognition from a Single Biometric Image

Self-Supervised Joint Encoding of Motion and Appearance for First Person Action Recognition

Mirco Planamente, Andrea Bottino, Barbara Caputo

Auto-TLDR; A Single Stream Architecture for Egocentric Action Recognition from the First-Person Point of View

Pose Variation Adaptation for Person Re-Identification

Lei Zhang, Na Jiang, Qishuai Diao, Yue Xu, Zhong Zhou, Wei Wu

Auto-TLDR; Pose Transfer Generative Adversarial Network for Person Re-identification

Not 3D Re-ID: Simple Single Stream 2D Convolution for Robust Video Re-Identification

Auto-TLDR; ResNet50-IBN for Video-based Person Re-Identification using Single Stream 2D Convolution Network

RGB-Infrared Person Re-Identification Via Image Modality Conversion

Huangpeng Dai, Qing Xie, Yanchun Ma, Yongjian Liu, Shengwu Xiong

Auto-TLDR; CE2L: A Novel Network for Cross-Modality Re-identification with Feature Alignment

Adaptive L2 Regularization in Person Re-Identification

Xingyang Ni, Liang Fang, Heikki Juhani Huttunen

Auto-TLDR; AdaptiveReID: Adaptive L2 Regularization for Person Re-identification

Classifying Eye-Tracking Data Using Saliency Maps

Shafin Rahman, Sejuti Rahman, Omar Shahid, Md. Tahmeed Abdullah, Jubair Ahmed Sourov

Auto-TLDR; Saliency-based Feature Extraction for Automatic Classification of Eye-tracking Data

Person Recognition with HGR Maximal Correlation on Multimodal Data

Yihua Liang, Fei Ma, Yang Li, Shao-Lun Huang

Auto-TLDR; A correlation-based multimodal person recognition framework that learns discriminative embeddings of persons by joint learning visual features and audio features

DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Gaoang Wang, Chen Lin, Tianqiang Liu, Mingwei He, Jiebo Luo

Auto-TLDR; DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Top-DB-Net: Top DropBlock for Activation Enhancement in Person Re-Identification

Auto-TLDR; Top-DB-Net for Person Re-Identification using Top DropBlock

Single View Learning in Action Recognition

Gaurvi Goyal, Nicoletta Noceti, Francesca Odone

Auto-TLDR; Cross-View Action Recognition Using Domain Adaptation for Knowledge Transfer

A Two-Stream Recurrent Network for Skeleton-Based Human Interaction Recognition

Qianhui Men, Edmond S. L. Ho, Hubert P. H. Shum, Howard Leung

Auto-TLDR; Two-Stream Recurrent Neural Network for Human-Human Interaction Recognition

Age Gap Reducer-GAN for Recognizing Age-Separated Faces

Daksha Yadav, Naman Kohli, Mayank Vatsa, Richa Singh, Afzel Noore

Auto-TLDR; Generative Adversarial Network for Age-separated Face Recognition

VSB^2-Net: Visual-Semantic Bi-Branch Network for Zero-Shot Hashing

Xin Li, Xiangfeng Wang, Bo Jin, Wenjie Zhang, Jun Wang, Hongyuan Zha

Auto-TLDR; VSB^2-Net: inductive zero-shot hashing for image retrieval

Abstract Slides Poster Similar

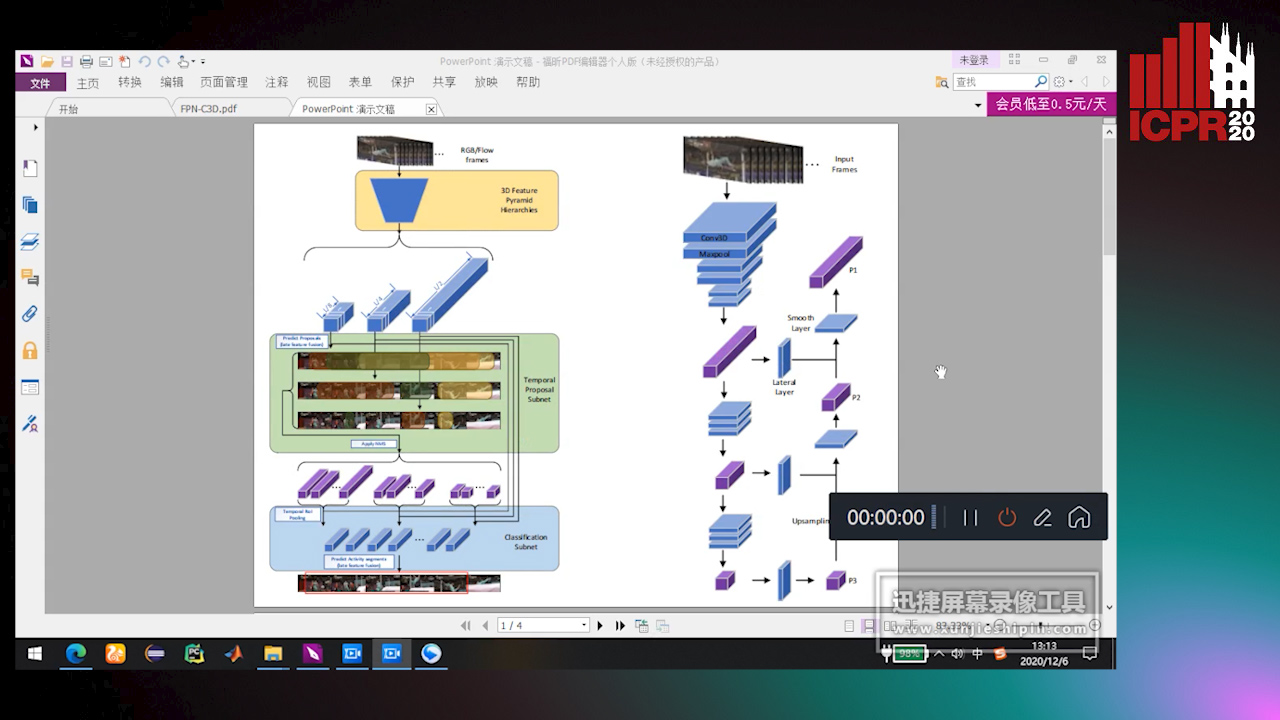

Feature Pyramid Hierarchies for Multi-Scale Temporal Action Detection

Auto-TLDR; Temporal Action Detection using Pyramid Hierarchies and Multi-scale Feature Maps

Confidence Calibration for Deep Renal Biopsy Immunofluorescence Image Classification

Federico Pollastri, Juan Maroñas, Federico Bolelli, Giulia Ligabue, Roberto Paredes, Riccardo Magistroni, Costantino Grana

Auto-TLDR; A Probabilistic Convolutional Neural Network for Immunofluorescence Classification in Renal Biopsy

Progressive Learning Algorithm for Efficient Person Re-Identification

Zhen Li, Hanyang Shao, Liang Niu, Nian Xue

Auto-TLDR; Progressive Learning Algorithm for Large-Scale Person Re-Identification

Using Scene Graphs for Detecting Visual Relationships

Anurag Tripathi, Siddharth Srivastava, Brejesh Lall, Santanu Chaudhury

Auto-TLDR; Relationship Detection using Context Aligned Scene Graph Embeddings
