Classifying Eye-Tracking Data Using Saliency Maps

Shafin Rahman, Sejuti Rahman, Omar Shahid, Md. Tahmeed Abdullah, Jubair Ahmed Sourov

Auto-TLDR; Saliency-based Feature Extraction for Automatic Classification of Eye-tracking Data

Similar papers

A General End-To-End Method for Characterizing Neuropsychiatric Disorders Using Free-Viewing Visual Scanning Tasks

Hong Yue Sean Liu, Jonathan Chung, Moshe Eizenman

Auto-TLDR; A general, data-driven, end-to-end framework that extracts relevant features of attentional bias from visual scanning behaviour and uses these features

GazeMAE: General Representations of Eye Movements Using a Micro-Macro Autoencoder

Louise Gillian C. Bautista, Prospero Naval

Auto-TLDR; Fast and Slow Eye Movement Representations for Sentiment-agnostic Eye Tracking

Collaborative Human Machine Attention Module for Character Recognition

Chetan Ralekar, Tapan Gandhi, Santanu Chaudhury

Auto-TLDR; A Collaborative Human-Machine Attention Module for Deep Neural Networks

Fully Convolutional Neural Networks for Raw Eye Tracking Data Segmentation, Generation, and Reconstruction

Wolfgang Fuhl, Yao Rong, Enkelejda Kasneci

Auto-TLDR; Semantic Segmentation of Eye Tracking Data with Fully Convolutional Neural Networks

Saliency Prediction on Omnidirectional Images with Brain-Like Shallow Neural Network

Zhu Dandan, Chen Yongqing, Min Xiongkuo, Zhao Defang, Zhu Yucheng, Zhou Qiangqiang, Yang Xiaokang, Tian Han

Auto-TLDR; A Brain-like Neural Network for Saliency Prediction of Head Fixations on Omnidirectional Images

User-Independent Gaze Estimation by Extracting Pupil Parameter and Its Mapping to the Gaze Angle

Auto-TLDR; Gaze Point Estimation using Pupil Shape for Generalization

Translating Adult's Focus of Attention to Elderly's

Onkar Krishna, Go Irie, Takahito Kawanishi, Kunio Kashino, Kiyoharu Aizawa

Auto-TLDR; Elderly Focus of Attention Prediction Using Deep Image-to-Image Translation

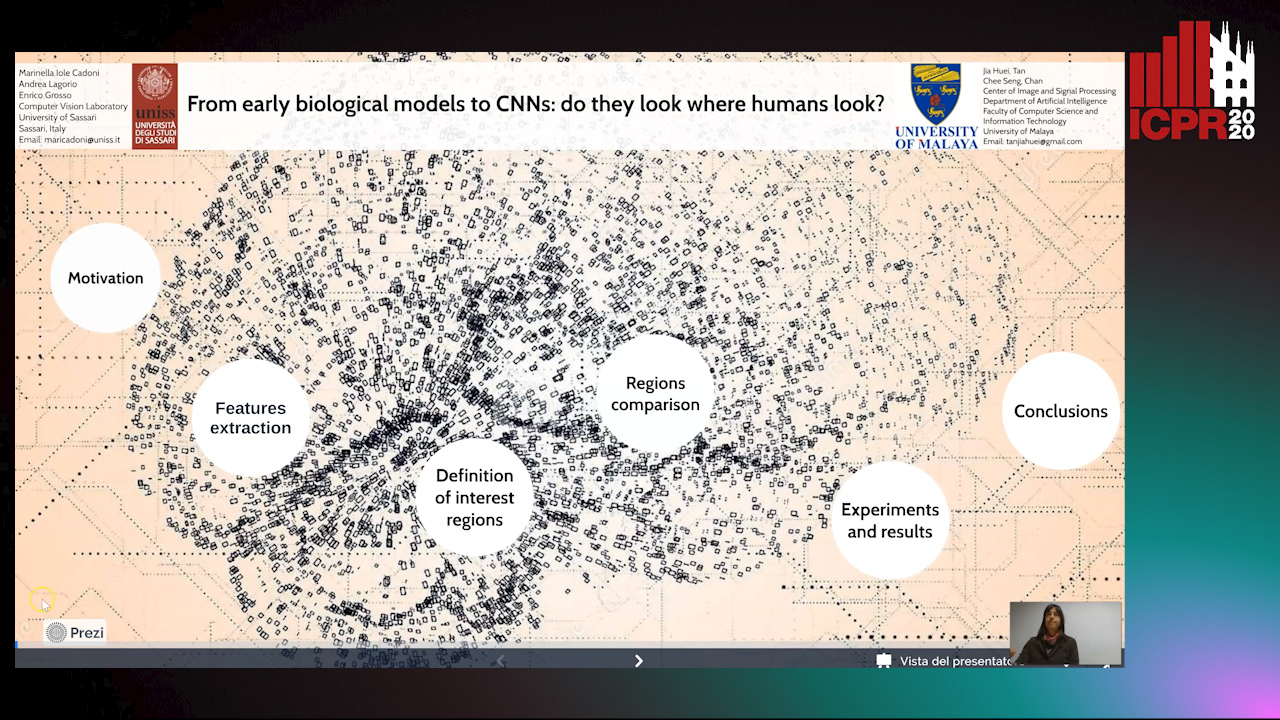

From Early Biological Models to CNNs: Do They Look Where Humans Look?

Marinella Iole Cadoni, Andrea Lagorio, Enrico Grosso, Jia Huei Tan, Chee Seng Chan

Auto-TLDR; Comparing Neural Networks to Human Fixations for Semantic Learning

FastSal: A Computationally Efficient Network for Visual Saliency Prediction

Auto-TLDR; MobileNetV2: A Convolutional Neural Network for Saliency Prediction

Responsive Social Smile: A Machine-Learning Based Multimodal Behavior Assessment Framework towards Early Stage Autism Screening

Yueran Pan, Kunjing Cai, Ming Cheng, Xiaobing Zou, Ming Li

Auto-TLDR; Responsive Social Smile: A Machine Learning-based Assessment Framework for Early ASD Screening

Adaptive Feature Fusion Network for Gaze Tracking in Mobile Tablets

Yiwei Bao, Yihua Cheng, Yunfei Liu, Feng Lu

Auto-TLDR; Adaptive Feature Fusion Network for Multi-stream Gaze Estimation in Mobile Tablets

TSMSAN: A Three-Stream Multi-Scale Attentive Network for Video Saliency Detection

Jingwen Yang, Guanwen Zhang, Wei Zhou

Auto-TLDR; Three-stream Multi-scale attentive network for video saliency detection in dynamic scenes

PrivAttNet: Predicting Privacy Risks in Images Using Visual Attention

Chen Zhang, Thivya Kandappu, Vigneshwaran Subbaraju

Auto-TLDR; PrivAttNet: A Visual Attention Based Approach for Privacy Sensitivity in Images

Explainable Online Validation of Machine Learning Models for Practical Applications

Wolfgang Fuhl, Yao Rong, Thomas Motz, Michael Scheidt, Andreas Markus Hartel, Andreas Koch, Enkelejda Kasneci

Auto-TLDR; A Reformulation of Regression and Classification for Machine Learning Algorithm Validation

Utilising Visual Attention Cues for Vehicle Detection and Tracking

Feiyan Hu, Venkatesh Gurram Munirathnam, Noel E O'Connor, Alan Smeaton, Suzanne Little

Auto-TLDR; Visual Attention for Object Detection and Tracking in Driver-Assistance Systems

Detection and Correspondence Matching of Corneal Reflections for Eye Tracking Using Deep Learning

Soumil Chugh, Braiden Brousseau, Jonathan Rose, Moshe Eizenman

Auto-TLDR; A Fully Convolutional Neural Network for Corneal Reflection Detection and Matching in Extended Reality Eye Tracking Systems

Audio-Video Detection of the Active Speaker in Meetings

Francisco Madrigal, Frederic Lerasle, Lionel Pibre, Isabelle Ferrané

Auto-TLDR; Active Speaker Detection with Visual and Contextual Information from Meeting Context

Electroencephalography Signal Processing Based on Textural Features for Monitoring the Driver’s State by a Brain-Computer Interface

Giulia Orrù, Marco Micheletto, Fabio Terranova, Gian Luca Marcialis

Auto-TLDR; One-dimensional Local Binary Pattern Algorithm for Estimating Driver Vigilance in a Brain-Computer Interface System

Exploring Spatial-Temporal Representations for fNIRS-based Intimacy Detection via an Attention-enhanced Cascade Convolutional Recurrent Neural Network

Chao Li, Qian Zhang, Ziping Zhao

Auto-TLDR; Intimate Relationship Prediction by Attention-enhanced Cascade Convolutional Recurrent Neural Network Using Functional Near-Infrared Spectroscopy

Attribute-Based Quality Assessment for Demographic Estimation in Face Videos

Fabiola Becerra-Riera, Annette Morales-González, Heydi Mendez-Vazquez, Jean-Luc Dugelay

Auto-TLDR; Facial Demographic Estimation in Video Scenarios Using Quality Assessment

Understanding Integrated Gradients with SmoothTaylor for Deep Neural Network Attribution

Gary Shing Wee Goh, Sebastian Lapuschkin, Leander Weber, Wojciech Samek, Alexander Binder

Auto-TLDR; SmoothTaylor: Bridging Integrated Gradients and SmoothGrad from the Perspective of Taylor's Theorem

Deep Gait Relative Attribute Using a Signed Quadratic Contrastive Loss

Yuta Hayashi, Shehata Allam, Yasushi Makihara, Daigo Muramatsu, Yasushi Yagi

Auto-TLDR; Signed Quadratic Contrastive Loss for Gait Attribute Estimation

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

Real-Time Driver Drowsiness Detection Using Facial Action Units

Malaika Vijay, Nandagopal Netrakanti Vinayak, Maanvi Nunna, Subramanyam Natarajan

Auto-TLDR; Real-Time Detection of Driver Drowsiness from Facial Action Units Using Extreme Gradient Boosting

Quantified Facial Temporal-Expressiveness Dynamics for Affect Analysis

Md Taufeeq Uddin, Shaun Canavan

Auto-TLDR; Quantified Facial Temporal-Expressiveness Dynamics for Affect Analysis

Assessing the Severity of Health States Based on Social Media Posts

Shweta Yadav, Joy Prakash Sain, Amit Sheth, Asif Ekbal, Sriparna Saha, Pushpak Bhattacharyya

Auto-TLDR; A Multiview Learning Framework for Assessment of Health State in Online Health Communities

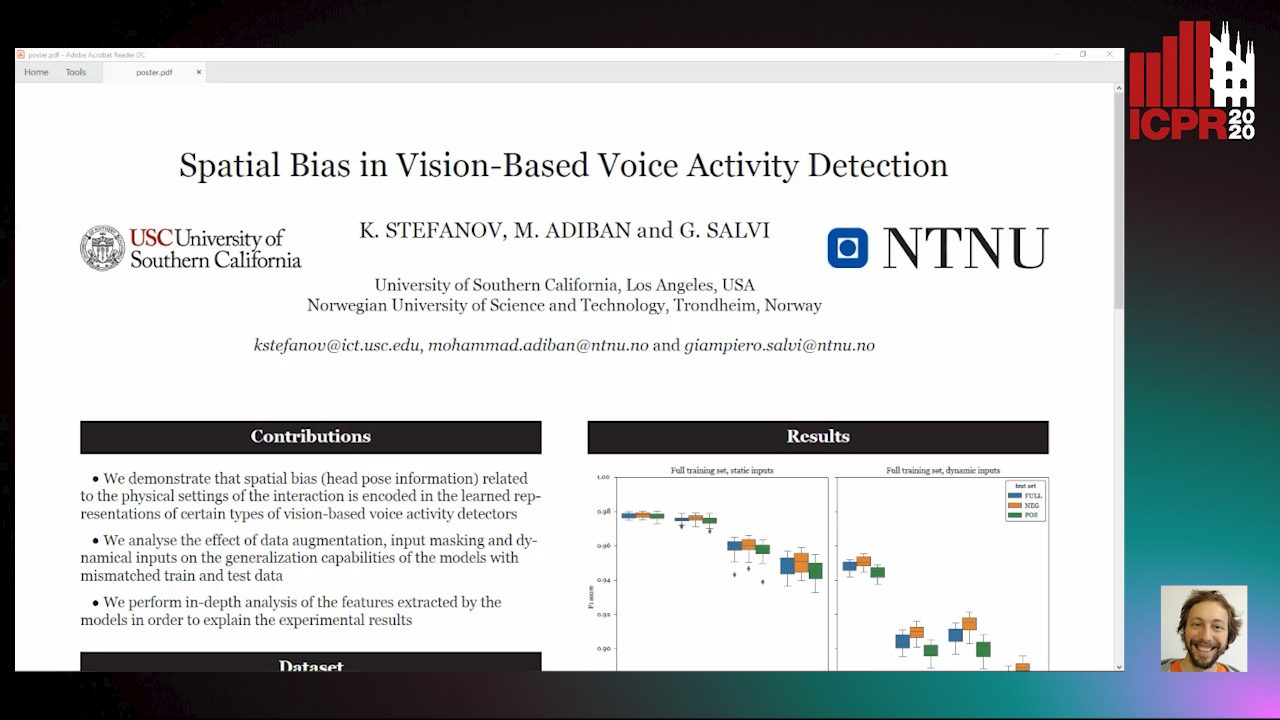

Spatial Bias in Vision-Based Voice Activity Detection

Kalin Stefanov, Mohammad Adiban, Giampiero Salvi

Auto-TLDR; Spatial Bias in Vision-based Voice Activity Detection in Multiparty Human-Human Interactions

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

MRP-Net: A Light Multiple Region Perception Neural Network for Multi-Label AU Detection

Yang Tang, Shuang Chen, Honggang Zhang, Gang Wang, Rui Yang

Auto-TLDR; MRP-Net: A Fast and Light Neural Network for Facial Action Unit Detection

Video Face Manipulation Detection through Ensemble of CNNs

Nicolo Bonettini, Edoardo Daniele Cannas, Sara Mandelli, Luca Bondi, Paolo Bestagini, Stefano Tubaro

Auto-TLDR; Face Manipulation Detection in Video Sequences Using Convolutional Neural Networks

Pose-Aware Multi-Feature Fusion Network for Driver Distraction Recognition

Mingyan Wu, Xi Zhang, Linlin Shen, Hang Yu

Auto-TLDR; Multi-Feature Fusion Network for Distracted Driving Detection using Pose Estimation

Depth Videos for the Classification of Micro-Expressions

Ankith Jain Rakesh Kumar, Bir Bhanu, Christopher Casey, Sierra Cheung, Aaron Seitz

Auto-TLDR; RGB-D Dataset for the Classification of Facial Micro-expressions

Exposing Deepfake Videos by Tracking Eye Movements

Meng Li, Beibei Liu, Yujiang Hu, Yufei Wang

Auto-TLDR; A Novel Approach to Detecting Deepfake Videos

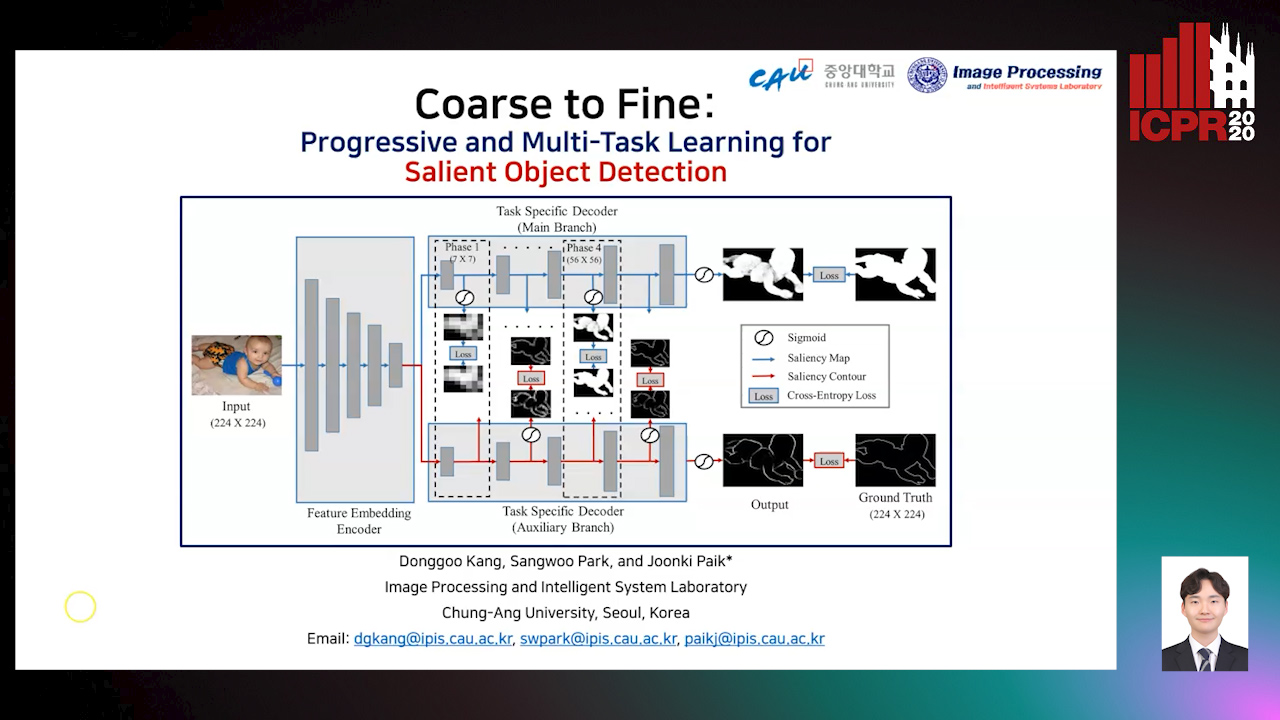

Coarse to Fine: Progressive and Multi-Task Learning for Salient Object Detection

Dong-Goo Kang, Sangwoo Park, Joonki Paik

Auto-TLDR; Progressive and Multi-Task Learning Scheme for Salient Object Detection

An Experimental Evaluation of Recent Face Recognition Losses for Deepfake Detection

Yu-Cheng Liu, Chia-Ming Chang, I-Hsuan Chen, Yu Ju Ku, Jun-Cheng Chen

Auto-TLDR; Deepfake Classification and Detection using Loss Functions for Face Recognition

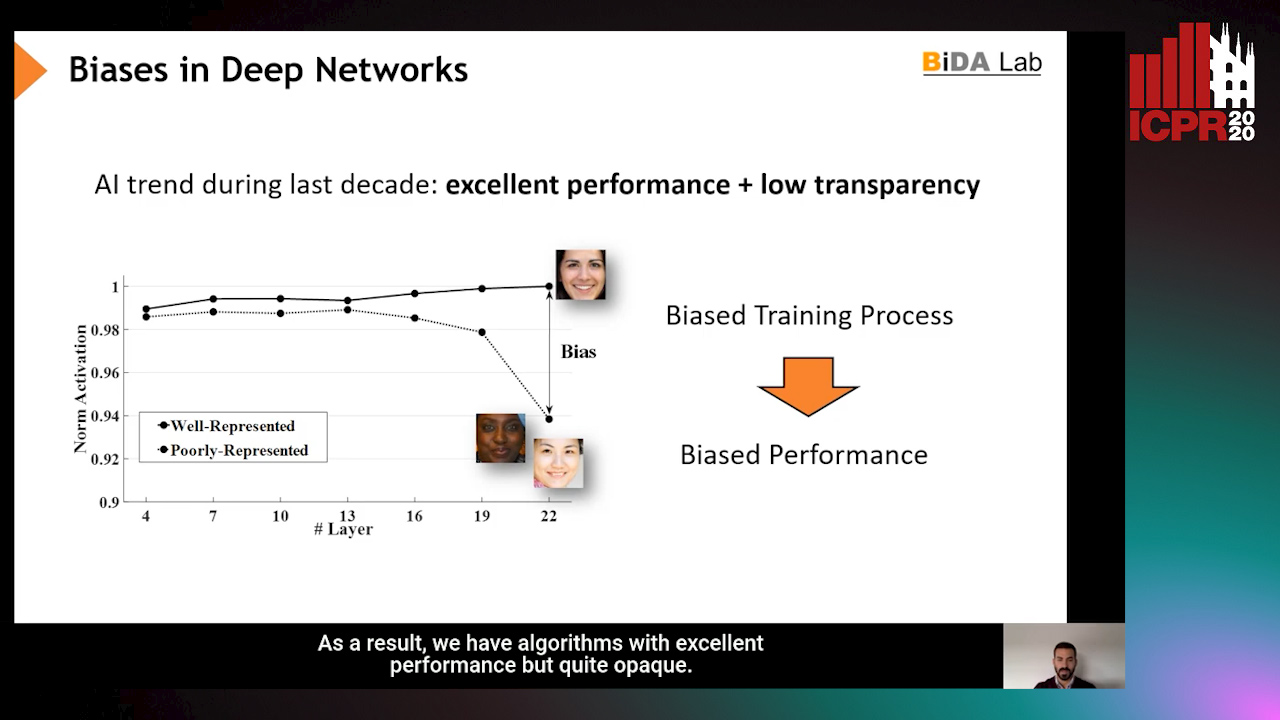

InsideBias: Measuring Bias in Deep Networks and Application to Face Gender Biometrics

Ignacio Serna, Alejandro Peña Almansa, Aythami Morales, Julian Fierrez

Auto-TLDR; InsideBias: Detecting Bias in Deep Neural Networks from Face Images

EEG-Based Cognitive State Assessment Using Deep Ensemble Model and Filter Bank Common Spatial Pattern

Debashis Das Chakladar, Shubhashis Dey, Partha Pratim Roy, Masakazu Iwamura

Auto-TLDR; A Deep Ensemble Model for Cognitive State Assessment using EEG-based Cognitive State Analysis

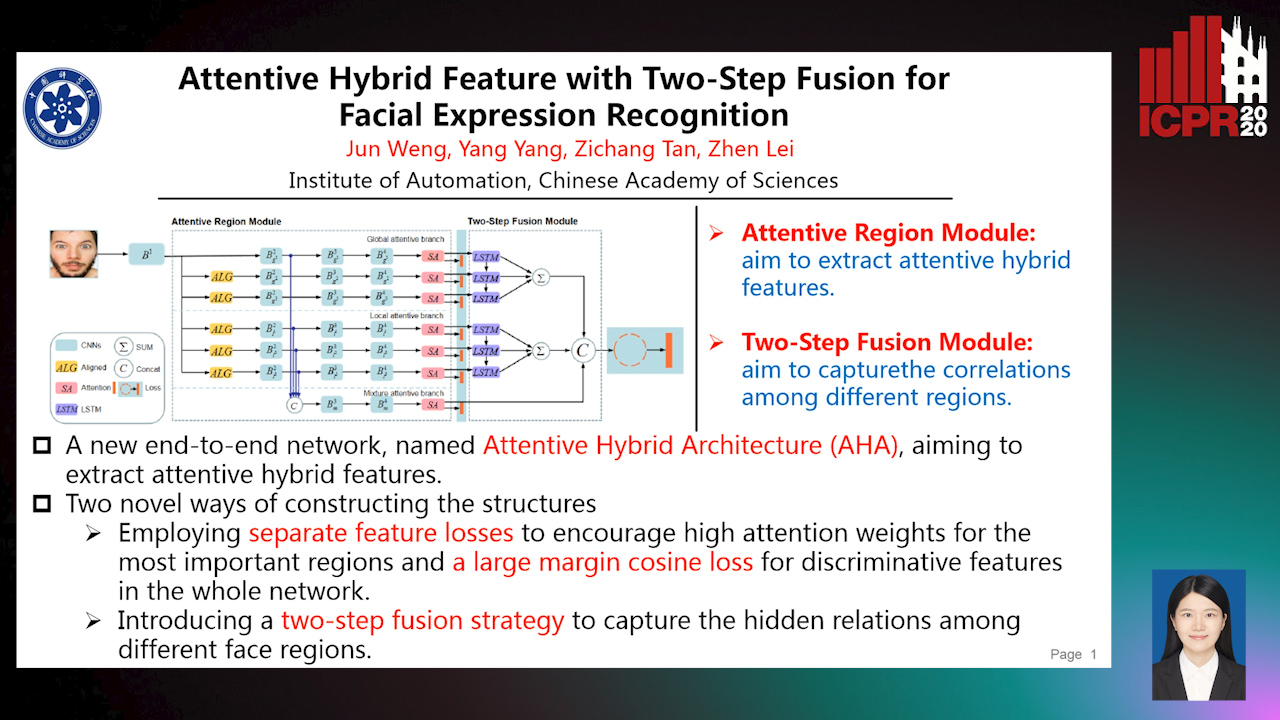

Attentive Hybrid Feature Based a Two-Step Fusion for Facial Expression Recognition

Jun Weng, Yang Yang, Zichang Tan, Zhen Lei

Auto-TLDR; Attentive Hybrid Architecture for Facial Expression Recognition

A Systematic Investigation on Deep Architectures for Automatic Skin Lesions Classification

Pierluigi Carcagni, Marco Leo, Andrea Cuna, Giuseppe Celeste, Cosimo Distante

Auto-TLDR; RegNet: Deep Investigation of Convolutional Neural Networks for Automatic Classification of Skin Lesions

Estimating Gaze Points from Facial Landmarks by a Remote Spherical Camera

Auto-TLDR; Gaze Point Estimation from a Spherical Image from Facial Landmarks

Facial Expression Recognition Using Residual Masking Network

Luan Pham, Vu Huynh, Tuan Anh Tran

Auto-TLDR; Deep Residual Masking for Automatic Facial Expression Recognition

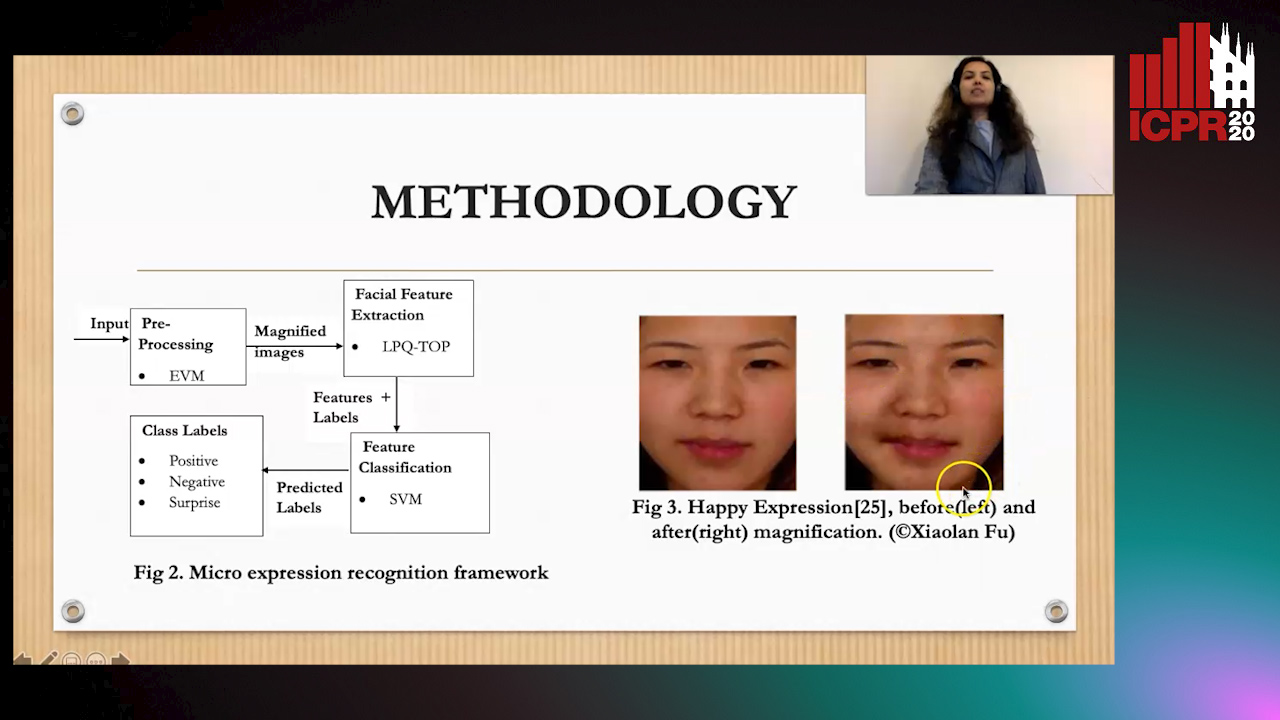

Magnifying Spontaneous Facial Micro Expressions for Improved Recognition

Pratikshya Sharma, Sonya Coleman, Pratheepan Yogarajah, Laurence Taggart, Pradeepa Samarasinghe

Auto-TLDR; Eulerian Video Magnification for Micro Expression Recognition

3D Facial Matching by Spiral Convolutional Metric Learning and a Biometric Fusion-Net of Demographic Properties

Soha Sadat Mahdi, Nele Nauwelaers, Philip Joris, Giorgos Bouritsas, Imperial London, Sergiy Bokhnyak, Susan Walsh, Mark Shriver, Michael Bronstein, Peter Claes

Auto-TLDR; Multi-biometric Fusion for Biometric Verification using 3D Facial Measures

Two-Level Attention-Based Fusion Learning for RGB-D Face Recognition

Hardik Uppal, Alireza Sepas-Moghaddam, Michael Greenspan, Ali Etemad

Auto-TLDR; Fused RGB-D Facial Recognition using Attention-Aware Feature Fusion

Question-Agnostic Attention for Visual Question Answering

Moshiur R Farazi, Salman Hameed Khan, Nick Barnes

Auto-TLDR; Question-Agnostic Attention for Visual Question Answering

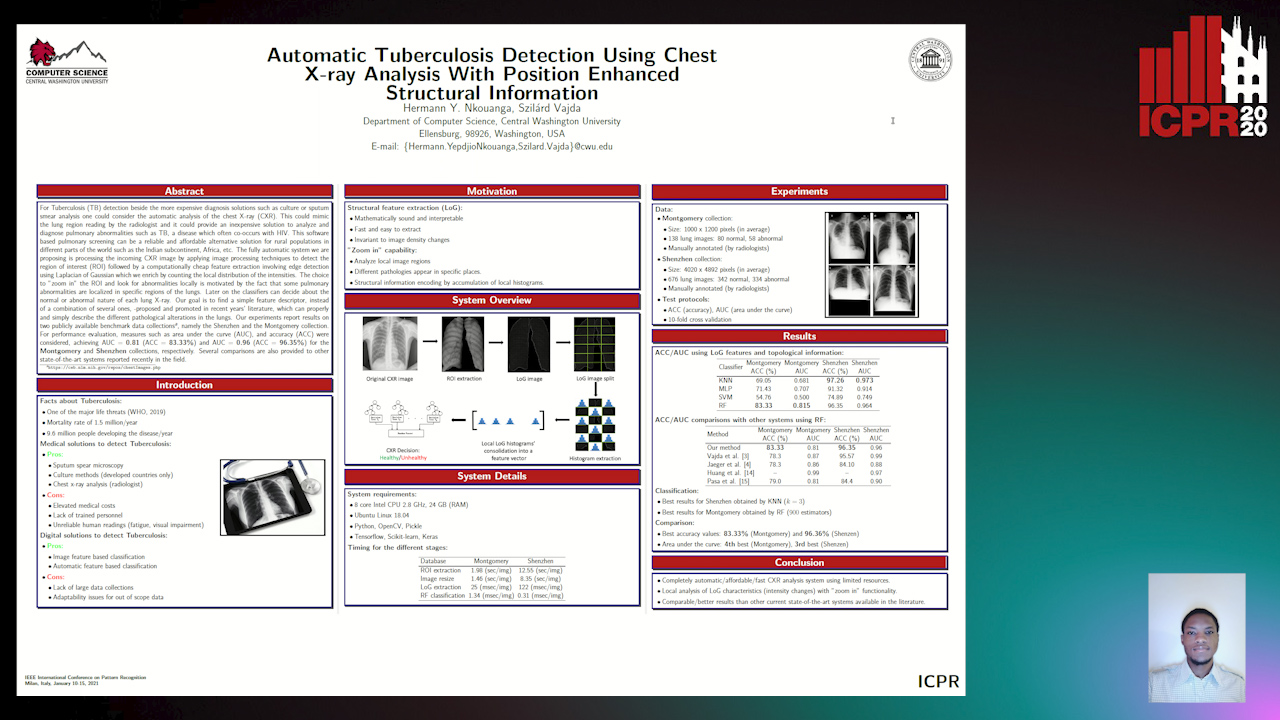

Automatic Tuberculosis Detection Using Chest X-Ray Analysis with Position Enhanced Structural Information

Hermann Jepdjio Nkouanga, Szilard Vajda

Auto-TLDR; Automatic Chest X-ray Screening for Tuberculosis in Rural Populations using Localized Regions of Interest

A Flatter Loss for Bias Mitigation in Cross-Dataset Facial Age Estimation

Ali Akbari, Muhammad Awais, Zhenhua Feng, Ammarah Farooq, Josef Kittler

Auto-TLDR; Cross-dataset Age Estimation for Neural Network Training

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos
