Fingerprints, Forever Young?

Roman Kessler,

Olaf Henniger,

Christoph Busch

Auto-TLDR; Mated Similarity Scores for Fingerprint Recognition: A Hierarchical Linear Model

Similar papers

How Unique Is a Face: An Investigative Study

Michal Balazia, S L Happy, Francois Bremond, Antitza Dantcheva

Auto-TLDR; Uniqueness of Face Recognition: Exploring the Impact of Factors such as image resolution, feature representation, database size, age and gender

Level Three Synthetic Fingerprint Generation

Andre Wyzykowski, Mauricio Pamplona Segundo, Rubisley Lemes

Auto-TLDR; Synthesis of High-Resolution Fingerprints with Pore Detection Using CycleGAN

Rotation Detection in Finger Vein Biometrics Using CNNs

Bernhard Prommegger, Georg Wimmer, Andreas Uhl

Auto-TLDR; A CNN based rotation detector for finger vein recognition

One-Shot Representational Learning for Joint Biometric and Device Authentication

Auto-TLDR; Joint Biometric and Device Recognition from a Single Biometric Image

Can You Really Trust the Sensor's PRNU? How Image Content Might Impact the Finger Vein Sensor Identification Performance

Dominik Söllinger, Luca Debiasi, Andreas Uhl

Auto-TLDR; Finger vein imagery can cause the PRNU estimate to be biased by image content

Are Spoofs from Latent Fingerprints a Real Threat for the Best State-Of-Art Liveness Detectors?

Roberto Casula, Giulia Orrù, Daniele Angioni, Xiaoyi Feng, Gian Luca Marcialis, Fabio Roli

Auto-TLDR; ScreenSpoof: Attacks using latent fingerprints against state-of-art fingerprint liveness detectors and verification systems

Finger Vein Recognition and Intra-Subject Similarity Evaluation of Finger Veins Using the CNN Triplet Loss

Georg Wimmer, Bernhard Prommegger, Andreas Uhl

Auto-TLDR; Finger vein recognition using CNNs and hard triplet online selection

Super-Resolution Guided Pore Detection for Fingerprint Recognition

Syeda Nyma Ferdous, Ali Dabouei, Jeremy Dawson, Nasser M. Nasarabadi

Auto-TLDR; Super-Resolution Generative Adversarial Network for Fingerprint Recognition Using Pore Features

Abstract Slides Poster Similar

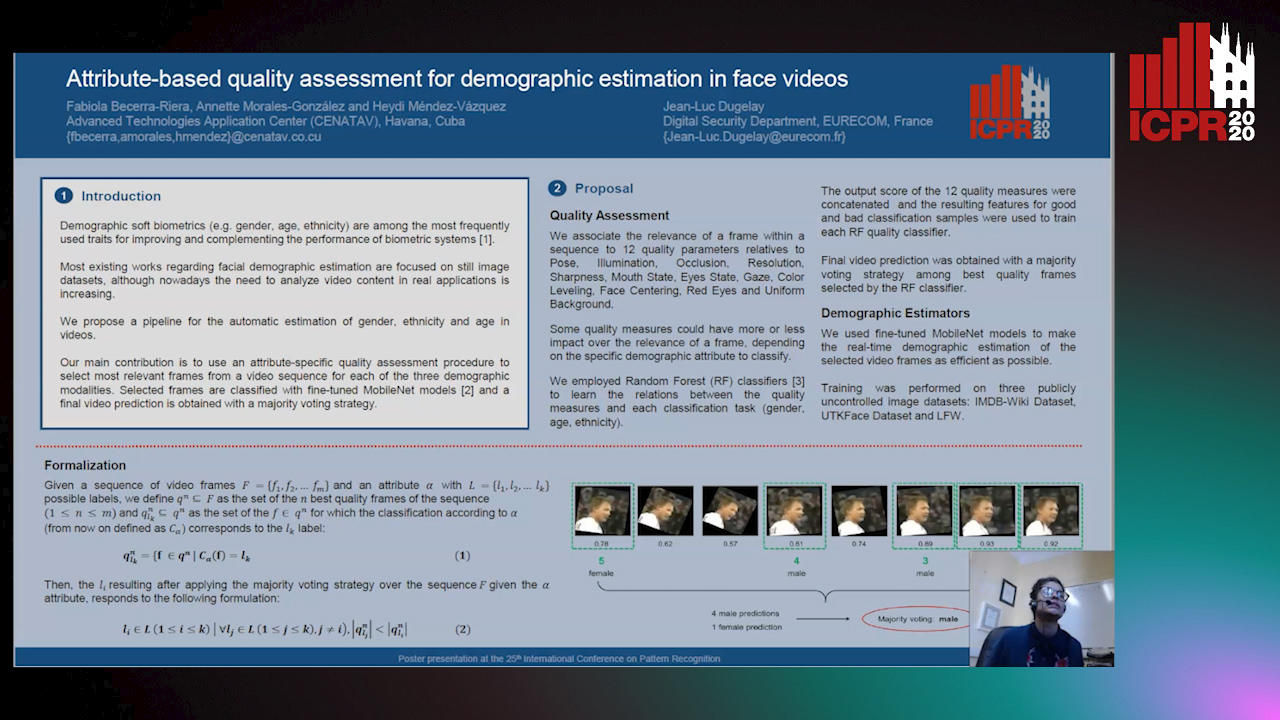

Attribute-Based Quality Assessment for Demographic Estimation in Face Videos

Fabiola Becerra-Riera, Annette Morales-González, Heydi Mendez-Vazquez, Jean-Luc Dugelay

Auto-TLDR; Facial Demographic Estimation in Video Scenarios Using Quality Assessment

Face Image Quality Assessment for Model and Human Perception

Ken Chen, Yichao Wu, Zhenmao Li, Yudong Wu, Ding Liang

Auto-TLDR; A labour-saving method for FIQA training with contradictory data from multiple sources

3D Facial Matching by Spiral Convolutional Metric Learning and a Biometric Fusion-Net of Demographic Properties

Soha Sadat Mahdi, Nele Nauwelaers, Philip Joris, Giorgos Bouritsas, Imperial London, Sergiy Bokhnyak, Susan Walsh, Mark Shriver, Michael Bronstein, Peter Claes

Auto-TLDR; Multi-biometric Fusion for Biometric Verification using 3D Facial Measures

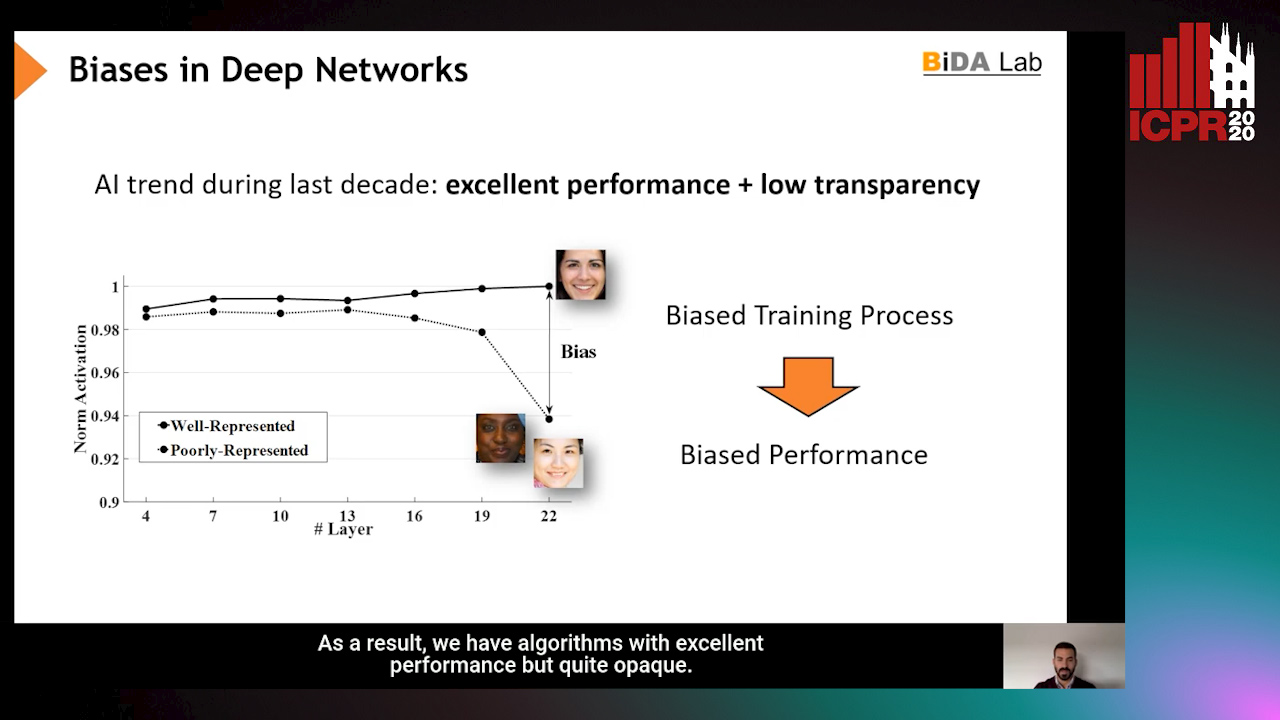

InsideBias: Measuring Bias in Deep Networks and Application to Face Gender Biometrics

Ignacio Serna, Alejandro Peña Almansa, Aythami Morales, Julian Fierrez

Auto-TLDR; InsideBias: Detecting Bias in Deep Neural Networks from Face Images

Seasonal Inhomogeneous Nonconsecutive Arrival Process Search and Evaluation

Kimberly Holmgren, Paul Gibby, Joseph Zipkin

Auto-TLDR; SINAPSE: Fitting a Sparse Time Series Model to Seasonal Data

Video Analytics Gait Trend Measurement for Fall Prevention and Health Monitoring

Lawrence O'Gorman, Xinyi Liu, Md Imran Sarker, Mariofanna Milanova

Auto-TLDR; Towards Health Monitoring of Gait with Deep Learning

Exploring Seismocardiogram Biometrics with Wavelet Transform

Po-Ya Hsu, Po-Han Hsu, Hsin-Li Liu

Auto-TLDR; Seismocardiogram Biometric Matching Using Wavelet Transform and Deep Learning Models

Detection of Makeup Presentation Attacks Based on Deep Face Representations

Christian Rathgeb, Pawel Drozdowski, Christoph Busch

Auto-TLDR; An Attack Detection Scheme for Face Recognition Using Makeup Presentation Attacks

SoftmaxOut Transformation-Permutation Network for Facial Template Protection

Hakyoung Lee, Cheng Yaw Low, Andrew Teoh

Auto-TLDR; SoftmaxOut Transformation-Permutation Network for Cancellable Biometrics

Relative Feature Importance

Gunnar König, Christoph Molnar, Bernd Bischl, Moritz Grosse-Wentrup

Auto-TLDR; Relative Feature Importance for Interpretable Machine Learning

Lookalike Disambiguation: Improving Face Identification Performance at Top Ranks

Auto-TLDR; Lookalike Face Identification Using a Disambiguator for Lookalike Images

Age Gap Reducer-GAN for Recognizing Age-Separated Faces

Daksha Yadav, Naman Kohli, Mayank Vatsa, Richa Singh, Afzel Noore

Auto-TLDR; Generative Adversarial Network for Age-separated Face Recognition

One Step Clustering Based on A-Contrario Framework for Detection of Alterations in Historical Violins

Alireza Rezaei, Sylvie Le Hégarat-Mascle, Emanuel Aldea, Piercarlo Dondi, Marco Malagodi

Auto-TLDR; A-Contrario Clustering for the Detection of Altered Violins using UVIFL Images

A Local Descriptor with Physiological Characteristic for Finger Vein Recognition

Liping Zhang, Weijun Li, Ning Xin

Auto-TLDR; Finger vein-specific local feature descriptors based on physiological characteristics of finger vein patterns

Identifying Missing Children: Face Age-Progression Via Deep Feature Aging

Debayan Deb, Divyansh Aggarwal, Anil Jain

Auto-TLDR; Aging Face Features for Missing Children Identification

Cancelable Biometrics Vault: A Secure Key-Binding Biometric Cryptosystem Based on Chaffing and Winnowing

Osama Ouda, Karthik Nandakumar, Arun Ross

Auto-TLDR; Cancelable Biometrics Vault for Key-binding Biometric Cryptosystem Framework

Feasibility Study of Using MyoBand for Learning Electronic Keyboard

Auto-TLDR; Autonomous Finger-Based Music Instrument Learning using Electromyography Using MyoBand and Machine Learning

On the Minimal Recognizable Image Patch

Mark Fonaryov, Michael Lindenbaum

Auto-TLDR; MIRC: A Deep Neural Network for Minimal Recognition on Partially Occluded Images

Using Machine Learning to Refer Patients with Chronic Kidney Disease to Secondary Care

Lee Au-Yeung, Xianghua Xie, Timothy Marcus Scale, James Anthony Chess

Auto-TLDR; A Machine Learning Approach for Chronic Kidney Disease Prediction using Blood Test Data

3D Dental Biometrics: Automatic Pose-Invariant Dental Arch Extraction and Matching

Auto-TLDR; Automatic Dental Arch Extraction and Matching for 3D Dental Identification using Laser-Scanned Plasters

3CS Algorithm for Efficient Gaussian Process Model Retrieval

Fabian Berns, Kjeld Schmidt, Ingolf Bracht, Christian Beecks

Auto-TLDR; Efficient retrieval of Gaussian Process Models for large-scale data using a divide-&-conquer-based approach

Computational Data Analysis for First Quantization Estimation on JPEG Double Compressed Images

Sebastiano Battiato, Oliver Giudice, Francesco Guarnera, Giovanni Puglisi

Auto-TLDR; Exploiting Discrete Cosine Transform Coefficients for Multimedia Forensics

DR2S: Deep Regression with Region Selection for Camera Quality Evaluation

Marcelin Tworski, Stéphane Lathuiliere, Salim Belkarfa, Attilio Fiandrotti, Marco Cagnazzo

Auto-TLDR; Texture Quality Estimation Using Deep Learning

A Low-Complexity R-Peak Detection Algorithm with Adaptive Thresholding for Wearable Devices

Tiago Rodrigues, Hugo Plácido Da Silva, Ana Luisa Nobre Fred, Sirisack Samoutphonh

Auto-TLDR; Real-Time and Low-Complexity R-peak Detection for Single Lead ECG Signals

A Flatter Loss for Bias Mitigation in Cross-Dataset Facial Age Estimation

Ali Akbari, Muhammad Awais, Zhenhua Feng, Ammarah Farooq, Josef Kittler

Auto-TLDR; Cross-dataset Age Estimation for Neural Network Training

Predicting Chemical Properties Using Self-Attention Multi-Task Learning Based on SMILES Representation

Auto-TLDR; Self-attention based Transformer-Variant Model for Chemical Compound Properties Prediction

Wireless Localisation in WiFi Using Novel Deep Architectures

Peizheng Li, Han Cui, Aftab Khan, Usman Raza, Robert Piechocki, Angela Doufexi, Tim Farnham

Auto-TLDR; Deep Neural Network for Indoor Localisation of WiFi Devices in Indoor Environments

XGBoost to Interpret the Opioid Patients’ State Based on Cognitive and Physiological Measures

Arash Shokouhmand, Omid Dehzangi, Jad Ramadan, Victor Finomore, Nasser M. Nasarabadi, Ali Rezai

Auto-TLDR; Predicting the Wellness of Opioid Addictions Using Multi-modal Sensor Data

Joint Learning Multiple Curvature Descriptor for 3D Palmprint Recognition

Lunke Fei, Bob Zhang, Jie Wen, Chunwei Tian, Peng Liu, Shuping Zhao

Auto-TLDR; Joint Feature Learning for 3D palmprint recognition using curvature data vectors

GazeMAE: General Representations of Eye Movements Using a Micro-Macro Autoencoder

Louise Gillian C. Bautista, Prospero Naval

Auto-TLDR; Fast and Slow Eye Movement Representations for Sentiment-agnostic Eye Tracking

Total Whitening for Online Signature Verification Based on Deep Representation

Xiaomeng Wu, Akisato Kimura, Kunio Kashino, Seiichi Uchida

Auto-TLDR; Total Whitening for Online Signature Verification

Uncertainty Guided Recognition of Tiny Craters on the Moon

Thorsten Wilhelm, Christian Wöhler

Auto-TLDR; Accurately Detecting Tiny Craters in Remote Sensed Images Using Deep Neural Networks

Recovery of 2D and 3D Layout Information through an Advanced Image Stitching Algorithm Using Scanning Electron Microscope Images

Aayush Singla, Bernhard Lippmann, Helmut Graeb

Auto-TLDR; Image Stitching for True Geometrical Layout Recovery in Nanoscale Dimension

Location Prediction in Real Homes of Older Adults based on K-Means in Low-Resolution Depth Videos

Simon Simonsson, Flávia Dias Casagrande, Evi Zouganeli

Auto-TLDR; Semi-supervised Learning for Location Recognition and Prediction in Smart Homes using Depth Video Cameras

Electroencephalography Signal Processing Based on Textural Features for Monitoring the Driver’s State by a Brain-Computer Interface

Giulia Orrù, Marco Micheletto, Fabio Terranova, Gian Luca Marcialis

Auto-TLDR; One-dimensional Local Binary Pattern Algorithm for Estimating Driver Vigilance in a Brain-Computer Interface System

Quantified Facial Temporal-Expressiveness Dynamics for Affect Analysis

Md Taufeeq Uddin, Shaun Canavan

Auto-TLDR; Quantified Facial Temporal-Expressiveness Dynamics for Quantified Affect Analysis

Deep Transformation Models: Tackling Complex Regression Problems with Neural Network Based Transformation Models

Beate Sick, Torsten Hothorn, Oliver Dürr

Auto-TLDR; A Deep Transformation Model for Probabilistic Regression

A Cross Domain Multi-Modal Dataset for Robust Face Anti-Spoofing

Qiaobin Ji, Shugong Xu, Xudong Chen, Shan Cao, Shunqing Zhang

Auto-TLDR; Cross domain multi-modal FAS dataset GREAT-FASD and several evaluation protocols for academic community

Approach for Document Detection by Contours and Contrasts

Daniil Tropin, Sergey Ilyuhin, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; A contour-based method for arbitrary document detection on a mobile device

Quality-Based Representation for Unconstrained Face Recognition

Nelson Méndez-Llanes, Katy Castillo-Rosado, Heydi Mendez-Vazquez, Massimo Tistarelli

Auto-TLDR; Activation map for face recognition in unconstrained environments