On the Minimal Recognizable Image Patch

Mark Fonaryov,

Michael Lindenbaum

Auto-TLDR; MIRC: A Deep Neural Network for Minimal Recognition on Partially Occluded Images

Similar papers

Verifying the Causes of Adversarial Examples

Honglin Li, Yifei Fan, Frieder Ganz, Tony Yezzi, Payam Barnaghi

Auto-TLDR; Exploring the Causes of Adversarial Examples in Neural Networks

Probability Guided Maxout

Claudio Ferrari, Stefano Berretti, Alberto Del Bimbo

Auto-TLDR; Probability Guided Maxout for CNN Training

Trainable Spectrally Initializable Matrix Transformations in Convolutional Neural Networks

Michele Alberti, Angela Botros, Schuetz Narayan, Rolf Ingold, Marcus Liwicki, Mathias Seuret

Auto-TLDR; Trainable and Spectrally Initializable Matrix Transformations for Neural Networks

Uncertainty-Sensitive Activity Recognition: A Reliability Benchmark and the CARING Models

Alina Roitberg, Monica Haurilet, Manuel Martinez, Rainer Stiefelhagen

Auto-TLDR; CARING: Calibrated Action Recognition with Input Guidance

How Does DCNN Make Decisions?

Yi Lin, Namin Wang, Xiaoqing Ma, Ziwei Li, Gang Bai

Auto-TLDR; Exploring Deep Convolutional Neural Network's Decision-Making Interpretability

A Close Look at Deep Learning with Small Data

Auto-TLDR; Low-Complex Neural Networks for Small Data Conditions

Contextual Classification Using Self-Supervised Auxiliary Models for Deep Neural Networks

Sebastian Palacio, Philipp Engler, Jörn Hees, Andreas Dengel

Auto-TLDR; Self-Supervised Autogenous Learning for Deep Neural Networks

Estimation of Abundance and Distribution of Salt Marsh Plants from Images Using Deep Learning

Jayant Parashar, Suchendra Bhandarkar, Jacob Simon, Brian Hopkinson, Steven Pennings

Auto-TLDR; CNN-based approaches to automated plant identification and localization in salt marsh images

Feature-Dependent Cross-Connections in Multi-Path Neural Networks

Dumindu Tissera, Kasun Vithanage, Rukshan Wijesinghe, Kumara Kahatapitiya, Subha Fernando, Ranga Rodrigo

Auto-TLDR; Multi-path Networks for Adaptive Feature Extraction

Hierarchical Classification with Confidence Using Generalized Logits

James W. Davis, Tong Liang, James Enouen, Roman Ilin

Auto-TLDR; Generalized Logits for Hierarchical Classification

Towards Robust Learning with Different Label Noise Distributions

Diego Ortego, Eric Arazo, Paul Albert, Noel E O'Connor, Kevin Mcguinness

Auto-TLDR; Distribution Robust Pseudo-Labeling with Semi-supervised Learning

Understanding When Spatial Transformer Networks Do Not Support Invariance, and What to Do about It

Lukas Finnveden, Ylva Jansson, Tony Lindeberg

Auto-TLDR; Spatial Transformer Networks are unable to support invariance when transforming CNN feature maps

A Versatile Crack Inspection Portable System Based on Classifier Ensemble and Controlled Illumination

Milind Gajanan Padalkar, Carlos Beltran-Gonzalez, Matteo Bustreo, Alessio Del Bue, Vittorio Murino

Auto-TLDR; Lighting Conditions for Crack Detection in Ceramic Tile

Generalization Comparison of Deep Neural Networks Via Output Sensitivity

Mahsa Forouzesh, Farnood Salehi, Patrick Thiran

Auto-TLDR; Generalization of Deep Neural Networks using Sensitivity

Confidence Calibration for Deep Renal Biopsy Immunofluorescence Image Classification

Federico Pollastri, Juan Maroñas, Federico Bolelli, Giulia Ligabue, Roberto Paredes, Riccardo Magistroni, Costantino Grana

Auto-TLDR; A Probabilistic Convolutional Neural Network for Immunofluorescence Classification in Renal Biopsy

A GAN-Based Blind Inpainting Method for Masonry Wall Images

Yahya Ibrahim, Balázs Nagy, Csaba Benedek

Auto-TLDR; An End-to-End Blind Inpainting Algorithm for Masonry Wall Images

Improving Model Accuracy for Imbalanced Image Classification Tasks by Adding a Final Batch Normalization Layer: An Empirical Study

Veysel Kocaman, Ofer M. Shir, Thomas Baeck

Auto-TLDR; Exploiting Batch Normalization before the Output Layer in Deep Learning for Minority Class Detection in Imbalanced Data Sets

Force Banner for the Recognition of Spatial Relations

Robin Deléarde, Camille Kurtz, Laurent Wendling, Philippe Dejean

Auto-TLDR; Spatial Relation Recognition using Force Banners

Exploring the Ability of CNNs to Generalise to Previously Unseen Scales Over Wide Scale Ranges

Auto-TLDR; A theoretical analysis of invariance and covariance properties of scale channel networks

Abstract Slides Poster Similar

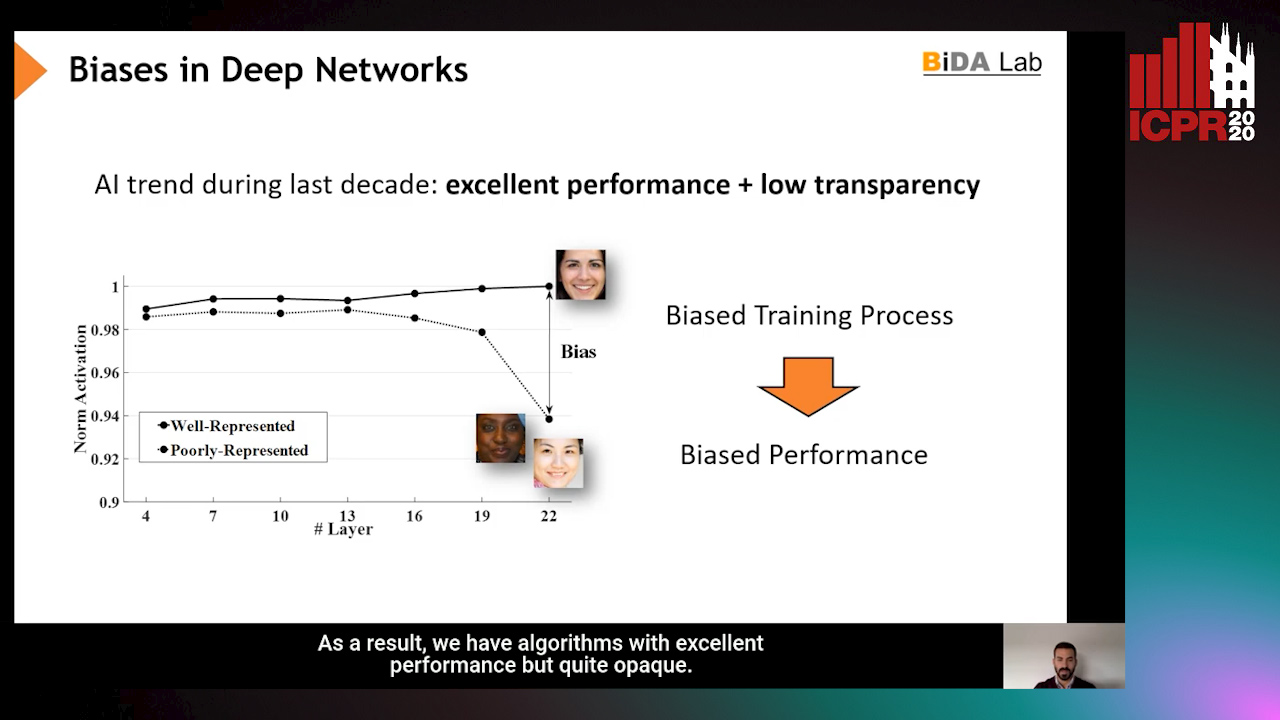

InsideBias: Measuring Bias in Deep Networks and Application to Face Gender Biometrics

Ignacio Serna, Alejandro Peña Almansa, Aythami Morales, Julian Fierrez

Auto-TLDR; InsideBias: Detecting Bias in Deep Neural Networks from Face Images

Dual-Attention Guided Dropblock Module for Weakly Supervised Object Localization

Junhui Yin, Siqing Zhang, Dongliang Chang, Zhanyu Ma, Jun Guo

Auto-TLDR; Dual-Attention Guided Dropblock for Weakly Supervised Object Localization

Convolutional STN for Weakly Supervised Object Localization

Akhil Meethal, Marco Pedersoli, Soufiane Belharbi, Eric Granger

Auto-TLDR; Spatial Localization for Weakly Supervised Object Localization

From Early Biological Models to CNNs: Do They Look Where Humans Look?

Marinella Iole Cadoni, Andrea Lagorio, Enrico Grosso, Jia Huei Tan, Chee Seng Chan

Auto-TLDR; Comparing Neural Networks to Human Fixations for Semantic Learning

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Transformer-Encoder Detector Module: Using Context to Improve Robustness to Adversarial Attacks on Object Detection

Faisal Alamri, Sinan Kalkan, Nicolas Pugeault

Auto-TLDR; Context Module for Robust Object Detection with Transformer-Encoder Detector Module

Multi-Scale Keypoint Matching

Auto-TLDR; Multi-Scale Keypoint Matching Using Multi-Scale Information

Quasibinary Classifier for Images with Zero and Multiple Labels

Liao Shuai, Efstratios Gavves, Changyong Oh, Cees Snoek

Auto-TLDR; Quasibinary Classifiers for Zero-label and Multi-label Classification

Can Data Placement Be Effective for Neural Networks Classification Tasks? Introducing the Orthogonal Loss

Brais Cancela, Veronica Bolon-Canedo, Amparo Alonso-Betanzos

Auto-TLDR; Spatial Placement for Neural Network Training Loss Functions

DR2S: Deep Regression with Region Selection for Camera Quality Evaluation

Marcelin Tworski, Stéphane Lathuiliere, Salim Belkarfa, Attilio Fiandrotti, Marco Cagnazzo

Auto-TLDR; Texture Quality Estimation Using Deep Learning

Making Every Label Count: Handling Semantic Imprecision by Integrating Domain Knowledge

Clemens-Alexander Brust, Björn Barz, Joachim Denzler

Auto-TLDR; Class Hierarchies for Imprecise Label Learning and Annotation eXtrapolation

Locality-Promoting Representation Learning

Auto-TLDR; Locality-promoting Regularization for Neural Networks

Self-Supervised Learning for Astronomical Image Classification

Ana Martinazzo, Mateus Espadoto, Nina S. T. Hirata

Auto-TLDR; Unlabeled Astronomical Images for Deep Neural Network Pre-training

A Fine-Grained Dataset and Its Efficient Semantic Segmentation for Unstructured Driving Scenarios

Kai Andreas Metzger, Peter Mortimer, Hans J "Joe" Wuensche

Auto-TLDR; TAS500: A Semantic Segmentation Dataset for Autonomous Driving in Unstructured Environments

Color, Edge, and Pixel-Wise Explanation of Predictions Based on Interpretable Neural Network Model

Auto-TLDR; Explainable Deep Neural Network with Edge Detecting Filters

Accuracy-Perturbation Curves for Evaluation of Adversarial Attack and Defence Methods

Auto-TLDR; Accuracy-perturbation Curve for Robustness Evaluation of Adversarial Examples

Bridging the Gap between Natural and Medical Images through Deep Colorization

Lia Morra, Luca Piano, Fabrizio Lamberti, Tatiana Tommasi

Auto-TLDR; Transfer Learning for Diagnosis on X-ray Images Using Color Adaptation

Optimal Transport As a Defense against Adversarial Attacks

Quentin Bouniot, Romaric Audigier, Angélique Loesch

Auto-TLDR; Sinkhorn Adversarial Training with Optimal Transport Theory

Tackling Occlusion in Siamese Tracking with Structured Dropouts

Deepak Gupta, Efstratios Gavves, Arnold Smeulders

Auto-TLDR; Structured Dropout for Occlusion in latent space

Iterative Label Improvement: Robust Training by Confidence Based Filtering and Dataset Partitioning

Christian Haase-Schütz, Rainer Stal, Heinz Hertlein, Bernhard Sick

Auto-TLDR; Meta Training and Labelling for Unlabelled Data

Operation and Topology Aware Fast Differentiable Architecture Search

Shahid Siddiqui, Christos Kyrkou, Theocharis Theocharides

Auto-TLDR; EDARTS: Efficient Differentiable Architecture Search with Efficient Optimization

Learning with Delayed Feedback

Pranavan Theivendiram, Terence Sim

Auto-TLDR; Unsupervised Machine Learning with Delayed Feedback

Video Anomaly Detection by Estimating Likelihood of Representations

Auto-TLDR; Video Anomaly Detection in the latent feature space using a deep probabilistic model

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

Enhancing Semantic Segmentation of Aerial Images with Inhibitory Neurons

Ihsan Ullah, Sean Reilly, Michael Madden

Auto-TLDR; Lateral Inhibition in Deep Neural Networks for Object Recognition and Semantic Segmentation

Explainable Feature Embedding Using Convolutional Neural Networks for Pathological Image Analysis

Kazuki Uehara, Masahiro Murakawa, Hirokazu Nosato, Hidenori Sakanashi

Auto-TLDR; Explainable Diagnosis Using Convolutional Neural Networks for Pathological Image Analysis

MaxDropout: Deep Neural Network Regularization Based on Maximum Output Values

Claudio Filipi Gonçalves Santos, Danilo Colombo, Mateus Roder, Joao Paulo Papa

Auto-TLDR; MaxDropout: A Regularizer for Deep Neural Networks

Augmented Bi-Path Network for Few-Shot Learning

Baoming Yan, Chen Zhou, Bo Zhao, Kan Guo, Yang Jiang, Xiaobo Li, Zhang Ming, Yizhou Wang

Auto-TLDR; Augmented Bi-path Network for Few-shot Learning

Multi-Attribute Learning with Highly Imbalanced Data

Lady Viviana Beltran Beltran, Mickaël Coustaty, Nicholas Journet, Juan C. Caicedo, Antoine Doucet

Auto-TLDR; Data Imbalance in Multi-Attribute Deep Learning Models: Adaptation to face each one of the problems derived from imbalance
