Transformer-Encoder Detector Module: Using Context to Improve Robustness to Adversarial Attacks on Object Detection

Faisal Alamri,

Sinan Kalkan,

Nicolas Pugeault

Auto-TLDR; Context Module for Robust Object Detection with Transformer-Encoder Detector Module

Similar papers

Detecting Objects with High Object Region Percentage

Fen Fang, Qianli Xu, Liyuan Li, Ying Gu, Joo-Hwee Lim

Auto-TLDR; Faster R-CNN for High-ORP Object Detection

Tilting at Windmills: Data Augmentation for Deep Pose Estimation Does Not Help with Occlusions

Rafal Pytel, Osman Semih Kayhan, Jan Van Gemert

Auto-TLDR; Targeted Keypoint and Body Part Occlusion Attacks for Human Pose Estimation

CCA: Exploring the Possibility of Contextual Camouflage Attack on Object Detection

Shengnan Hu, Yang Zhang, Sumit Laha, Ankit Sharma, Hassan Foroosh

Auto-TLDR; Contextual camouflage attack for object detection

Adaptive Word Embedding Module for Semantic Reasoning in Large-Scale Detection

Yu Zhang, Xiaoyu Wu, Ruolin Zhu

Auto-TLDR; Adaptive Word Embedding Module for Object Detection

AdvHat: Real-World Adversarial Attack on ArcFace Face ID System

Stepan Komkov, Aleksandr Petiushko

Auto-TLDR; Adversarial Sticker Attack on ArcFace in Shooting Conditions

MAGNet: Multi-Region Attention-Assisted Grounding of Natural Language Queries at Phrase Level

Amar Shrestha, Krittaphat Pugdeethosapol, Haowen Fang, Qinru Qiu

Auto-TLDR; MAGNet: A Multi-Region Attention-Aware Grounding Network for Free-form Textual Queries

Object Detection Using Dual Graph Network

Shengjia Chen, Zhixin Li, Feicheng Huang, Canlong Zhang, Huifang Ma

Auto-TLDR; A Graph Convolutional Network for Object Detection with Key Relation Information

Defense Mechanism against Adversarial Attacks Using Density-Based Representation of Images

Yen-Ting Huang, Wen-Hung Liao, Chen-Wei Huang

Auto-TLDR; Adversarial Attacks Reduction Using Input Recharacterization

Context for Object Detection Via Lightweight Global and Mid-Level Representations

Mesut Erhan Unal, Adriana Kovashka

Auto-TLDR; Context-Based Object Detection with Semantic Similarity

VTT: Long-Term Visual Tracking with Transformers

Tianling Bian, Yang Hua, Tao Song, Zhengui Xue, Ruhui Ma, Neil Robertson, Haibing Guan

Auto-TLDR; Visual Tracking Transformer for Long-Term Visual Tracking

Multi-Modal Contextual Graph Neural Network for Text Visual Question Answering

Yaoyuan Liang, Xin Wang, Xuguang Duan, Wenwu Zhu

Auto-TLDR; Multi-modal Contextual Graph Neural Network for Text Visual Question Answering

Attack-Agnostic Adversarial Detection on Medical Data Using Explainable Machine Learning

Matthew Watson, Noura Al Moubayed

Auto-TLDR; Explainability-based Detection of Adversarial Samples on EHR and Chest X-Ray Data

Transformer Reasoning Network for Image-Text Matching and Retrieval

Nicola Messina, Fabrizio Falchi, Andrea Esuli, Giuseppe Amato

Auto-TLDR; A Transformer Encoder Reasoning Network for Image-Text Matching in Large-Scale Information Retrieval

Detective: An Attentive Recurrent Model for Sparse Object Detection

Amine Kechaou, Manuel Martinez, Monica Haurilet, Rainer Stiefelhagen

Auto-TLDR; Detective: An attentive object detector that identifies objects in images in a sequential manner

Object Detection in the DCT Domain: Is Luminance the Solution?

Benjamin Deguerre, Clement Chatelain, Gilles Gasso

Auto-TLDR; Jpeg Deep: Object Detection Using Compressed JPEG Images

Iterative Bounding Box Annotation for Object Detection

Bishwo Adhikari, Heikki Juhani Huttunen

Auto-TLDR; Semi-Automatic Bounding Box Annotation for Object Detection in Digital Images

The DeepScoresV2 Dataset and Benchmark for Music Object Detection

Lukas Tuggener, Yvan Putra Satyawan, Alexander Pacha, Jürgen Schmidhuber, Thilo Stadelmann

Auto-TLDR; DeepScoresV2: an extended version of the DeepScores dataset for optical music recognition

Construction Worker Hardhat-Wearing Detection Based on an Improved BiFPN

Chenyang Zhang, Zhiqiang Tian, Jingyi Song, Yaoyue Zheng, Bo Xu

Auto-TLDR; A One-Stage Object Detection Method for Hardhat-Wearing in Construction Site

SyNet: An Ensemble Network for Object Detection in UAV Images

Auto-TLDR; SyNet: Combining Multi-Stage and Single-Stage Object Detection for Aerial Images

Transferable Adversarial Attacks for Deep Scene Text Detection

Shudeng Wu, Tao Dai, Guanghao Meng, Bin Chen, Jian Lu, Shutao Xia

Auto-TLDR; Robustness of DNN-based STD methods against Adversarial Attacks

An Integrated Approach of Deep Learning and Symbolic Analysis for Digital PDF Table Extraction

Mengshi Zhang, Daniel Perelman, Vu Le, Sumit Gulwani

Auto-TLDR; Deep Learning and Symbolic Reasoning for Unstructured PDF Table Extraction

Beyond Cross-Entropy: Learning Highly Separable Feature Distributions for Robust and Accurate Classification

Arslan Ali, Andrea Migliorati, Tiziano Bianchi, Enrico Magli

Auto-TLDR; Gaussian class-conditional simplex loss for adversarial robust multiclass classifiers

Visual Style Extraction from Chart Images for Chart Restyling

Danqing Huang, Jinpeng Wang, Guoxin Wang, Chin-Yew Lin

Auto-TLDR; Exploiting Visual Properties from Reference Chart Images for Chart Restyling

Small Object Detection by Generative and Discriminative Learning

Yi Gu, Jie Li, Chentao Wu, Weijia Jia, Jianping Chen

Auto-TLDR; Generative and Discriminative Learning for Small Object Detection

Cross-View Relation Networks for Mammogram Mass Detection

Ma Jiechao, Xiang Li, Hongwei Li, Ruixuan Wang, Bjoern Menze, Wei-Shi Zheng

Auto-TLDR; Multi-view Modeling for Mass Detection in Mammogram

Vision-Based Layout Detection from Scientific Literature Using Recurrent Convolutional Neural Networks

Auto-TLDR; Transfer Learning for Scientific Literature Layout Detection Using Convolutional Neural Networks

Adversarial Training for Aspect-Based Sentiment Analysis with BERT

Akbar Karimi, Andrea Prati, Leonardo Rossi

Auto-TLDR; Adversarial Training of BERT for Aspect-Based Sentiment Analysis

Context Matters: Self-Attention for Sign Language Recognition

Fares Ben Slimane, Mohamed Bouguessa

Auto-TLDR; Attentional Network for Continuous Sign Language Recognition

Question-Agnostic Attention for Visual Question Answering

Moshiur R Farazi, Salman Hameed Khan, Nick Barnes

Auto-TLDR; Question-Agnostic Attention for Visual Question Answering

CDeC-Net: Composite Deformable Cascade Network for Table Detection in Document Images

Madhav Agarwal, Ajoy Mondal, C. V. Jawahar

Auto-TLDR; CDeC-Net: An End-to-End Trainable Deep Network for Detecting Tables in Document Images

Object Detection on Monocular Images with Two-Dimensional Canonical Correlation Analysis

Auto-TLDR; Multi-Task Object Detection from Monocular Images Using Multimodal RGB and Depth Data

Multi-Stage Attention Based Visual Question Answering

Aakansha Mishra, Ashish Anand, Prithwijit Guha

Auto-TLDR; Alternative Bi-directional Attention for Visual Question Answering

PIN: A Novel Parallel Interactive Network for Spoken Language Understanding

Peilin Zhou, Zhiqi Huang, Fenglin Liu, Yuexian Zou

Auto-TLDR; Parallel Interactive Network for Spoken Language Understanding

Context Aware Group Activity Recognition

Avijit Dasgupta, C. V. Jawahar, Karteek Alahari

Auto-TLDR; A Two-Stream Architecture for Group Activity Recognition in Multi-Person Videos

Cost-Effective Adversarial Attacks against Scene Text Recognition

Mingkun Yang, Haitian Zheng, Xiang Bai, Jiebo Luo

Auto-TLDR; Adversarial Attacks on Scene Text Recognition

Tiny Object Detection in Aerial Images

Jinwang Wang, Wen Yang, Haowen Guo, Ruixiang Zhang, Gui-Song Xia

Auto-TLDR; Tiny Object Detection in Aerial Images Using Multiple Center Points Based Learning Network

Image-Based Table Cell Detection: A New Dataset and an Improved Detection Method

Dafeng Wei, Hongtao Lu, Yi Zhou, Kai Chen

Auto-TLDR; TableCell: A Semi-supervised Dataset for Table-wise Detection and Recognition

StrongPose: Bottom-up and Strong Keypoint Heat Map Based Pose Estimation

Auto-TLDR; StrongPose: A bottom-up box-free approach for human pose estimation and action recognition

Utilising Visual Attention Cues for Vehicle Detection and Tracking

Feiyan Hu, Venkatesh Gurram Munirathnam, Noel E O'Connor, Alan Smeaton, Suzanne Little

Auto-TLDR; Visual Attention for Object Detection and Tracking in Driver-Assistance Systems

Polynomial Universal Adversarial Perturbations for Person Re-Identification

Wenjie Ding, Xing Wei, Rongrong Ji, Xiaopeng Hong, Yihong Gong

Auto-TLDR; Polynomial Universal Adversarial Perturbation for Re-identification Methods

Mutual-Supervised Feature Modulation Network for Occluded Pedestrian Detection

Auto-TLDR; A Mutual-Supervised Feature Modulation Network for Occluded Pedestrian Detection

Bidirectional Matrix Feature Pyramid Network for Object Detection

Auto-TLDR; BMFPN: Bidirectional Matrix Feature Pyramid Network for Object Detection

A Novel Attention-Based Aggregation Function to Combine Vision and Language

Matteo Stefanini, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Fully-Attentive Reduction for Vision and Language

Object Detection Model Based on Scene-Level Region Proposal Self-Attention

Yu Quan, Zhixin Li, Canlong Zhang, Huifang Ma

Auto-TLDR; Exploiting Semantic Information for Object Detection

Using Scene Graphs for Detecting Visual Relationships

Anurag Tripathi, Siddharth Srivastava, Brejesh Lall, Santanu Chaudhury

Auto-TLDR; Relationship Detection using Context Aligned Scene Graph Embeddings

Forground-Guided Vehicle Perception Framework

Kun Tian, Tong Zhou, Shiming Xiang, Chunhong Pan

Auto-TLDR; A foreground segmentation branch for vehicle detection

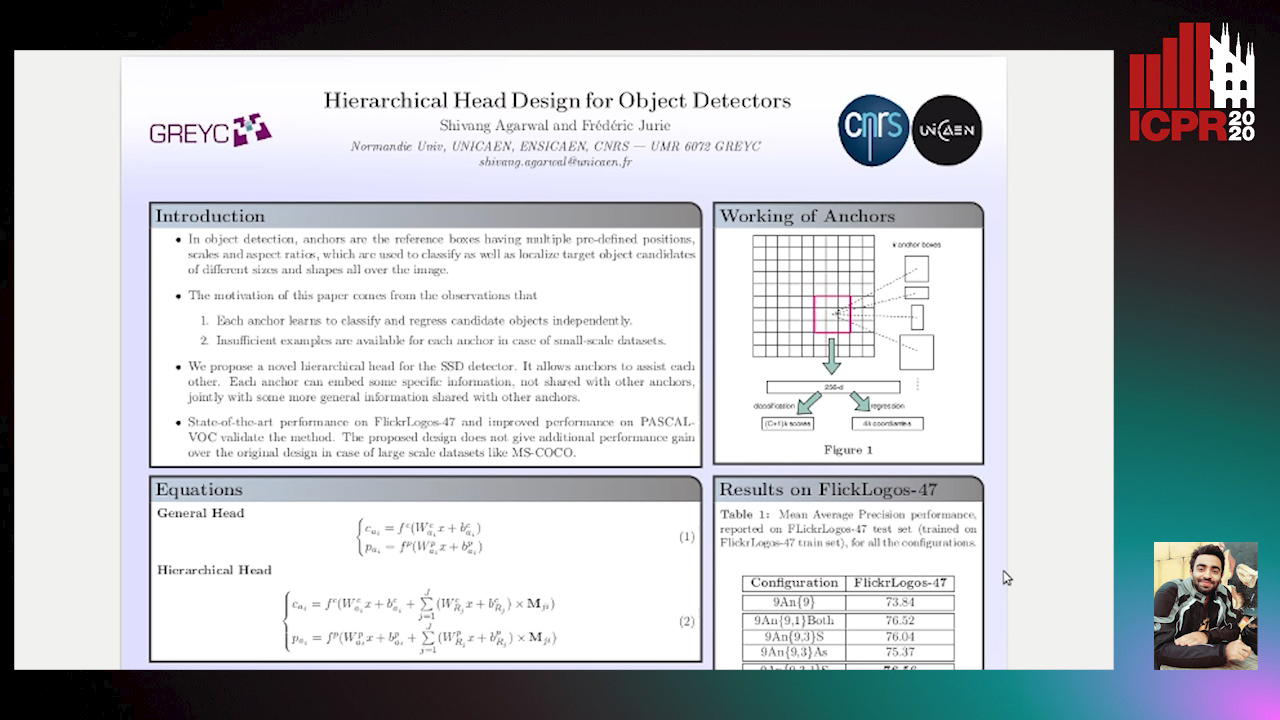

Hierarchical Head Design for Object Detectors

Shivang Agarwal, Frederic Jurie

Auto-TLDR; Hierarchical Anchor for SSD Detector

Real Time Fencing Move Classification and Detection at Touch Time During a Fencing Match

Cem Ekin Sunal, Chris G. Willcocks, Boguslaw Obara

Auto-TLDR; Fencing Body Move Classification and Detection Using Deep Learning