AdvHat: Real-World Adversarial Attack on ArcFace Face ID System

Stepan Komkov, Aleksandr Petiushko

Auto-TLDR; Adversarial Sticker Attack on ArcFace in Shooting Conditions

Similar papers

Beyond Cross-Entropy: Learning Highly Separable Feature Distributions for Robust and Accurate Classification

Arslan Ali, Andrea Migliorati, Tiziano Bianchi, Enrico Magli

Auto-TLDR; Gaussian class-conditional simplex loss for adversarial robust multiclass classifiers

CCA: Exploring the Possibility of Contextual Camouflage Attack on Object Detection

Shengnan Hu, Yang Zhang, Sumit Laha, Ankit Sharma, Hassan Foroosh

Auto-TLDR; Contextual camouflage attack for object detection

Defense Mechanism against Adversarial Attacks Using Density-Based Representation of Images

Yen-Ting Huang, Wen-Hung Liao, Chen-Wei Huang

Auto-TLDR; Adversarial Attacks Reduction Using Input Recharacterization

Variational Inference with Latent Space Quantization for Adversarial Resilience

Vinay Kyatham, Deepak Mishra, Prathosh A.P.

Auto-TLDR; A Generalized Defense Mechanism for Adversarial Attacks on Data Manifolds

A Delayed Elastic-Net Approach for Performing Adversarial Attacks

Brais Cancela, Veronica Bolon-Canedo, Amparo Alonso-Betanzos

Auto-TLDR; Robustness of ImageNet Pretrained Models against Adversarial Attacks

Adversarially Training for Audio Classifiers

Raymel Alfonso Sallo, Mohammad Esmaeilpour, Patrick Cardinal

Auto-TLDR; Adversarially Training for Robust Neural Networks against Adversarial Attacks

Transferable Adversarial Attacks for Deep Scene Text Detection

Shudeng Wu, Tao Dai, Guanghao Meng, Bin Chen, Jian Lu, Shutao Xia

Auto-TLDR; Robustness of DNN-based STD methods against Adversarial Attacks

Accuracy-Perturbation Curves for Evaluation of Adversarial Attack and Defence Methods

Auto-TLDR; Accuracy-perturbation Curve for Robustness Evaluation of Adversarial Examples

Attack Agnostic Adversarial Defense via Visual Imperceptible Bound

Saheb Chhabra, Akshay Agarwal, Richa Singh, Mayank Vatsa

Auto-TLDR; Robust Adversarial Defense with Visual Imperceptible Bound

Cost-Effective Adversarial Attacks against Scene Text Recognition

Mingkun Yang, Haitian Zheng, Xiang Bai, Jiebo Luo

Auto-TLDR; Adversarial Attacks on Scene Text Recognition

Adaptive Noise Injection for Training Stochastic Student Networks from Deterministic Teachers

Yi Xiang Marcus Tan, Yuval Elovici, Alexander Binder

Auto-TLDR; Adaptive Stochastic Networks for Adversarial Attacks

DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Gaoang Wang, Chen Lin, Tianqiang Liu, Mingwei He, Jiebo Luo

Auto-TLDR; DAIL: Dataset-Aware and Invariant Learning for Face Recognition

F-Mixup: Attack CNNs from Fourier Perspective

Xiu-Chuan Li, Xu-Yao Zhang, Fei Yin, Cheng-Lin Liu

Auto-TLDR; F-Mixup: A novel black-box attack in frequency domain for deep neural networks

Optimal Transport As a Defense against Adversarial Attacks

Quentin Bouniot, Romaric Audigier, Angélique Loesch

Auto-TLDR; Sinkhorn Adversarial Training with Optimal Transport Theory

Polynomial Universal Adversarial Perturbations for Person Re-Identification

Wenjie Ding, Xing Wei, Rongrong Ji, Xiaopeng Hong, Yihong Gong

Auto-TLDR; Polynomial Universal Adversarial Perturbation for Re-identification Methods

On the Robustness of 3D Human Pose Estimation

Zerui Chen, Yan Huang, Liang Wang

Auto-TLDR; Robustness of 3D Human Pose Estimation Methods to Adversarial Attacks

Transformer-Encoder Detector Module: Using Context to Improve Robustness to Adversarial Attacks on Object Detection

Faisal Alamri, Sinan Kalkan, Nicolas Pugeault

Auto-TLDR; Context Module for Robust Object Detection with Transformer-Encoder Detector Module

Verifying the Causes of Adversarial Examples

Honglin Li, Yifei Fan, Frieder Ganz, Tony Yezzi, Payam Barnaghi

Auto-TLDR; Exploring the Causes of Adversarial Examples in Neural Networks

Angular Sparsemax for Face Recognition

Auto-TLDR; Angular Sparsemax for Face Recognition

Face Anti-Spoofing Using Spatial Pyramid Pooling

Lei Shi, Zhuo Zhou, Zhenhua Guo

Auto-TLDR; Spatial Pyramid Pooling for Face Anti-Spoofing

SATGAN: Augmenting Age Biased Dataset for Cross-Age Face Recognition

Wenshuang Liu, Wenting Chen, Yuanlue Zhu, Linlin Shen

Auto-TLDR; SATGAN: Stable Age Translation GAN for Cross-Age Face Recognition

Task-based Focal Loss for Adversarially Robust Meta-Learning

Yufan Hou, Lixin Zou, Weidong Liu

Auto-TLDR; Task-based Adversarial Focal Loss for Few-shot Meta-Learner

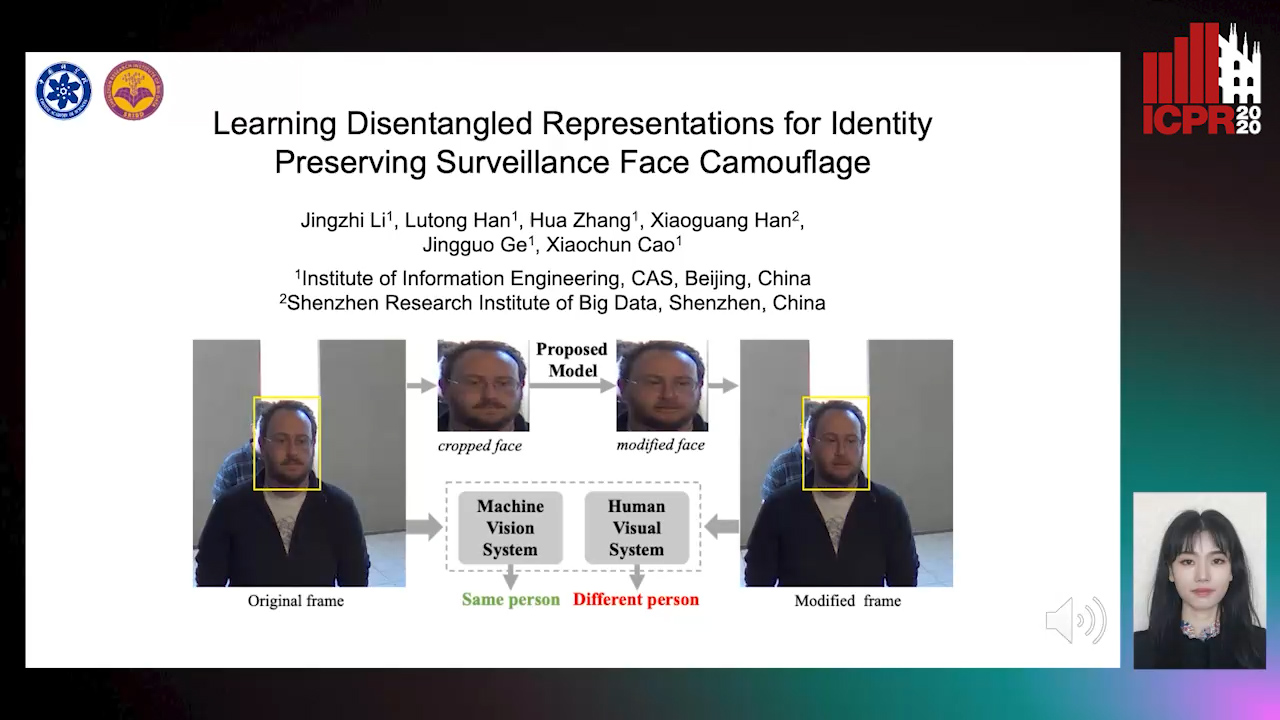

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

Explain2Attack: Text Adversarial Attacks via Cross-Domain Interpretability

Mahmoud Hossam, Le Trung, He Zhao, Dinh Phung

Auto-TLDR; Transfer2Attack: A Black-box Adversarial Attack on Text Classification

Attentive Part-Aware Networks for Partial Person Re-Identification

Lijuan Huo, Chunfeng Song, Zhengyi Liu, Zhaoxiang Zhang

Auto-TLDR; Part-Aware Learning for Partial Person Re-identification

Detection of Makeup Presentation Attacks Based on Deep Face Representations

Christian Rathgeb, Pawel Drozdowski, Christoph Busch

Auto-TLDR; An Attack Detection Scheme for Face Recognition Using Makeup Presentation Attacks

Cam-Softmax for Discriminative Deep Feature Learning

Tamas Suveges, Stephen James Mckenna

Auto-TLDR; Cam-Softmax: A Generalisation of Activations and Softmax for Deep Feature Spaces

Building Computationally Efficient and Well-Generalizing Person Re-Identification Models with Metric Learning

Vladislav Sovrasov, Dmitry Sidnev

Auto-TLDR; Cross-Domain Generalization in Person Re-identification using Omni-Scale Network

Generating Private Data Surrogates for Vision Related Tasks

Ryan Webster, Julien Rabin, Loic Simon, Frederic Jurie

Auto-TLDR; Generative Adversarial Networks for Membership Inference Attacks

Killing Four Birds with One Gaussian Process: The Relation between Different Test-Time Attacks

Kathrin Grosse, Michael Thomas Smith, Michael Backes

Auto-TLDR; Security of Gaussian Process Classifiers against Attack Algorithms

An Unsupervised Approach towards Varying Human Skin Tone Using Generative Adversarial Networks

Debapriya Roy, Diganta Mukherjee, Bhabatosh Chanda

Auto-TLDR; Unsupervised Skin Tone Change Using Augmented Reality Based Models

Lightweight Low-Resolution Face Recognition for Surveillance Applications

Yoanna Martínez-Díaz, Heydi Mendez-Vazquez, Luis S. Luevano, Leonardo Chang, Miguel Gonzalez-Mendoza

Auto-TLDR; Efficiency of Lightweight Deep Face Networks on Low-Resolution Surveillance Imagery

SoftmaxOut Transformation-Permutation Network for Facial Template Protection

Hakyoung Lee, Cheng Yaw Low, Andrew Teoh

Auto-TLDR; SoftmaxOut Transformation-Permutation Network for Cancellable Biometrics

Contrastive Data Learning for Facial Pose and Illumination Normalization

Auto-TLDR; Pose and Illumination Normalization with Contrast Data Learning for Face Recognition

A Cross Domain Multi-Modal Dataset for Robust Face Anti-Spoofing

Qiaobin Ji, Shugong Xu, Xudong Chen, Shan Cao, Shunqing Zhang

Auto-TLDR; Cross domain multi-modal FAS dataset GREAT-FASD and several evaluation protocols for academic community

Unsupervised Disentangling of Viewpoint and Residues Variations by Substituting Representations for Robust Face Recognition

Minsu Kim, Joanna Hong, Junho Kim, Hong Joo Lee, Yong Man Ro

Auto-TLDR; Unsupervised Disentangling of Identity, viewpoint, and Residue Representations for Robust Face Recognition

Attack-Agnostic Adversarial Detection on Medical Data Using Explainable Machine Learning

Matthew Watson, Noura Al Moubayed

Auto-TLDR; Explainability-based Detection of Adversarial Samples on EHR and Chest X-Ray Data

Identifying Missing Children: Face Age-Progression Via Deep Feature Aging

Debayan Deb, Divyansh Aggarwal, Anil Jain

Auto-TLDR; Aging Face Features for Missing Children Identification

An Experimental Evaluation of Recent Face Recognition Losses for Deepfake Detection

Yu-Cheng Liu, Chia-Ming Chang, I-Hsuan Chen, Yu Ju Ku, Jun-Cheng Chen

Auto-TLDR; Deepfake Classification and Detection using Loss Functions for Face Recognition

Towards Explaining Adversarial Examples Phenomenon in Artificial Neural Networks

Ramin Barati, Reza Safabakhsh, Mohammad Rahmati

Auto-TLDR; Convolutional Neural Networks and Adversarial Training from the Perspective of convergence

Face Image Quality Assessment for Model and Human Perception

Ken Chen, Yichao Wu, Zhenmao Li, Yudong Wu, Ding Liang

Auto-TLDR; A labour-saving method for FIQA training with contradictory data from multiple sources

Tilting at Windmills: Data Augmentation for Deep Pose Estimation Does Not Help with Occlusions

Rafal Pytel, Osman Semih Kayhan, Jan Van Gemert

Auto-TLDR; Targeted Keypoint and Body Part Occlusion Attacks for Human Pose Estimation

Video Face Manipulation Detection through Ensemble of CNNs

Nicolo Bonettini, Edoardo Daniele Cannas, Sara Mandelli, Luca Bondi, Paolo Bestagini, Stefano Tubaro

Auto-TLDR; Face Manipulation Detection in Video Sequences Using Convolutional Neural Networks

Discriminative Multi-Level Reconstruction under Compact Latent Space for One-Class Novelty Detection

Jaewoo Park, Yoon Gyo Jung, Andrew Teoh

Auto-TLDR; Discriminative Compact AE for One-Class novelty detection and Adversarial Example Detection

FeatureNMS: Non-Maximum Suppression by Learning Feature Embeddings

Auto-TLDR; FeatureNMS: Non-Maximum Suppression for Multiple Object Detection

Pose-Robust Face Recognition by Deep Meta Capsule Network-Based Equivariant Embedding

Fangyu Wu, Jeremy Simon Smith, Wenjin Lu, Bailing Zhang

Auto-TLDR; Deep Meta Capsule Network-based Equivariant Embedding Model for Pose-Robust Face Recognition

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

A Flatter Loss for Bias Mitigation in Cross-Dataset Facial Age Estimation

Ali Akbari, Muhammad Awais, Zhenhua Feng, Ammarah Farooq, Josef Kittler

Auto-TLDR; Cross-dataset Age Estimation for Neural Network Training
