Exploring the Ability of CNNs to Generalise to Previously Unseen Scales Over Wide Scale Ranges

Auto-TLDR; A theoretical analysis of invariance and covariance properties of scale channel networks

Similar papers

Understanding When Spatial Transformer Networks Do Not Support Invariance, and What to Do about It

Lukas Finnveden, Ylva Jansson, Tony Lindeberg

Auto-TLDR; Spatial Transformer Networks are unable to support invariance when transforming CNN feature maps

Abstract Slides Poster Similar

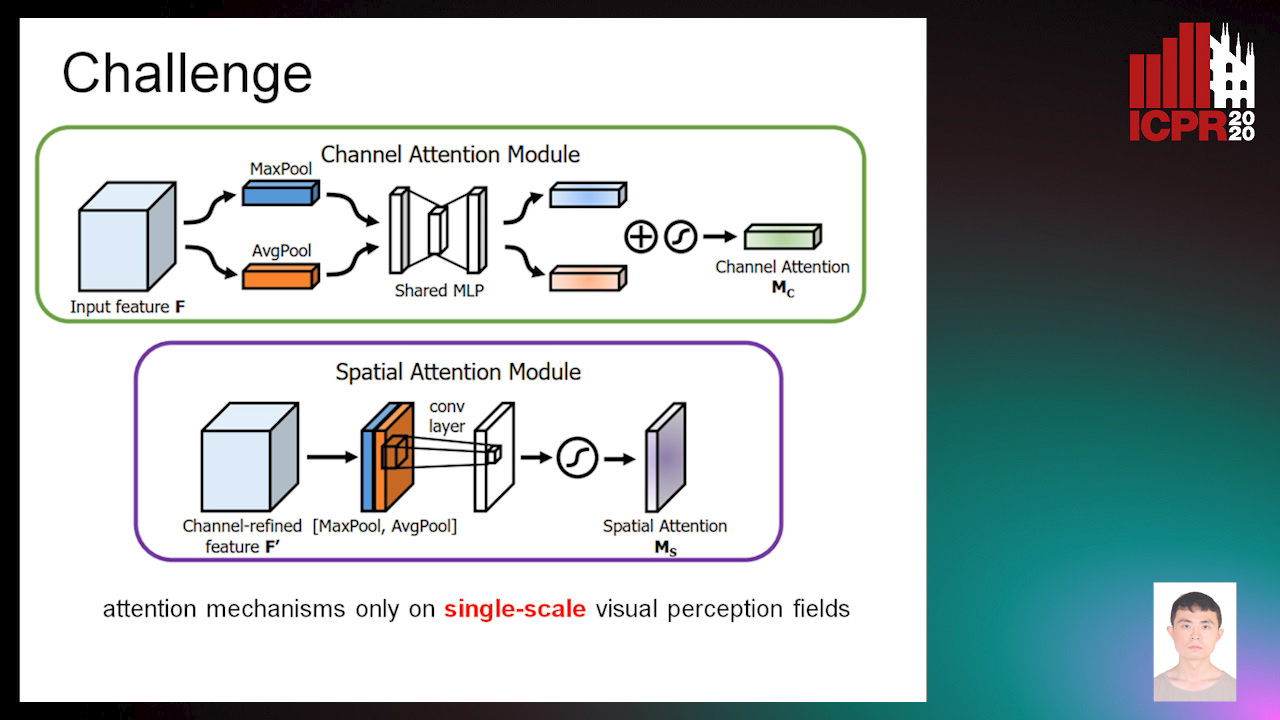

Attention Pyramid Module for Scene Recognition

Zhinan Qiao, Xiaohui Yuan, Chengyuan Zhuang, Abolfazl Meyarian

Auto-TLDR; Attention Pyramid Module for Multi-Scale Scene Recognition

Locality-Promoting Representation Learning

Auto-TLDR; Locality-promoting Regularization for Neural Networks

Trainable Spectrally Initializable Matrix Transformations in Convolutional Neural Networks

Michele Alberti, Angela Botros, Schuetz Narayan, Rolf Ingold, Marcus Liwicki, Mathias Seuret

Auto-TLDR; Trainable and Spectrally Initializable Matrix Transformations for Neural Networks

FatNet: A Feature-Attentive Network for 3D Point Cloud Processing

Chaitanya Kaul, Nick Pears, Suresh Manandhar

Auto-TLDR; Feature-Attentive Neural Networks for Point Cloud Classification and Segmentation

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Efficient-Receptive Field Block with Group Spatial Attention Mechanism for Object Detection

Jiacheng Zhang, Zhicheng Zhao, Fei Su

Auto-TLDR; E-RFB: Efficient-Receptive Field Block for Deep Neural Network for Object Detection

Convolutional STN for Weakly Supervised Object Localization

Akhil Meethal, Marco Pedersoli, Soufiane Belharbi, Eric Granger

Auto-TLDR; Spatial Localization for Weakly Supervised Object Localization

ESResNet: Environmental Sound Classification Based on Visual Domain Models

Andrey Guzhov, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Environmental Sound Classification with Short-Time Fourier Transform Spectrograms

Verifying the Causes of Adversarial Examples

Honglin Li, Yifei Fan, Frieder Ganz, Tony Yezzi, Payam Barnaghi

Auto-TLDR; Exploring the Causes of Adversarial Examples in Neural Networks

ResFPN: Residual Skip Connections in Multi-Resolution Feature Pyramid Networks for Accurate Dense Pixel Matching

Rishav, René Schuster, Ramy Battrawy, Oliver Wasenmüler, Didier Stricker

Auto-TLDR; Resolution Feature Pyramid Networks for Dense Pixel Matching

Kernel-based Graph Convolutional Networks

Auto-TLDR; Spatial Graph Convolutional Networks in Reproducing Kernel Hilbert Space

Abstract Slides Poster Similar

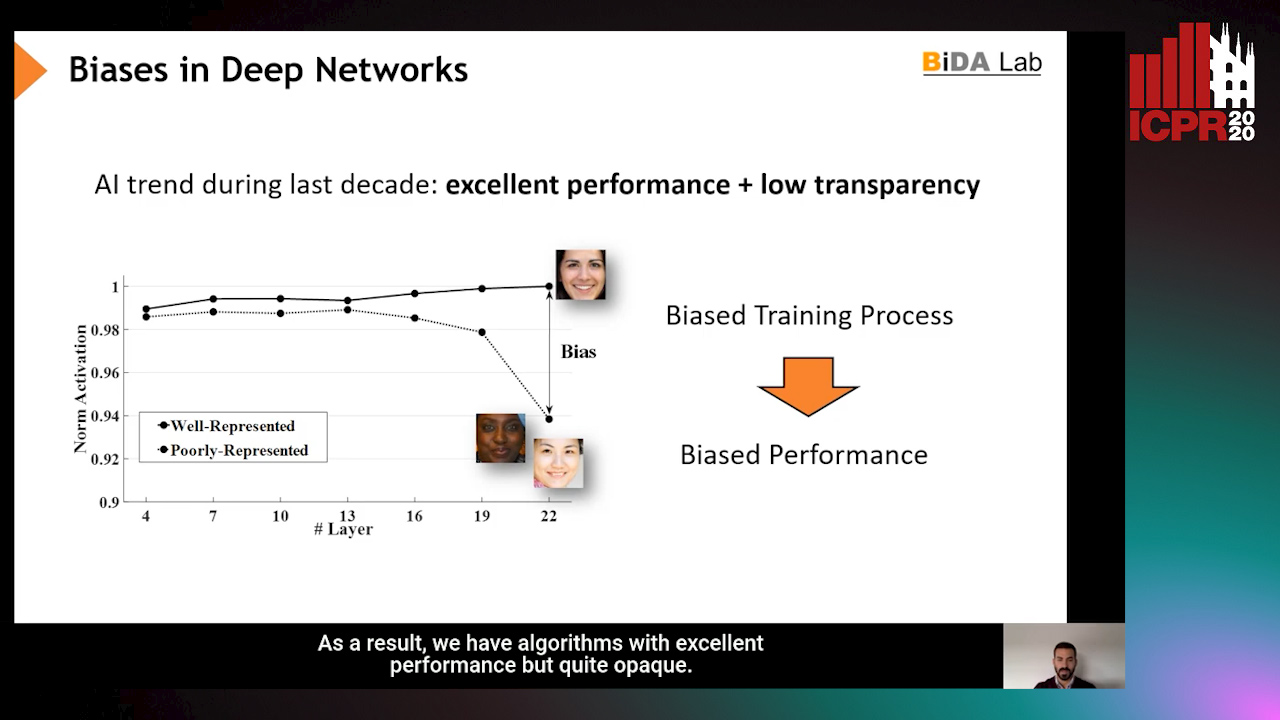

InsideBias: Measuring Bias in Deep Networks and Application to Face Gender Biometrics

Ignacio Serna, Alejandro Peña Almansa, Aythami Morales, Julian Fierrez

Auto-TLDR; InsideBias: Detecting Bias in Deep Neural Networks from Face Images

Triplet-Path Dilated Network for Detection and Segmentation of General Pathological Images

Jiaqi Luo, Zhicheng Zhao, Fei Su, Limei Guo

Auto-TLDR; Triplet-path Network for One-Stage Object Detection and Segmentation in Pathological Images

A Novel Region of Interest Extraction Layer for Instance Segmentation

Leonardo Rossi, Akbar Karimi, Andrea Prati

Auto-TLDR; Generic RoI Extractor for Two-Stage Neural Network for Instance Segmentation

On the Minimal Recognizable Image Patch

Mark Fonaryov, Michael Lindenbaum

Auto-TLDR; MIRC: A Deep Neural Network for Minimal Recognition on Partially Occluded Images

A Close Look at Deep Learning with Small Data

Auto-TLDR; Low-Complexity Neural Networks for Small Data Conditions

Quaternion Capsule Networks

Barış Özcan, Furkan Kınlı, Mustafa Furkan Kirac

Auto-TLDR; Quaternion Capsule Networks for Object Recognition

Generalization Comparison of Deep Neural Networks Via Output Sensitivity

Mahsa Forouzesh, Farnood Salehi, Patrick Thiran

Auto-TLDR; Generalization of Deep Neural Networks using Sensitivity

Combined Invariants to Gaussian Blur and Affine Transformation

Jitka Kostkova, Jan Flusser, Matteo Pedone

Auto-TLDR; A new theory of combined moment invariants to Gaussian blur and spatial affine transformation

SiamMT: Real-Time Arbitrary Multi-Object Tracking

Lorenzo Vaquero, Manuel Mucientes, Victor Brea

Auto-TLDR; SiamMT: A Deep-Learning-based Arbitrary Multi-Object Tracking System for Video

Improved Residual Networks for Image and Video Recognition

Ionut Cosmin Duta, Li Liu, Fan Zhu, Ling Shao

Auto-TLDR; Residual Networks for Deep Learning

Light3DPose: Real-Time Multi-Person 3D Pose Estimation from Multiple Views

Alessio Elmi, Davide Mazzini, Pietro Tortella

Auto-TLDR; 3D Pose Estimation of Multiple People from a Few Calibrated Camera Views Using Deep Learning

PS^2-Net: A Locally and Globally Aware Network for Point-Based Semantic Segmentation

Na Zhao, Tat Seng Chua, Gim Hee Lee

Auto-TLDR; PS^2-Net: A Locally and Globally Aware Deep Learning Framework for Semantic Segmentation on 3D Point Clouds

Transferable Model for Shape Optimization subject to Physical Constraints

Lukas Harsch, Johannes Burgbacher, Stefan Riedelbauch

Auto-TLDR; U-Net with Spatial Transformer Network for Flow Simulations

Uncertainty Guided Recognition of Tiny Craters on the Moon

Thorsten Wilhelm, Christian Wöhler

Auto-TLDR; Accurately Detecting Tiny Craters in Remote Sensed Images Using Deep Neural Networks

PSDNet: A Balanced Architecture of Accuracy and Parameters for Semantic Segmentation

Auto-TLDR; Pyramid Pooling Module with SE1Cblock and D2SUpsample Network (PSDNet)

Hierarchically Aggregated Residual Transformation for Single Image Super Resolution

Auto-TLDR; HARTnet: Hierarchically Aggregated Residual Transformation for Multi-Scale Super-resolution

Interpolation in Auto Encoders with Bridge Processes

Carl Ringqvist, Henrik Hult, Judith Butepage, Hedvig Kjellstrom

Auto-TLDR; Stochastic interpolations from auto encoders trained on flattened sequences

Contextual Classification Using Self-Supervised Auxiliary Models for Deep Neural Networks

Sebastian Palacio, Philipp Engler, Jörn Hees, Andreas Dengel

Auto-TLDR; Self-Supervised Autogenous Learning for Deep Neural Networks

ResNet-Like Architecture with Low Hardware Requirements

Elena Limonova, Daniil Alfonso, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; BM-ResNet: Bipolar Morphological ResNet for Image Classification

Rotation Invariant Aerial Image Retrieval with Group Convolutional Metric Learning

Hyunseung Chung, Woo-Jeoung Nam, Seong-Whan Lee

Auto-TLDR; Robust Remote Sensing Image Retrieval Using Group Convolution with Attention Mechanism and Metric Learning

Force Banner for the Recognition of Spatial Relations

Robin Deléarde, Camille Kurtz, Laurent Wendling, Philippe Dejean

Auto-TLDR; Spatial Relation Recognition using Force Banners

Dimensionality Reduction for Data Visualization and Linear Classification, and the Trade-Off between Robustness and Classification Accuracy

Martin Becker, Jens Lippel, Thomas Zielke

Auto-TLDR; Robustness Assessment of Deep Autoencoder for Data Visualization using Scatter Plots

Learning a Dynamic High-Resolution Network for Multi-Scale Pedestrian Detection

Mengyuan Ding, Shanshan Zhang, Jian Yang

Auto-TLDR; Learnable Dynamic HRNet for Pedestrian Detection

Feature-Dependent Cross-Connections in Multi-Path Neural Networks

Dumindu Tissera, Kasun Vithanage, Rukshan Wijesinghe, Kumara Kahatapitiya, Subha Fernando, Ranga Rodrigo

Auto-TLDR; Multi-path Networks for Adaptive Feature Extraction

Aggregating Object Features Based on Attention Weights for Fine-Grained Image Retrieval

Hongli Lin, Yongqi Song, Zixuan Zeng, Weisheng Wang

Auto-TLDR; DSAW: Unsupervised Dual-selection for Fine-Grained Image Retrieval

On Resource-Efficient Bayesian Network Classifiers and Deep Neural Networks

Wolfgang Roth, Günther Schindler, Holger Fröning, Franz Pernkopf

Auto-TLDR; Quantization-Aware Bayesian Network Classifiers for Small-Scale Scenarios

Context-Aware Residual Module for Image Classification

Auto-TLDR; Context-Aware Residual Module for Image Classification

CQNN: Convolutional Quadratic Neural Networks

Auto-TLDR; Quadratic Neural Network for Image Classification

Recursive Convolutional Neural Networks for Epigenomics

Aikaterini Symeonidi, Anguelos Nicolaou, Frank Johannes, Vincent Christlein

Auto-TLDR; Recursive Convolutional Neural Networks for Epigenomic Data Analysis

Can Data Placement Be Effective for Neural Networks Classification Tasks? Introducing the Orthogonal Loss

Brais Cancela, Veronica Bolon-Canedo, Amparo Alonso-Betanzos

Auto-TLDR; Spatial Placement for Neural Network Training Loss Functions

Ordinal Depth Classification Using Region-Based Self-Attention

Minh Hieu Phan, Son Lam Phung, Abdesselam Bouzerdoum

Auto-TLDR; Region-based Self-Attention for Multi-scale Depth Estimation from a Single 2D Image

ScarfNet: Multi-Scale Features with Deeply Fused and Redistributed Semantics for Enhanced Object Detection

Jin Hyeok Yoo, Dongsuk Kum, Jun Won Choi

Auto-TLDR; Semantic Fusion of Multi-scale Feature Maps for Object Detection

On the Information of Feature Maps and Pruning of Deep Neural Networks

Mohammadreza Soltani, Suya Wu, Jie Ding, Robert Ravier, Vahid Tarokh

Auto-TLDR; Compressing Deep Neural Models Using Mutual Information

Filtered Batch Normalization

András Horváth, Jalal Al-Afandi

Auto-TLDR; Batch Normalization with Out-of-Distribution Activations in Deep Neural Networks

Revisiting the Training of Very Deep Neural Networks without Skip Connections

Oyebade Kayode Oyedotun, Abd El Rahman Shabayek, Djamila Aouada, Bjorn Ottersten

Auto-TLDR; Optimization of Very Deep PlainNets without Shortcut Connections with 'Vanishing and Exploding Units' Activations

A CNN-RNN Framework for Image Annotation from Visual Cues and Social Network Metadata

Tobia Tesan, Pasquale Coscia, Lamberto Ballan

Auto-TLDR; Context-Based Image Annotation with Multiple Semantic Embeddings and Recurrent Neural Networks
