Predicting Chemical Properties Using Self-Attention Multi-Task Learning Based on SMILES Representation

Auto-TLDR; Self-attention based Transformer-Variant Model for Chemical Compound Properties Prediction

Similar papers

Evaluation of BERT and ALBERT Sentence Embedding Performance on Downstream NLP Tasks

Hyunjin Choi, Judong Kim, Seongho Joe, Youngjune Gwon

Auto-TLDR; Sentence Embedding Models for BERT and ALBERT: A Comparison and Evaluation

PIN: A Novel Parallel Interactive Network for Spoken Language Understanding

Peilin Zhou, Zhiqi Huang, Fenglin Liu, Yuexian Zou

Auto-TLDR; Parallel Interactive Network for Spoken Language Understanding

Cross-Lingual Text Image Recognition Via Multi-Task Sequence to Sequence Learning

Zhuo Chen, Fei Yin, Xu-Yao Zhang, Qing Yang, Cheng-Lin Liu

Auto-TLDR; Cross-Lingual Text Image Recognition with Multi-task Learning

Emerging Relation Network and Task Embedding for Multi-Task Regression Problems

Auto-TLDR; A Comparative Study of Multi-Task Learning for Non-linear Time Series Problems

Automatic Student Network Search for Knowledge Distillation

Zhexi Zhang, Wei Zhu, Junchi Yan, Peng Gao, Guotong Xie

Auto-TLDR; NAS-KD: Knowledge Distillation for BERT

A Transformer-Based Radical Analysis Network for Chinese Character Recognition

Chen Yang, Qing Wang, Jun Du, Jianshu Zhang, Changjie Wu, Jiaming Wang

Auto-TLDR; Transformer-based Radical Analysis Network for Chinese Character Recognition

KoreALBERT: Pretraining a Lite BERT Model for Korean Language Understanding

Hyunjae Lee, Jaewoong Yun, Bongkyu Hwang, Seongho Joe, Seungjai Min, Youngjune Gwon

Auto-TLDR; KoreALBERT: A monolingual ALBERT model for Korean language understanding

Tackling Contradiction Detection in German Using Machine Translation and End-To-End Recurrent Neural Networks

Maren Pielka, Rafet Sifa, Lars Patrick Hillebrand, David Biesner, Rajkumar Ramamurthy, Anna Ladi, Christian Bauckhage

Auto-TLDR; Contradiction Detection in Natural Language Inference using Recurrent Neural Networks

Adversarial Training for Aspect-Based Sentiment Analysis with BERT

Akbar Karimi, Andrea Prati, Leonardo Rossi

Auto-TLDR; Adversarial Training of BERT for Aspect-Based Sentiment Analysis

Abstract Slides Poster Similar

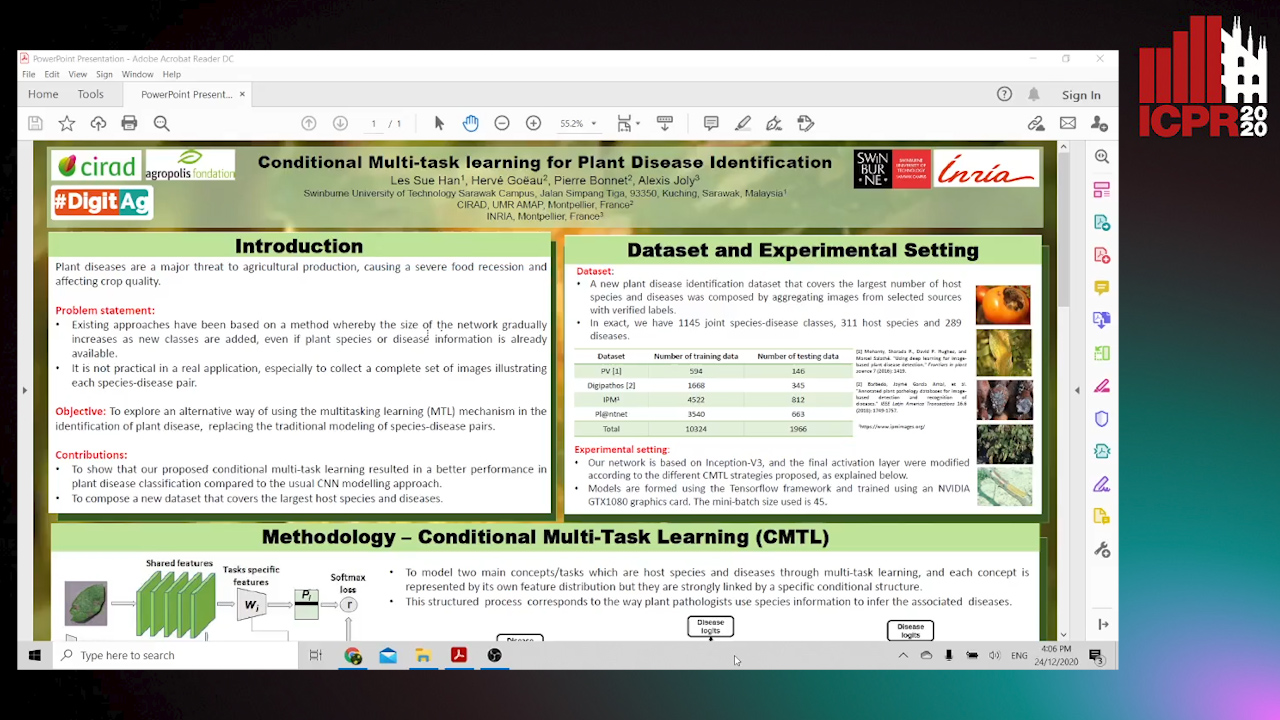

Conditional Multi-Task Learning for Plant Disease Identification

Sue Han Lee, Herve Goëau, Pierre Bonnet, Alexis Joly

Auto-TLDR; A conditional multi-task learning approach for plant disease identification

Segmenting Messy Text: Detecting Boundaries in Text Derived from Historical Newspaper Images

Auto-TLDR; Text Segmentation of Marriage Announcements Using Deep Learning-based Models

Enhancing Handwritten Text Recognition with N-Gram Sequence Decomposition and Multitask Learning

Vasiliki Tassopoulou, George Retsinas, Petros Maragos

Auto-TLDR; Multi-task Learning for Handwritten Text Recognition

PICK: Processing Key Information Extraction from Documents Using Improved Graph Learning-Convolutional Networks

Wenwen Yu, Ning Lu, Xianbiao Qi, Ping Gong, Rong Xiao

Auto-TLDR; PICK: A Graph Learning Framework for Key Information Extraction from Documents

Global Context-Based Network with Transformer for Image2latex

Nuo Pang, Chun Yang, Xiaobin Zhu, Jixuan Li, Xu-Cheng Yin

Auto-TLDR; Image2latex with Global Context block and Transformer

Transformer Networks for Trajectory Forecasting

Francesco Giuliari, Hasan Irtiza, Marco Cristani, Fabio Galasso

Auto-TLDR; Transformer Networks for Trajectory Prediction of People Interactions

Adversarial Encoder-Multi-Task-Decoder for Multi-Stage Processes

Andre Mendes, Julian Togelius, Leandro Dos Santos Coelho

Auto-TLDR; Multi-Task Learning and Semi-Supervised Learning for Multi-Stage Processes

TAAN: Task-Aware Attention Network for Few-Shot Classification

Auto-TLDR; TAAN: Task-Aware Attention Network for Few-Shot Classification

GCNs-Based Context-Aware Short Text Similarity Model

Auto-TLDR; Context-Aware Graph Convolutional Network for Text Similarity

MA-LSTM: A Multi-Attention Based LSTM for Complex Pattern Extraction

Jingjie Guo, Kelang Tian, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; MA-LSTM: Multiple Attention based recurrent neural network for forget gate

Mood Detection Analyzing Lyrics and Audio Signal Based on Deep Learning Architectures

Konstantinos Pyrovolakis, Paraskevi Tzouveli, Giorgos Stamou

Auto-TLDR; Automated Music Mood Detection using Music Information Retrieval

Context Matters: Self-Attention for Sign Language Recognition

Fares Ben Slimane, Mohamed Bouguessa

Auto-TLDR; Attentional Network for Continuous Sign Language Recognition

A Novel Attention-Based Aggregation Function to Combine Vision and Language

Matteo Stefanini, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Fully-Attentive Reduction for Vision and Language

Assessing the Severity of Health States Based on Social Media Posts

Shweta Yadav, Joy Prakash Sain, Amit Sheth, Asif Ekbal, Sriparna Saha, Pushpak Bhattacharyya

Auto-TLDR; A Multiview Learning Framework for Assessment of Health State in Online Health Communities

Weakly Supervised Attention Rectification for Scene Text Recognition

Chengyu Gu, Shilin Wang, Yiwei Zhu, Zheng Huang, Kai Chen

Auto-TLDR; An auxiliary supervision branch for attention-based scene text recognition

Attention-Driven Body Pose Encoding for Human Activity Recognition

Bappaditya Debnath, Swagat Kumar, Marry O'Brien, Ardhendu Behera

Auto-TLDR; Attention-based Body Pose Encoding for Human Activity Recognition

Stratified Multi-Task Learning for Robust Spotting of Scene Texts

Kinjal Dasgupta, Sudip Das, Ujjwal Bhattacharya

Auto-TLDR; Feature Representation Block for Multi-task Learning of Scene Text

Learning Neural Textual Representations for Citation Recommendation

Thanh Binh Kieu, Inigo Jauregi Unanue, Son Bao Pham, Xuan-Hieu Phan, M. Piccardi

Auto-TLDR; Sentence-BERT cascaded with Siamese and triplet networks for citation recommendation

Deep Multi-Task Learning for Facial Expression Recognition and Synthesis Based on Selective Feature Sharing

Rui Zhao, Tianshan Liu, Jun Xiao, P. K. Daniel Lun, Kin-Man Lam

Auto-TLDR; Multi-task Learning for Facial Expression Recognition and Synthesis

Reinforcement Learning with Dual Attention Guided Graph Convolution for Relation Extraction

Zhixin Li, Yaru Sun, Suqin Tang, Canlong Zhang, Huifang Ma

Auto-TLDR; Dual Attention Graph Convolutional Network for Relation Extraction

Multi-Task Learning Based Traditional Mongolian Words Recognition

Hongxi Wei, Hui Zhang, Jing Zhang, Kexin Liu

Auto-TLDR; Multi-task Learning for Mongolian Words Recognition

Neuron-Based Network Pruning Based on Majority Voting

Ali Alqahtani, Xianghua Xie, Ehab Essa, Mark W. Jones

Auto-TLDR; Large-Scale Neural Network Pruning using Majority Voting

Edge-Aware Graph Attention Network for Ratio of Edge-User Estimation in Mobile Networks

Jiehui Deng, Sheng Wan, Xiang Wang, Enmei Tu, Xiaolin Huang, Jie Yang, Chen Gong

Auto-TLDR; EAGAT: Edge-Aware Graph Attention Network for Automatic REU Estimation in Mobile Networks

Aggregating Object Features Based on Attention Weights for Fine-Grained Image Retrieval

Hongli Lin, Yongqi Song, Zixuan Zeng, Weisheng Wang

Auto-TLDR; DSAW: Unsupervised Dual-selection for Fine-Grained Image Retrieval

Equation Attention Relationship Network (EARN): A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

VTT: Long-Term Visual Tracking with Transformers

Tianling Bian, Yang Hua, Tao Song, Zhengui Xue, Ruhui Ma, Neil Robertson, Haibing Guan

Auto-TLDR; Visual Tracking Transformer with transformers for long-term visual tracking

Multi-Task Learning for Calorie Prediction on a Novel Large-Scale Recipe Dataset Enriched with Nutritional Information

Robin Ruede, Verena Heusser, Lukas Frank, Monica Haurilet, Alina Roitberg, Rainer Stiefelhagen

Auto-TLDR; Pic2kcal: Learning Food Recipes from Images for Calorie Estimation

Exploring Spatial-Temporal Representations for fNIRS-based Intimacy Detection via an Attention-enhanced Cascade Convolutional Recurrent Neural Network

Chao Li, Qian Zhang, Ziping Zhao

Auto-TLDR; Intimate Relationship Prediction by Attention-enhanced Cascade Convolutional Recurrent Neural Network Using Functional Near-Infrared Spectroscopy

Answer-Checking in Context: A Multi-Modal Fully Attention Network for Visual Question Answering

Hantao Huang, Tao Han, Wei Han, Deep Yap, Cheng-Ming Chiang

Auto-TLDR; Fully Attention Based Visual Question Answering

Recursive Convolutional Neural Networks for Epigenomics

Aikaterini Symeonidi, Anguelos Nicolaou, Frank Johannes, Vincent Christlein

Auto-TLDR; Recursive Convolutional Neural Networks for Epigenomic Data Analysis

Zero-Shot Text Classification with Semantically Extended Graph Convolutional Network

Tengfei Liu, Yongli Hu, Junbin Gao, Yanfeng Sun, Baocai Yin

Auto-TLDR; Semantically Extended Graph Convolutional Network for Zero-shot Text Classification

Leveraging Unlabeled Data for Glioma Molecular Subtype and Survival Prediction

Nicholas Nuechterlein, Beibin Li, Mehmet Saygin Seyfioglu, Sachin Mehta, Patrick Cimino, Linda Shapiro

Auto-TLDR; Multimodal Brain Tumor Segmentation Using Unlabeled MR Data and Genomic Data for Cancer Prediction

Deep Learning on Active Sonar Data Using Bayesian Optimization for Hyperparameter Tuning

Henrik Berg, Karl Thomas Hjelmervik

Auto-TLDR; Bayesian Optimization for Sonar Operations in Littoral Environments

Text Synopsis Generation for Egocentric Videos

Aidean Sharghi, Niels Lobo, Mubarak Shah

Auto-TLDR; Egocentric Video Summarization Using Multi-task Learning for End-to-End Learning

Improving Robotic Grasping on Monocular Images Via Multi-Task Learning and Positional Loss

William Prew, Toby Breckon, Magnus Bordewich, Ulrik Beierholm

Auto-TLDR; Improving grasping performance from monocular colour images in an end-to-end CNN architecture with multi-task learning

Contextual Classification Using Self-Supervised Auxiliary Models for Deep Neural Networks

Sebastian Palacio, Philipp Engler, Jörn Hees, Andreas Dengel

Auto-TLDR; Self-Supervised Autogenous Learning for Deep Neural Networks

Transformer Reasoning Network for Image-Text Matching and Retrieval

Nicola Messina, Fabrizio Falchi, Andrea Esuli, Giuseppe Amato

Auto-TLDR; A Transformer Encoder Reasoning Network for Image-Text Matching in Large-Scale Information Retrieval

Analyzing Zero-Shot Cross-Lingual Transfer in Supervised NLP Tasks

Hyunjin Choi, Judong Kim, Seongho Joe, Seungjai Min, Youngjune Gwon

Auto-TLDR; Cross-Lingual Language Model Pretraining for Zero-Shot Cross-lingual Transfer

CKG: Dynamic Representation Based on Context and Knowledge Graph

Xunzhu Tang, Tiezhu Sun, Rujie Zhu

Auto-TLDR; CKG: Dynamic Representation Based on Knowledge Graph for Language Sentences
