Deep Multiple Instance Learning with Spatial Attention for ROP Case Classification, Instance Selection and Abnormality Localization

Xirong Li, Wencui Wan, Yang Zhou, Jianchun Zhao, Qijie Wei, Junbo Rong, Pengyi Zhou, Limin Xu, Lijuan Lang, Yuying Liu, Chengzhi Niu, Dayong Ding, Xuemin Jin

Auto-TLDR; MIL-SA: Deep Multiple Instance Learning for Automated Screening of Retinopathy of Prematurity

Similar papers

Learn to Segment Retinal Lesions and Beyond

Qijie Wei, Xirong Li, Weihong Yu, Xiao Zhang, Yongpeng Zhang, Bojie Hu, Bin Mo, Di Gong, Ning Chen, Dayong Ding, Youxin Chen

Auto-TLDR; Multi-task Lesion Segmentation and Disease Classification for Diabetic Retinopathy Grading

Robust Localization of Retinal Lesions Via Weakly-Supervised Learning

Auto-TLDR; Weakly Supervised Learning of Lesions in Fundus Images Using Multi-level Feature Maps and Classification Score

Zoom-CAM: Generating Fine-Grained Pixel Annotations from Image Labels

Xiangwei Shi, Seyran Khademi, Yunqiang Li, Jan Van Gemert

Auto-TLDR; Zoom-CAM for Weakly Supervised Object Localization and Segmentation

SA-UNet: Spatial Attention U-Net for Retinal Vessel Segmentation

Changlu Guo, Marton Szemenyei, Yugen Yi, Wenle Wang, Buer Chen, Changqi Fan

Auto-TLDR; Spatial Attention U-Net for Segmentation of Retinal Blood Vessels

Attention Based Multi-Instance Thyroid Cytopathological Diagnosis with Multi-Scale Feature Fusion

Shuhao Qiu, Yao Guo, Chuang Zhu, Wenli Zhou, Huang Chen

Auto-TLDR; A weakly supervised multi-instance learning framework based on attention mechanism with multi-scale feature fusion for thyroid cytopathological diagnosis

Skin Lesion Classification Using Weakly-Supervised Fine-Grained Method

Xi Xue, Sei-Ichiro Kamata, Daming Luo

Auto-TLDR; Different Region proposal module for skin lesion classification

Dual-Attention Guided Dropblock Module for Weakly Supervised Object Localization

Junhui Yin, Siqing Zhang, Dongliang Chang, Zhanyu Ma, Jun Guo

Auto-TLDR; Dual-Attention Guided Dropblock for Weakly Supervised Object Localization

Fine-Tuning Convolutional Neural Networks: A Comprehensive Guide and Benchmark Analysis for Glaucoma Screening

Amed Mvoulana, Rostom Kachouri, Mohamed Akil

Auto-TLDR; Fine-tuning Convolutional Neural Networks for Glaucoma Screening

Supporting Skin Lesion Diagnosis with Content-Based Image Retrieval

Stefano Allegretti, Federico Bolelli, Federico Pollastri, Sabrina Longhitano, Giovanni Pellacani, Costantino Grana

Auto-TLDR; Skin Images Retrieval Using Convolutional Neural Networks for Skin Lesion Classification and Segmentation

Aggregating Object Features Based on Attention Weights for Fine-Grained Image Retrieval

Hongli Lin, Yongqi Song, Zixuan Zeng, Weisheng Wang

Auto-TLDR; DSAW: Unsupervised Dual-selection for Fine-Grained Image Retrieval

Sparse Network Inversion for Key Instance Detection in Multiple Instance Learning

Beomjo Shin, Junsu Cho, Hwanjo Yu, Seungjin Choi

Auto-TLDR; Improving Attention-based Deep Multiple Instance Learning for Key Instance Detection (KID)

Attention-Based Selection Strategy for Weakly Supervised Object Localization

Auto-TLDR; An Attention-based Selection Strategy for Weakly Supervised Object Localization

ACRM: Attention Cascade R-CNN with Mix-NMS for Metallic Surface Defect Detection

Junting Fang, Xiaoyang Tan, Yuhui Wang

Auto-TLDR; Attention Cascade R-CNN with Mix Non-Maximum Suppression for Robust Metal Defect Detection

Semi-Supervised Generative Adversarial Networks with a Pair of Complementary Generators for Retinopathy Screening

Yingpeng Xie, Qiwei Wan, Hai Xie, En-Leng Tan, Yanwu Xu, Baiying Lei

Auto-TLDR; Generative Adversarial Networks for Retinopathy Diagnosis via Fundus Images

Transfer Learning through Weighted Loss Function and Group Normalization for Vessel Segmentation from Retinal Images

Abdullah Sarhan, Jon Rokne, Reda Alhajj, Andrew Crichton

Auto-TLDR; Deep Learning for Segmentation of Blood Vessels in Retinal Images

A Comparison of Neural Network Approaches for Melanoma Classification

Maria Frasca, Michele Nappi, Michele Risi, Genoveffa Tortora, Alessia Auriemma Citarella

Auto-TLDR; Classification of Melanoma Using Deep Neural Network Methodologies

Abstract Slides Poster Similar

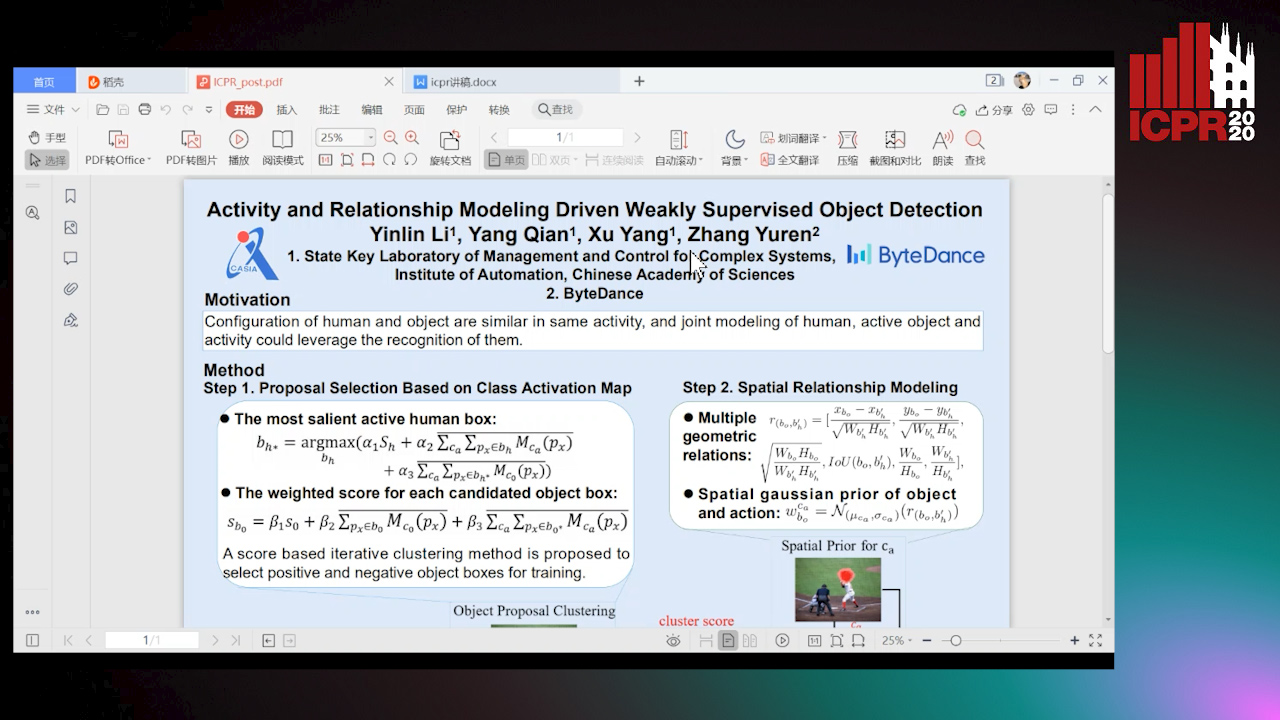

Activity and Relationship Modeling Driven Weakly Supervised Object Detection

Yinlin Li, Yang Qian, Xu Yang, Yuren Zhang

Auto-TLDR; Weakly Supervised Object Detection Using Activity Label and Relationship Modeling

Multi-Order Feature Statistical Model for Fine-Grained Visual Categorization

Qingtao Wang, Ke Zhang, Shaoli Huang, Lianbo Zhang, Jin Fan

Auto-TLDR; Multi-Order Feature Statistical Method for Fine-Grained Visual Categorization

Inception Based Deep Learning Architecture for Tuberculosis Screening of Chest X-Rays

Dipayan Das, K.C. Santosh, Umapada Pal

Auto-TLDR; End to End CNN-based Chest X-ray Screening for Tuberculosis positive patients in the severely resource constrained regions of the world

Classify Breast Histopathology Images with Ductal Instance-Oriented Pipeline

Beibin Li, Ezgi Mercan, Sachin Mehta, Stevan Knezevich, Corey Arnold, Donald Weaver, Joann Elmore, Linda Shapiro

Auto-TLDR; DIOP: Ductal Instance-Oriented Pipeline for Diagnostic Classification

SAT-Net: Self-Attention and Temporal Fusion for Facial Action Unit Detection

Zhihua Li, Zheng Zhang, Lijun Yin

Auto-TLDR; Temporal Fusion and Self-Attention Network for Facial Action Unit Detection

PCANet: Pyramid Context-Aware Network for Retinal Vessel Segmentation

Yi Zhang, Yixuan Chen, Kai Zhang

Auto-TLDR; PCANet: Adaptive Context-Aware Network for Automated Retinal Vessel Segmentation

Global-Local Attention Network for Semantic Segmentation in Aerial Images

Minglong Li, Lianlei Shan, Weiqiang Wang

Auto-TLDR; GLANet: Global-Local Attention Network for Semantic Segmentation

A Generalizable Saliency Map-Based Interpretation of Model Outcome

Shailja Thakur, Sebastian Fischmeister

Auto-TLDR; Interpretability of Deep Neural Networks Using Salient Input and Output

Efficient-Receptive Field Block with Group Spatial Attention Mechanism for Object Detection

Jiacheng Zhang, Zhicheng Zhao, Fei Su

Auto-TLDR; E-RFB: Efficient-Receptive Field Block for Deep Neural Network for Object Detection

Abstract Slides Poster Similar

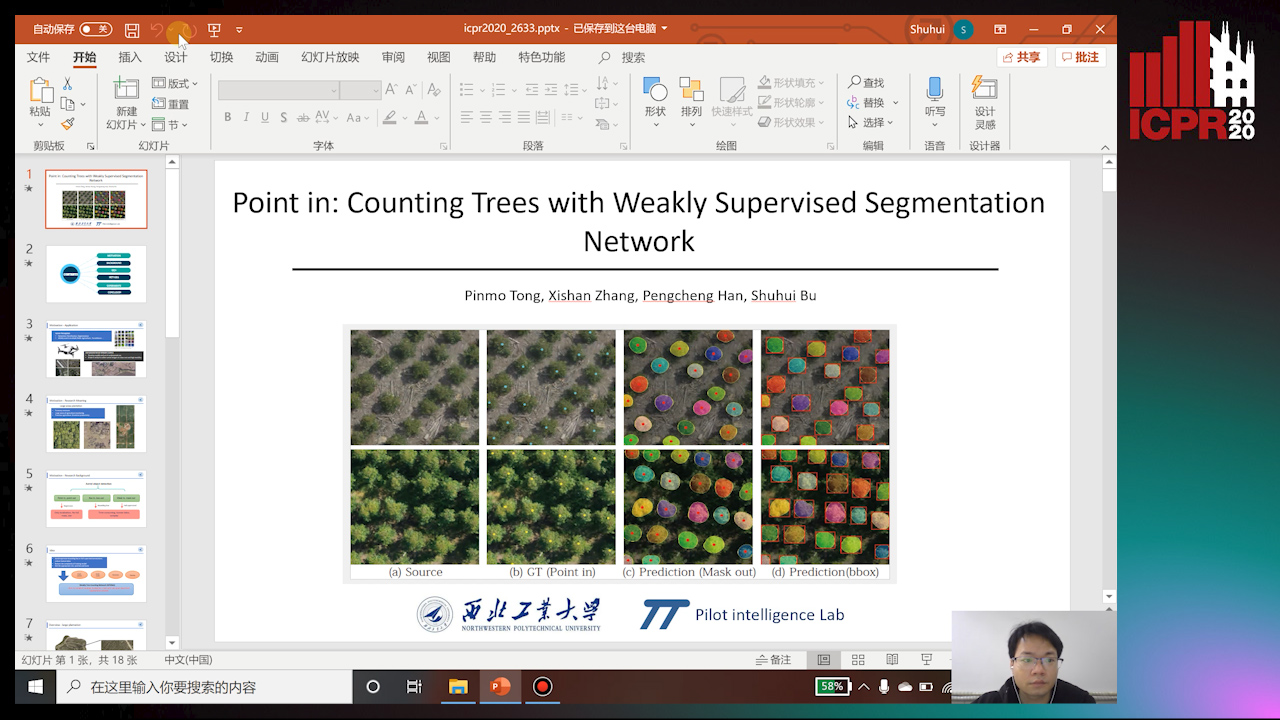

Point In: Counting Trees with Weakly Supervised Segmentation Network

Pinmo Tong, Shuhui Bu, Pengcheng Han

Auto-TLDR; Weakly Supervised Tree Counting Using a Deep Segmentation Network with Localization and Mask Prediction

TAAN: Task-Aware Attention Network for Few-Shot Classification

Auto-TLDR; TAAN: Task-Aware Attention Network for Few-Shot Classification

Convolutional STN for Weakly Supervised Object Localization

Akhil Meethal, Marco Pedersoli, Soufiane Belharbi, Eric Granger

Auto-TLDR; Spatial Localization for Weakly Supervised Object Localization

BCAU-Net: A Novel Architecture with Binary Channel Attention Module for MRI Brain Segmentation

Yongpei Zhu, Zicong Zhou, Guojun Liao, Kehong Yuan

Auto-TLDR; BCAU-Net: Binary Channel Attention U-Net for MRI brain segmentation

A Systematic Investigation on Deep Architectures for Automatic Skin Lesions Classification

Pierluigi Carcagni, Marco Leo, Andrea Cuna, Giuseppe Celeste, Cosimo Distante

Auto-TLDR; RegNet: Deep Investigation of Convolutional Neural Networks for Automatic Classification of Skin Lesions

A Benchmark Dataset for Segmenting Liver, Vasculature and Lesions from Large-Scale Computed Tomography Data

Bo Wang, Zhengqing Xu, Wei Xu, Qingsen Yan, Liang Zhang, Zheng You

Auto-TLDR; The Biggest Treatment-Oriented Liver Cancer Dataset for Segmentation

DARN: Deep Attentive Refinement Network for Liver Tumor Segmentation from 3D CT Volume

Yao Zhang, Jiang Tian, Cheng Zhong, Yang Zhang, Zhongchao Shi, Zhiqiang He

Auto-TLDR; Deep Attentive Refinement Network for Liver Tumor Segmentation from 3D Computed Tomography Using Multi-Level Features

MFPP: Morphological Fragmental Perturbation Pyramid for Black-Box Model Explanations

Qing Yang, Xia Zhu, Jong-Kae Fwu, Yun Ye, Ganmei You, Yuan Zhu

Auto-TLDR; Morphological Fragmental Perturbation Pyramid for Explainable Deep Neural Network

Accurate Cell Segmentation in Digital Pathology Images Via Attention Enforced Networks

Zeyi Yao, Kaiqi Li, Guanhong Zhang, Yiwen Luo, Xiaoguang Zhou, Muyi Sun

Auto-TLDR; AENet: Attention Enforced Network for Automatic Cell Segmentation

Multi-Scale Residual Pyramid Attention Network for Monocular Depth Estimation

Jing Liu, Xiaona Zhang, Zhaoxin Li, Tianlu Mao

Auto-TLDR; Multi-scale Residual Pyramid Attention Network for Monocular Depth Estimation

CAggNet: Crossing Aggregation Network for Medical Image Segmentation

Auto-TLDR; Crossing Aggregation Network for Medical Image Segmentation

An Improved Bilinear Pooling Method for Image-Based Action Recognition

Auto-TLDR; An improved bilinear pooling method for image-based action recognition

Cross-View Relation Networks for Mammogram Mass Detection

Ma Jiechao, Xiang Li, Hongwei Li, Ruixuan Wang, Bjoern Menze, Wei-Shi Zheng

Auto-TLDR; Multi-view Modeling for Mass Detection in Mammogram

Improving Explainability of Integrated Gradients with Guided Non-Linearity

Hyuk Jin Kwon, Hyung Il Koo, Nam Ik Cho

Auto-TLDR; Guided Non-linearity for Attribution in Convolutional Neural Networks

Abstract Slides Poster Similar

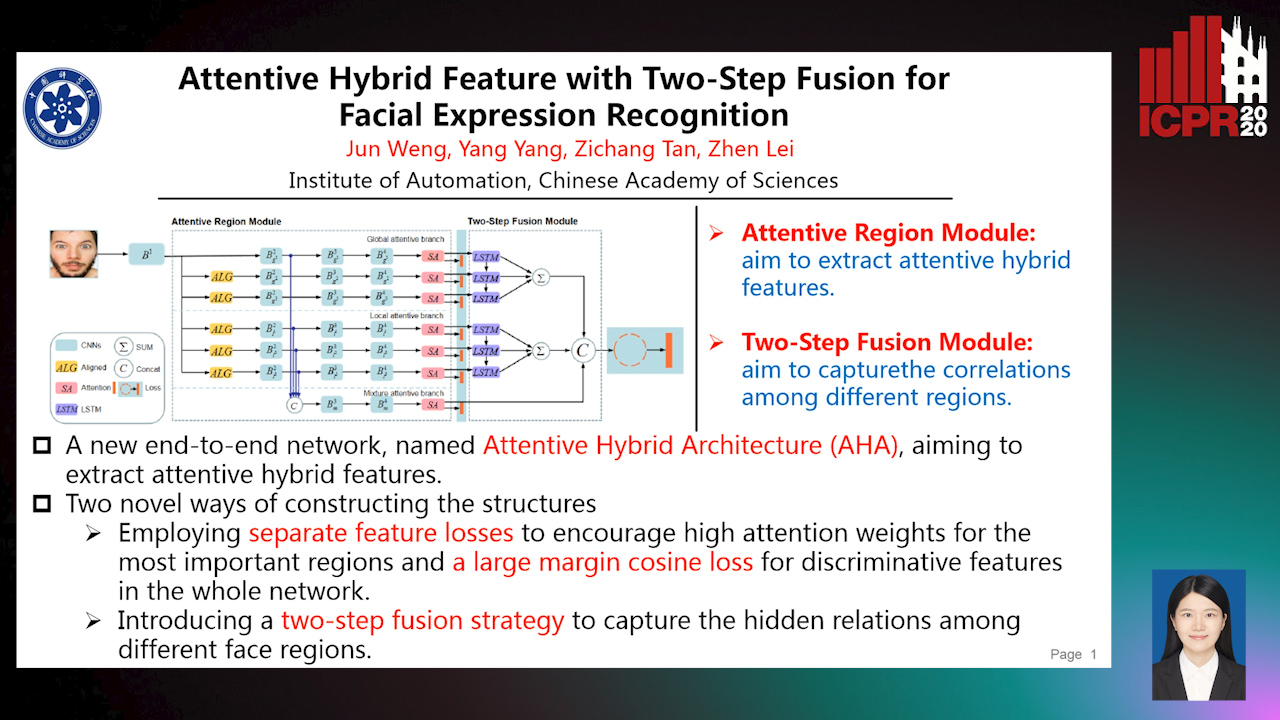

Attentive Hybrid Feature Based a Two-Step Fusion for Facial Expression Recognition

Jun Weng, Yang Yang, Zichang Tan, Zhen Lei

Auto-TLDR; Attentive Hybrid Architecture for Facial Expression Recognition

Multi-Scale and Attention Based ResNet for Heartbeat Classification

Haojie Zhang, Gongping Yang, Yuwen Huang, Feng Yuan, Yilong Yin

Auto-TLDR; A Multi-Scale and Attention based ResNet for ECG heartbeat classification in intra-patient and inter-patient paradigms

Multi-Modal Identification of State-Sponsored Propaganda on Social Media

Auto-TLDR; A balanced dataset for detecting state-sponsored Internet propaganda

Attention Pyramid Module for Scene Recognition

Zhinan Qiao, Xiaohui Yuan, Chengyuan Zhuang, Abolfazl Meyarian

Auto-TLDR; Attention Pyramid Module for Multi-Scale Scene Recognition

Collaborative Human Machine Attention Module for Character Recognition

Chetan Ralekar, Tapan Gandhi, Santanu Chaudhury

Auto-TLDR; A Collaborative Human-Machine Attention Module for Deep Neural Networks

Dual Stream Network with Selective Optimization for Skin Disease Recognition in Consumer Grade Images

Krishnam Gupta, Jaiprasad Rampure, Monu Krishnan, Ajit Narayanan, Nikhil Narayan

Auto-TLDR; A Deep Network Architecture for Skin Disease Localisation and Classification on Consumer Grade Images

Unsupervised Sound Source Localization From Audio-Image Pairs Using Input Gradient Map

Tomohiro Tanaka, Takahiro Shinozaki

Auto-TLDR; Unsupervised Sound Localization Using Gradient Method

Self and Channel Attention Network for Person Re-Identification

Asad Munir, Niki Martinel, Christian Micheloni

Auto-TLDR; SCAN: Self and Channel Attention Network for Person Re-identification

Abstract Slides Poster Similar

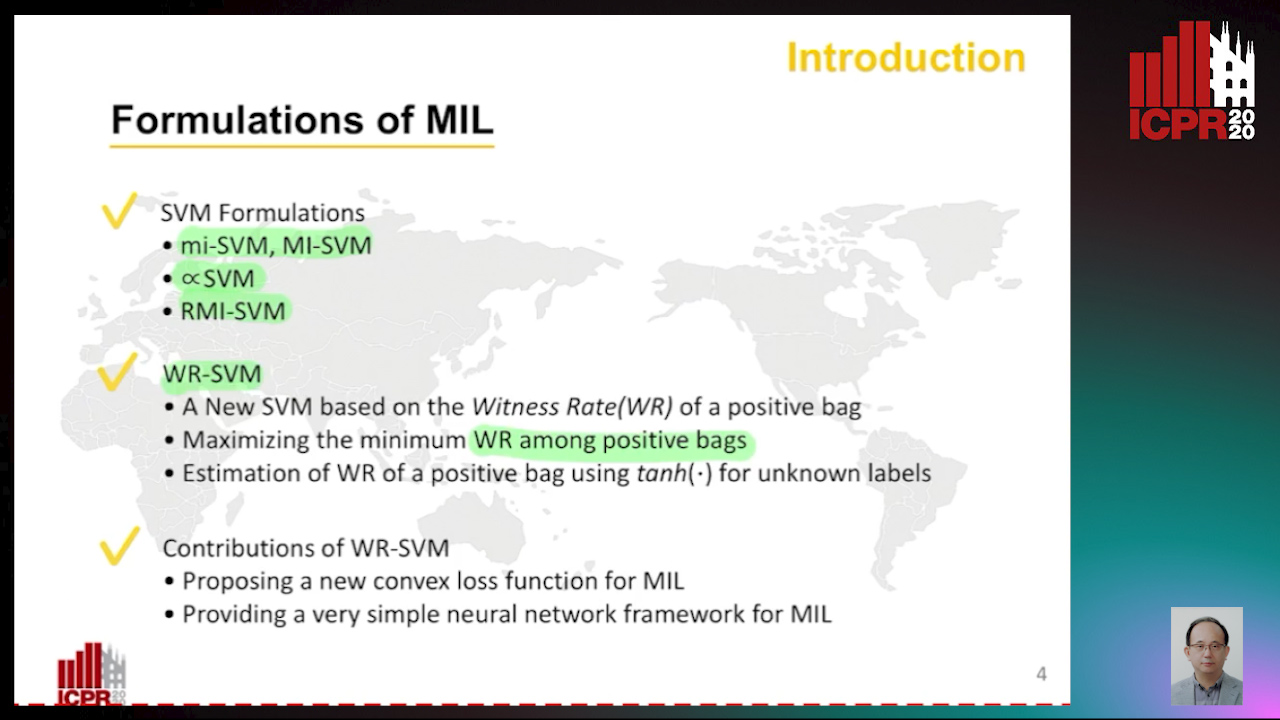

A New Convex Loss Function for Multiple Instance Support Vector Machines

Auto-TLDR; WR-SVM: A Novel Multiple Instance SVM for Video Classification
