Multi-Attribute Regression Network for Face Reconstruction

Auto-TLDR; A Multi-Attribute Regression Network for Face Reconstruction

Similar papers

Joint Face Alignment and 3D Face Reconstruction with Efficient Convolution Neural Networks

Keqiang Li, Huaiyu Wu, Xiuqin Shang, Zhen Shen, Gang Xiong, Xisong Dong, Bin Hu, Fei-Yue Wang

Auto-TLDR; Mobile-FRNet: Efficient 3D Morphable Model Alignment and 3D Face Reconstruction from a Single 2D Facial Image

Learning Semantic Representations Via Joint 3D Face Reconstruction and Facial Attribute Estimation

Zichun Weng, Youjun Xiang, Xianfeng Li, Juntao Liang, Wanliang Huo, Yuli Fu

Auto-TLDR; Joint Framework for 3D Face Reconstruction with Facial Attribute Estimation

Hybrid Approach for 3D Head Reconstruction: Using Neural Networks and Visual Geometry

Oussema Bouafif, Bogdan Khomutenko, Mohammed Daoudi

Auto-TLDR; Recovering 3D Head Geometry from a Single Image using Deep Learning and Geometric Techniques

Quality-Based Representation for Unconstrained Face Recognition

Nelson Méndez-Llanes, Katy Castillo-Rosado, Heydi Mendez-Vazquez, Massimo Tistarelli

Auto-TLDR; Activation Map for Face Recognition in Unconstrained Environments

Inner Eye Canthus Localization for Human Body Temperature Screening

Claudio Ferrari, Lorenzo Berlincioni, Marco Bertini, Alberto Del Bimbo

Auto-TLDR; Automatic Localization of the Inner Eye Canthus in Thermal Face Images using 3D Morphable Face Model

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

Face Super-Resolution Network with Incremental Enhancement of Facial Parsing Information

Shuang Liu, Chengyi Xiong, Zhirong Gao

Auto-TLDR; Learning-based Face Super-Resolution with Incremental Boosting Facial Parsing Information

Continuous Learning of Face Attribute Synthesis

Ning Xin, Shaohui Xu, Fangzhe Nan, Xiaoli Dong, Weijun Li, Yuanzhou Yao

Auto-TLDR; Continuous Learning for Face Attribute Synthesis

Object Features and Face Detection Performance: Analyses with 3D-Rendered Synthetic Data

Jian Han, Sezer Karaoglu, Hoang-An Le, Theo Gevers

Auto-TLDR; Synthetic Data for Face Detection Using 3DU Face Dataset

Orthographic Projection Linear Regression for Single Image 3D Human Pose Estimation

Yahui Zhang, Shaodi You, Theo Gevers

Auto-TLDR; A Deep Neural Network for 3D Human Pose Estimation from a Single 2D Image in the Wild

A Multi-Task Neural Network for Action Recognition with 3D Key-Points

Rongxiao Tang, Wang Luyang, Zhenhua Guo

Auto-TLDR; Multi-task Neural Network for Action Recognition and 3D Human Pose Estimation

Sequential Non-Rigid Factorisation for Head Pose Estimation

Stefania Cristina, Kenneth Patrick Camilleri

Auto-TLDR; Sequential Shape-and-Motion Factorisation for Head Pose Estimation in Eye-Gaze Tracking

Exemplar Guided Cross-Spectral Face Hallucination Via Mutual Information Disentanglement

Haoxue Wu, Huaibo Huang, Aijing Yu, Jie Cao, Zhen Lei, Ran He

Auto-TLDR; Exemplar Guided Cross-Spectral Face Hallucination with Structural Representation Learning

Adaptive Feature Fusion Network for Gaze Tracking in Mobile Tablets

Yiwei Bao, Yihua Cheng, Yunfei Liu, Feng Lu

Auto-TLDR; Adaptive Feature Fusion Network for Multi-stream Gaze Estimation in Mobile Tablets

HP2IFS: Head Pose Estimation Exploiting Partitioned Iterated Function Systems

Carmen Bisogni, Michele Nappi, Chiara Pero, Stefano Ricciardi

Auto-TLDR; PIFS based head pose estimation using fractal coding theory and Partitioned Iterated Function Systems

DmifNet: 3D Shape Reconstruction Based on Dynamic Multi-Branch Information Fusion

Auto-TLDR; DmifNet: Dynamic Multi-branch Information Fusion Network for 3D Shape Reconstruction from a Single-View Image

MRP-Net: A Light Multiple Region Perception Neural Network for Multi-Label AU Detection

Yang Tang, Shuang Chen, Honggang Zhang, Gang Wang, Rui Yang

Auto-TLDR; MRP-Net: A Fast and Light Neural Network for Facial Action Unit Detection

SATGAN: Augmenting Age Biased Dataset for Cross-Age Face Recognition

Wenshuang Liu, Wenting Chen, Yuanlue Zhu, Linlin Shen

Auto-TLDR; SATGAN: Stable Age Translation GAN for Cross-Age Face Recognition

Unsupervised Face Manipulation Via Hallucination

Keerthy Kusumam, Enrique Sanchez, Georgios Tzimiropoulos

Auto-TLDR; Unpaired Face Image Manipulation using Autoencoders

Pixel-based Facial Expression Synthesis

Auto-TLDR; Pixel-based Facial Expression Synthesis using GANs

Talking Face Generation Via Learning Semantic and Temporal Synchronous Landmarks

Aihua Zheng, Feixia Zhu, Hao Zhu, Mandi Luo, Ran He

Auto-TLDR; A semantic and temporal synchronous landmark learning method for talking face generation

Two-Stream Temporal Convolutional Network for Dynamic Facial Attractiveness Prediction

Nina Weng, Jiahao Wang, Annan Li, Yunhong Wang

Auto-TLDR; 2S-TCN: A Two-Stream Temporal Convolutional Network for Dynamic Facial Attractiveness Prediction

Detecting Manipulated Facial Videos: A Time Series Solution

Zhang Zhewei, Ma Can, Gao Meilin, Ding Bowen

Auto-TLDR; Face-Alignment Based Bi-LSTM for Fake Video Detection

Abstract Slides Poster Similar

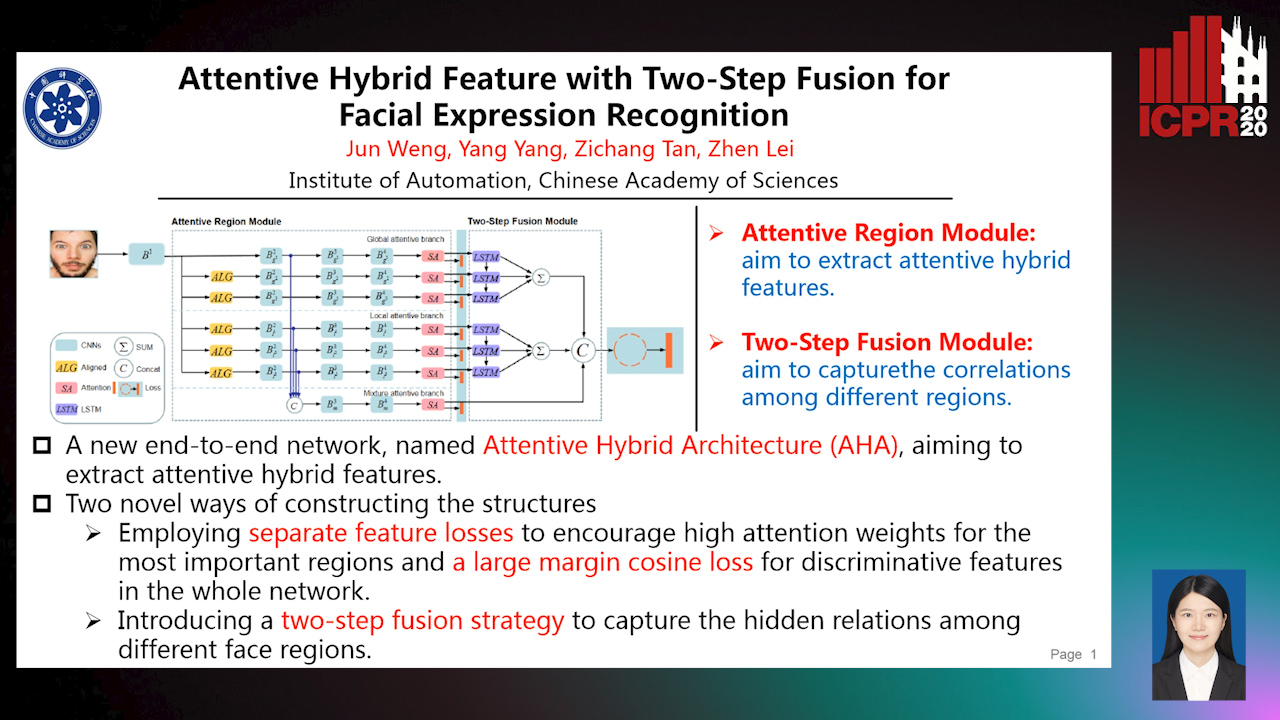

Attentive Hybrid Feature Based a Two-Step Fusion for Facial Expression Recognition

Jun Weng, Yang Yang, Zichang Tan, Zhen Lei

Auto-TLDR; Attentive Hybrid Architecture for Facial Expression Recognition

Face Image Quality Assessment for Model and Human Perception

Ken Chen, Yichao Wu, Zhenmao Li, Yudong Wu, Ding Liang

Auto-TLDR; A labour-saving method for FIQA training with contradictory data from multiple sources

Multi-Laplacian GAN with Edge Enhancement for Face Super Resolution

Auto-TLDR; Face Image Super-Resolution with Enhanced Edge Information

Contrastive Data Learning for Facial Pose and Illumination Normalization

Auto-TLDR; Pose and Illumination Normalization with Contrast Data Learning for Face Recognition

PEAN: 3D Hand Pose Estimation Adversarial Network

Linhui Sun, Yifan Zhang, Jing Lu, Jian Cheng, Hanqing Lu

Auto-TLDR; PEAN: 3D Hand Pose Estimation with Adversarial Learning Framework

Facial Expression Recognition Using Residual Masking Network

Luan Pham, Vu Huynh, Tuan Anh Tran

Auto-TLDR; Deep Residual Masking for Automatic Facial Expression Recognition

Unsupervised Learning of Landmarks Based on Inter-Intra Subject Consistencies

Weijian Li, Haofu Liao, Shun Miao, Le Lu, Jiebo Luo

Auto-TLDR; Unsupervised Learning for Facial Landmark Discovery using Inter-subject Landmark consistencies

Deep Multi-Task Learning for Facial Expression Recognition and Synthesis Based on Selective Feature Sharing

Rui Zhao, Tianshan Liu, Jun Xiao, P. K. Daniel Lun, Kin-Man Lam

Auto-TLDR; Multi-task Learning for Facial Expression Recognition and Synthesis

Estimating Gaze Points from Facial Landmarks by a Remote Spherical Camera

Auto-TLDR; Gaze Point Estimation from a Spherical Image from Facial Landmarks

Abstract Slides Poster Similar

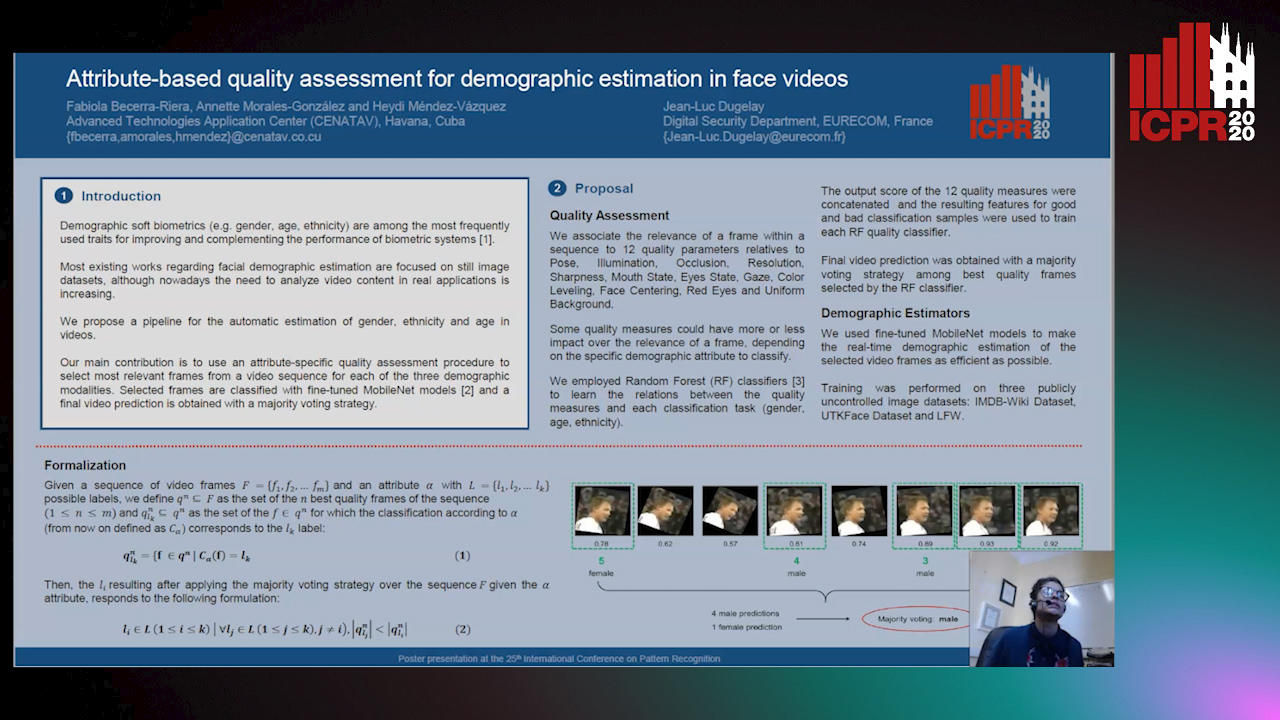

Attribute-Based Quality Assessment for Demographic Estimation in Face Videos

Fabiola Becerra-Riera, Annette Morales-González, Heydi Mendez-Vazquez, Jean-Luc Dugelay

Auto-TLDR; Facial Demographic Estimation in Video Scenarios Using Quality Assessment

Unsupervised Disentangling of Viewpoint and Residues Variations by Substituting Representations for Robust Face Recognition

Minsu Kim, Joanna Hong, Junho Kim, Hong Joo Lee, Yong Man Ro

Auto-TLDR; Unsupervised Disentangling of Identity, viewpoint, and Residue Representations for Robust Face Recognition

Exploring Severe Occlusion: Multi-Person 3D Pose Estimation with Gated Convolution

Renshu Gu, Gaoang Wang, Jenq-Neng Hwang

Auto-TLDR; 3D Human Pose Estimation for Multi-Human Videos with Occlusion

Coherence and Identity Learning for Arbitrary-Length Face Video Generation

Shuquan Ye, Chu Han, Jiaying Lin, Guoqiang Han, Shengfeng He

Auto-TLDR; Face Video Synthesis Using Identity-Aware GAN and Face Coherence Network

A Flatter Loss for Bias Mitigation in Cross-Dataset Facial Age Estimation

Ali Akbari, Muhammad Awais, Zhenhua Feng, Ammarah Farooq, Josef Kittler

Auto-TLDR; Cross-dataset Age Estimation for Neural Network Training

Three-Dimensional Lip Motion Network for Text-Independent Speaker Recognition

Jianrong Wang, Tong Wu, Shanyu Wang, Mei Yu, Qiang Fang, Ju Zhang, Li Liu

Auto-TLDR; Lip Motion Network for Text-Independent and Text-Dependent Speaker Recognition

Rethinking ReID: Multi-Feature Fusion Person Re-Identification Based on Orientation Constraints

Mingjing Ai, Guozhi Shan, Bo Liu, Tianyang Liu

Auto-TLDR; Person Re-identification with Orientation Constrained Network

Unconstrained Facial Expression Recognition Based on Cascade Decision and Gabor Filters

Yanhong Wu, Lijie Zhang, Guannan Chen, Pablo Navarrete Michelini

Auto-TLDR; Convolutional Neural Network for Facial Expression Recognition under Unconstrained Natural Conditions

Pose-Robust Face Recognition by Deep Meta Capsule Network-Based Equivariant Embedding

Fangyu Wu, Jeremy Simon Smith, Wenjin Lu, Bailing Zhang

Auto-TLDR; Deep Meta Capsule Network-based Equivariant Embedding Model for Pose-Robust Face Recognition

3D Point Cloud Registration Based on Cascaded Mutual Information Attention Network

Auto-TLDR; Cascaded Mutual Information Attention Network for 3D Point Cloud Registration

Light3DPose: Real-Time Multi-Person 3D Pose Estimation from Multiple Views

Alessio Elmi, Davide Mazzini, Pietro Tortella

Auto-TLDR; 3D Pose Estimation of Multiple People from a Few calibrated Camera Views using Deep Learning

Identity-Aware Facial Expression Recognition in Compressed Video

Xiaofeng Liu, Linghao Jin, Xu Han, Jun Lu, Jonghye Woo, Jane You

Auto-TLDR; Exploring Facial Expression Representation in Compressed Video with Mutual Information Minimization

Local-Global Interactive Network for Face Age Transformation

Jie Song, Ping Wei, Huan Li, Yongchi Zhang, Nanning Zheng

Auto-TLDR; A Novel Local-Global Interaction Framework for Long-span Face Age Transformation

PROPEL: Probabilistic Parametric Regression Loss for Convolutional Neural Networks

Muhammad Asad, Rilwan Basaru, S M Masudur Rahman Al Arif, Greg Slabaugh

Auto-TLDR; PRObabilistic Parametric rEgression Loss for Probabilistic Regression Using Convolutional Neural Networks

High Resolution Face Age Editing

Xu Yao, Gilles Puy, Alasdair Newson, Yann Gousseau, Pierre Hellier

Auto-TLDR; An Encoder-Decoder Architecture for Face Age editing on High Resolution Images

MixedFusion: 6D Object Pose Estimation from Decoupled RGB-Depth Features

Hangtao Feng, Lu Zhang, Xu Yang, Zhiyong Liu

Auto-TLDR; MixedFusion: Combining Color and Point Clouds for 6D Pose Estimation
