Learning to Sort Handwritten Text Lines in Reading Order through Estimated Binary Order Relations

Auto-TLDR; Automatic Reading Order of Text Lines in Handwritten Text Documents

Similar papers

The HisClima Database: Historical Weather Logs for Automatic Transcription and Information Extraction

Verónica Romero, Joan Andreu Sánchez

Auto-TLDR; Automatic Handwritten Text Recognition and Information Extraction from Historical Weather Logs

Textual-Content Based Classification of Bundles of Untranscribed Manuscript Images

José Ramón Prieto Fontcuberta, Enrique Vidal, Vicente Bosch, Carlos Alonso, Carmen Orcero, Lourdes Márquez

Auto-TLDR; Probabilistic Indexing for Text-based Classification of Manuscripts

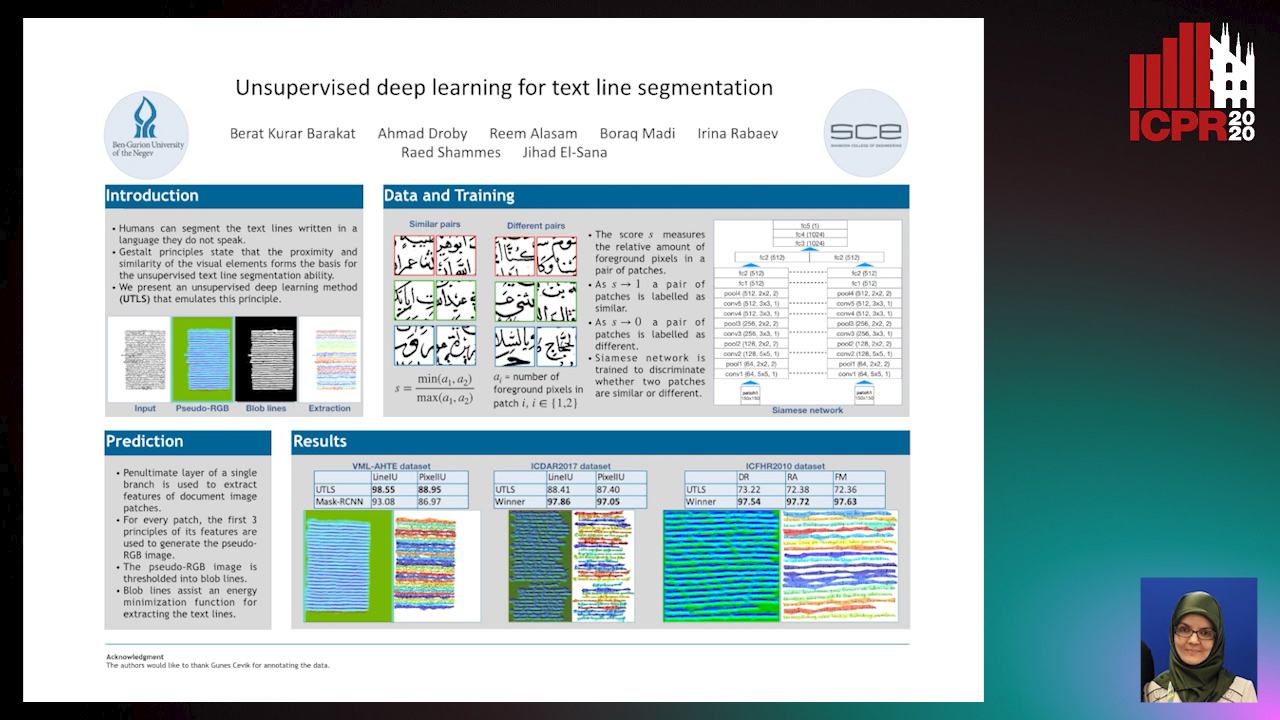

Unsupervised deep learning for text line segmentation

Berat Kurar Barakat, Ahmad Droby, Reem Alaasam, Borak Madi, Irina Rabaev, Raed Shammes, Jihad El-Sana

Auto-TLDR; Unsupervised Deep Learning for Handwritten Text Line Segmentation without Annotation

Watch Your Strokes: Improving Handwritten Text Recognition with Deformable Convolutions

Iulian Cojocaru, Silvia Cascianelli, Lorenzo Baraldi, Massimiliano Corsini, Rita Cucchiara

Auto-TLDR; Deformable Convolutional Neural Networks for Handwritten Text Recognition

Named Entity Recognition and Relation Extraction with Graph Neural Networks in Semi Structured Documents

Manuel Carbonell, Pau Riba, Mauricio Villegas, Alicia Fornés, Josep Llados

Auto-TLDR; Graph Neural Network for Entity Recognition and Relation Extraction in Semi-Structured Documents

Text Baseline Recognition Using a Recurrent Convolutional Neural Network

Matthias Wödlinger, Robert Sablatnig

Auto-TLDR; Automatic Baseline Detection of Handwritten Text Using Recurrent Convolutional Neural Network

Writer Identification Using Deep Neural Networks: Impact of Patch Size and Number of Patches

Akshay Punjabi, José Ramón Prieto Fontcuberta, Enrique Vidal

Auto-TLDR; Writer Recognition Using Deep Neural Networks for Handwritten Text Images

Multiple Document Datasets Pre-Training Improves Text Line Detection with Deep Neural Networks

Mélodie Boillet, Christopher Kermorvant, Thierry Paquet

Auto-TLDR; A fully convolutional network for document layout analysis

Combining Deep and Ad-Hoc Solutions to Localize Text Lines in Ancient Arabic Document Images

Olfa Mechi, Maroua Mehri, Rolf Ingold, Najoua Essoukri Ben Amara

Auto-TLDR; Text Line Localization in Ancient Handwritten Arabic Document Images using U-Net and Topological Structural Analysis

A Few-Shot Learning Approach for Historical Ciphered Manuscript Recognition

Mohamed Ali Souibgui, Alicia Fornés, Yousri Kessentini, Crina Tudor

Auto-TLDR; Handwritten Ciphers Recognition Using Few-Shot Object Detection

An Evaluation of DNN Architectures for Page Segmentation of Historical Newspapers

Manuel Burghardt, Bernhard Liebl

Auto-TLDR; Evaluation of Backbone Architectures for Optical Character Segmentation of Historical Documents

Generation of Hypergraphs from the N-Best Parsing of 2D-Probabilistic Context-Free Grammars for Mathematical Expression Recognition

Noya Ernesto, Joan Andreu Sánchez, Jose Miguel Benedi

Auto-TLDR; Hypergraphs: A Compact Representation of the N-best parse trees from 2D-PCFGs

Generic Document Image Dewarping by Probabilistic Discretization of Vanishing Points

Gilles Simon, Salvatore Tabbone

Auto-TLDR; Robust Document Dewarping using vanishing points

Improving Word Recognition Using Multiple Hypotheses and Deep Embeddings

Siddhant Bansal, Praveen Krishnan, C. V. Jawahar

Auto-TLDR; EmbedNet: fuse recognition-based and recognition-free approaches for word recognition using learning-based methods

Ancient Document Layout Analysis: Autoencoders Meet Sparse Coding

Homa Davoudi, Marco Fiorucci, Arianna Traviglia

Auto-TLDR; Unsupervised Representation Learning for Document Layout Analysis

End-To-End Hierarchical Relation Extraction for Generic Form Understanding

Tuan Anh Nguyen Dang, Duc-Thanh Hoang, Quang Bach Tran, Chih-Wei Pan, Thanh-Dat Nguyen

Auto-TLDR; Joint Entity Labeling and Link Prediction for Form Understanding in Noisy Scanned Documents

Vision-Based Layout Detection from Scientific Literature Using Recurrent Convolutional Neural Networks

Auto-TLDR; Transfer Learning for Scientific Literature Layout Detection Using Convolutional Neural Networks

Fast Approximate Modelling of the Next Combination Result for Stopping the Text Recognition in a Video

Konstantin Bulatov, Nadezhda Fedotova, Vladimir V. Arlazarov

Auto-TLDR; Stopping Video Stream Recognition of a Text Field Using Optimized Computation Scheme

PICK: Processing Key Information Extraction from Documents Using Improved Graph Learning-Convolutional Networks

Wenwen Yu, Ning Lu, Xianbiao Qi, Ping Gong, Rong Xiao

Auto-TLDR; PICK: A Graph Learning Framework for Key Information Extraction from Documents

LODENet: A Holistic Approach to Offline Handwritten Chinese and Japanese Text Line Recognition

Huu Tin Hoang, Chun-Jen Peng, Hung Tran, Hung Le, Huy Hoang Nguyen

Auto-TLDR; Logographic DEComposition Encoding for Chinese and Japanese Text Line Recognition

Recursive Recognition of Offline Handwritten Mathematical Expressions

Marco Cotogni, Claudio Cusano, Antonino Nocera

Auto-TLDR; Online Handwritten Mathematical Expression Recognition with Recurrent Neural Network

A Gated and Bifurcated Stacked U-Net Module for Document Image Dewarping

Hmrishav Bandyopadhyay, Tanmoy Dasgupta, Nibaran Das, Mita Nasipuri

Auto-TLDR; Gated and Bifurcated Stacked U-Net for Dewarping Document Images

ID Documents Matching and Localization with Multi-Hypothesis Constraints

Guillaume Chiron, Nabil Ghanmi, Ahmad Montaser Awal

Auto-TLDR; Identity Document Localization in the Wild Using Multi-hypothesis Exploration

A Multilinear Sampling Algorithm to Estimate Shapley Values

Auto-TLDR; A sampling method for Shapley values for multilayer Perceptrons

Approach for Document Detection by Contours and Contrasts

Daniil Tropin, Sergey Ilyuhin, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; A contour-based method for arbitrary document detection on a mobile device

Recognizing Bengali Word Images - A Zero-Shot Learning Perspective

Sukalpa Chanda, Daniël Arjen Willem Haitink, Prashant Kumar Prasad, Jochem Baas, Umapada Pal, Lambert Schomaker

Auto-TLDR; Zero-Shot Learning for Word Recognition in Bengali Script

An Integrated Approach of Deep Learning and Symbolic Analysis for Digital PDF Table Extraction

Mengshi Zhang, Daniel Perelman, Vu Le, Sumit Gulwani

Auto-TLDR; Deep Learning and Symbolic Reasoning for Unstructured PDF Table Extraction

Multimodal Side-Tuning for Document Classification

Stefano Zingaro, Giuseppe Lisanti, Maurizio Gabbrielli

Auto-TLDR; Side-tuning for Multimodal Document Classification

Multi-Task Learning Based Traditional Mongolian Words Recognition

Hongxi Wei, Hui Zhang, Jing Zhang, Kexin Liu

Auto-TLDR; Multi-task Learning for Mongolian Words Recognition

Trainable Spectrally Initializable Matrix Transformations in Convolutional Neural Networks

Michele Alberti, Angela Botros, Schuetz Narayan, Rolf Ingold, Marcus Liwicki, Mathias Seuret

Auto-TLDR; Trainable and Spectrally Initializable Matrix Transformations for Neural Networks

Automated Whiteboard Lecture Video Summarization by Content Region Detection and Representation

Bhargava Urala Kota, Alexander Stone, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; A Framework for Summarizing Whiteboard Lecture Videos Using Feature Representations of Handwritten Content Regions

Online Trajectory Recovery from Offline Handwritten Japanese Kanji Characters of Multiple Strokes

Hung Tuan Nguyen, Tsubasa Nakamura, Cuong Tuan Nguyen, Masaki Nakagawa

Auto-TLDR; Recovering Dynamic Online Trajectories from Offline Japanese Kanji Character Images for Handwritten Character Recognition

Equation Attention Relationship Network (EARN) : A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

Recognizing Multiple Text Sequences from an Image by Pure End-To-End Learning

Zhenlong Xu, Shuigeng Zhou, Fan Bai, Cheng Zhanzhan, Yi Niu, Shiliang Pu

Auto-TLDR; Pure End-to-End Learning for Multiple Text Sequences Recognition from Images

The DeepScoresV2 Dataset and Benchmark for Music Object Detection

Lukas Tuggener, Yvan Putra Satyawan, Alexander Pacha, Jürgen Schmidhuber, Thilo Stadelmann

Auto-TLDR; DeepScoresV2: an extended version of the DeepScores dataset for optical music recognition

Image-Based Table Cell Detection: A New Dataset and an Improved Detection Method

Dafeng Wei, Hongtao Lu, Yi Zhou, Kai Chen

Auto-TLDR; TableCell: A Semi-supervised Dataset for Table-wise Detection and Recognition

Feature Embedding Based Text Instance Grouping for Largely Spaced and Occluded Text Detection

Pan Gao, Qi Wan, Renwu Gao, Linlin Shen

Auto-TLDR; Text Instance Embedding Based Feature Embeddings for Multiple Text Instance Grouping

Motion Segmentation with Pairwise Matches and Unknown Number of Motions

Federica Arrigoni, Tomas Pajdla, Luca Magri

Auto-TLDR; Motion Segmentation using Multi-Model Fitting and Permutation Synchronization

Learning Neural Textual Representations for Citation Recommendation

Thanh Binh Kieu, Inigo Jauregi Unanue, Son Bao Pham, Xuan-Hieu Phan, M. Piccardi

Auto-TLDR; Sentence-BERT cascaded with Siamese and triplet networks for citation recommendation

Enhancing Handwritten Text Recognition with N-Gram Sequence Decomposition and Multitask Learning

Vasiliki Tassopoulou, George Retsinas, Petros Maragos

Auto-TLDR; Multi-task Learning for Handwritten Text Recognition

Force Banner for the Recognition of Spatial Relations

Robin Deléarde, Camille Kurtz, Laurent Wendling, Philippe Dejean

Auto-TLDR; Spatial Relation Recognition using Force Banners

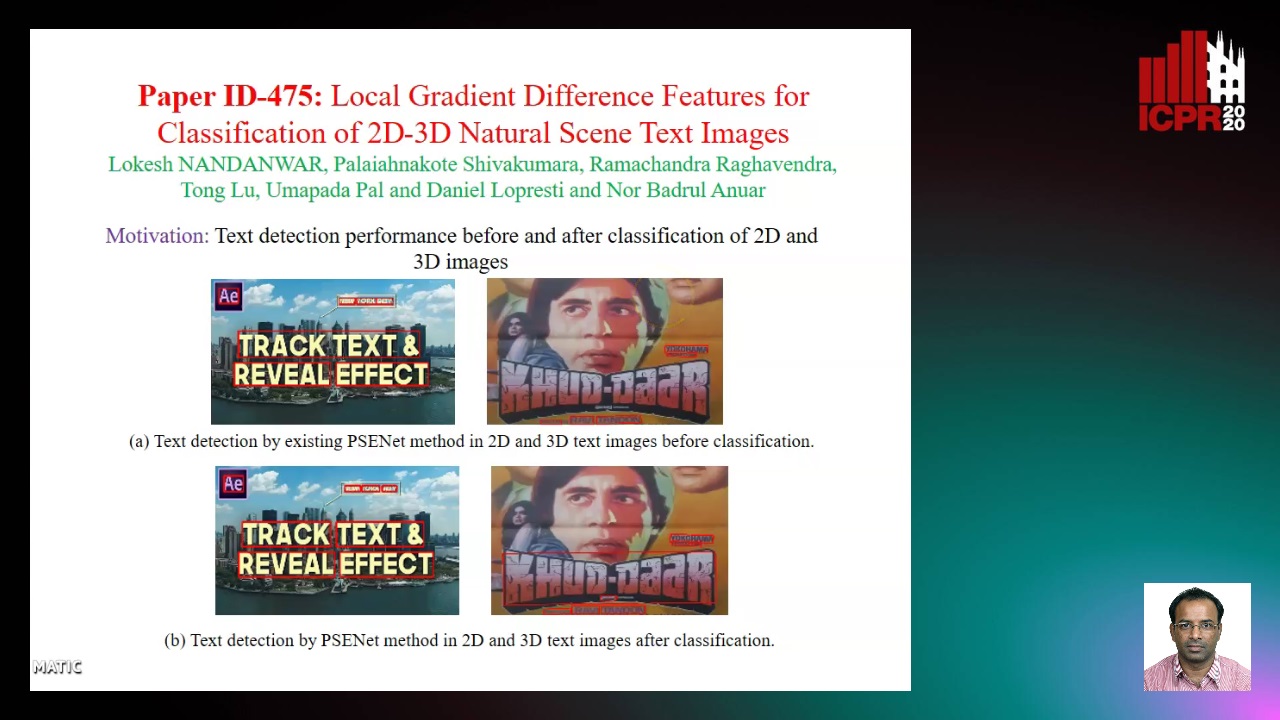

Local Gradient Difference Based Mass Features for Classification of 2D-3D Natural Scene Text Images

Lokesh Nandanwar, Shivakumara Palaiahnakote, Raghavendra Ramachandra, Tong Lu, Umapada Pal, Daniel Lopresti, Nor Badrul Anuar

Auto-TLDR; Classification of 2D and 3D Natural Scene Images Using COLD

ConvMath : A Convolutional Sequence Network for Mathematical Expression Recognition

Zuoyu Yan, Xiaode Zhang, Liangcai Gao, Ke Yuan, Zhi Tang

Auto-TLDR; Convolutional Sequence Modeling for Mathematical Expressions Recognition

Uncertainty Guided Recognition of Tiny Craters on the Moon

Thorsten Wilhelm, Christian Wöhler

Auto-TLDR; Accurately Detecting Tiny Craters in Remote Sensed Images Using Deep Neural Networks

CDeC-Net: Composite Deformable Cascade Network for Table Detection in Document Images

Madhav Agarwal, Ajoy Mondal, C. V. Jawahar

Auto-TLDR; CDeC-Net: An End-to-End Trainable Deep Network for Detecting Tables in Document Images

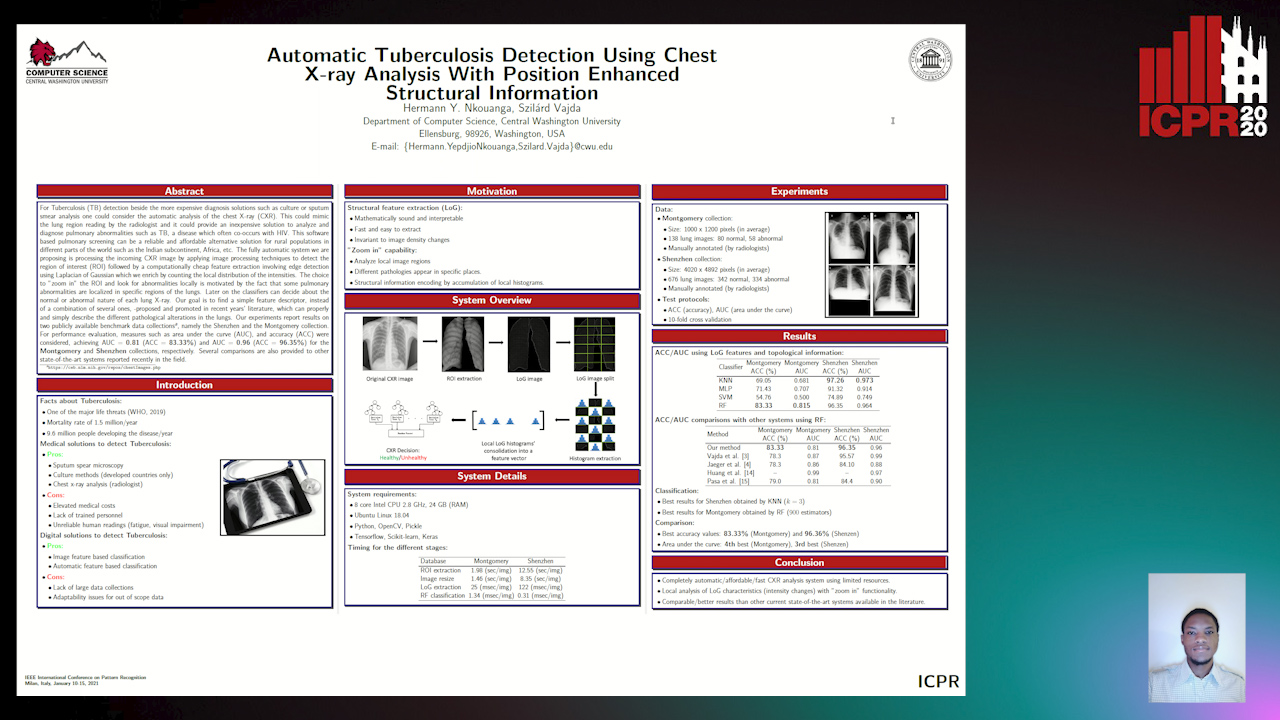

Automatic Tuberculosis Detection Using Chest X-Ray Analysis with Position Enhanced Structural Information

Hermann Jepdjio Nkouanga, Szilard Vajda

Auto-TLDR; Automatic Chest X-ray Screening for Tuberculosis in Rural Population using Localized Region of Interest

Text Recognition - Real World Data and Where to Find Them

Klára Janoušková, Lluis Gomez, Dimosthenis Karatzas, Jiri Matas

Auto-TLDR; Exploiting Weakly Annotated Images for Text Extraction

3D Pots Configuration System by Optimizing Over Geometric Constraints

Jae Eun Kim, Muhammad Zeeshan Arshad, Seong Jong Yoo, Je Hyeong Hong, Jinwook Kim, Young Min Kim

Auto-TLDR; Optimizing 3D Configurations for Stable Pottery Restoration from irregular and noisy evidence
