The Surprising Effectiveness of Linear Unsupervised Image-to-Image Translation

Auto-TLDR; linear encoder-decoder architectures for unsupervised image-to-image translation

Similar papers

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Abstract Slides Poster Similar

High Resolution Face Age Editing

Xu Yao, Gilles Puy, Alasdair Newson, Yann Gousseau, Pierre Hellier

Auto-TLDR; An Encoder-Decoder Architecture for Face Age editing on High Resolution Images

Abstract Slides Poster Similar

Detail Fusion GAN: High-Quality Translation for Unpaired Images with GAN-Based Data Augmentation

Ling Li, Yaochen Li, Chuan Wu, Hang Dong, Peilin Jiang, Fei Wang

Auto-TLDR; Data Augmentation with GAN-based Generative Adversarial Network

Abstract Slides Poster Similar

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

Abstract Slides Poster Similar

Cycle-Consistent Adversarial Networks and Fast Adaptive Bi-Dimensional Empirical Mode Decomposition for Style Transfer

Elissavet Batziou, Petros Alvanitopoulos, Konstantinos Ioannidis, Ioannis Patras, Stefanos Vrochidis, Ioannis Kompatsiaris

Auto-TLDR; FABEMD: Fast and Adaptive Bidimensional Empirical Mode Decomposition for Style Transfer on Images

Abstract Slides Poster Similar

Unsupervised Contrastive Photo-To-Caricature Translation Based on Auto-Distortion

Yuhe Ding, Xin Ma, Mandi Luo, Aihua Zheng, Ran He

Auto-TLDR; Unsupervised contrastive photo-to-caricature translation with style loss

Abstract Slides Poster Similar

Galaxy Image Translation with Semi-Supervised Noise-Reconstructed Generative Adversarial Networks

Qiufan Lin, Dominique Fouchez, Jérôme Pasquet

Auto-TLDR; Semi-supervised Image Translation with Generative Adversarial Networks Using Paired and Unpaired Images

Abstract Slides Poster Similar

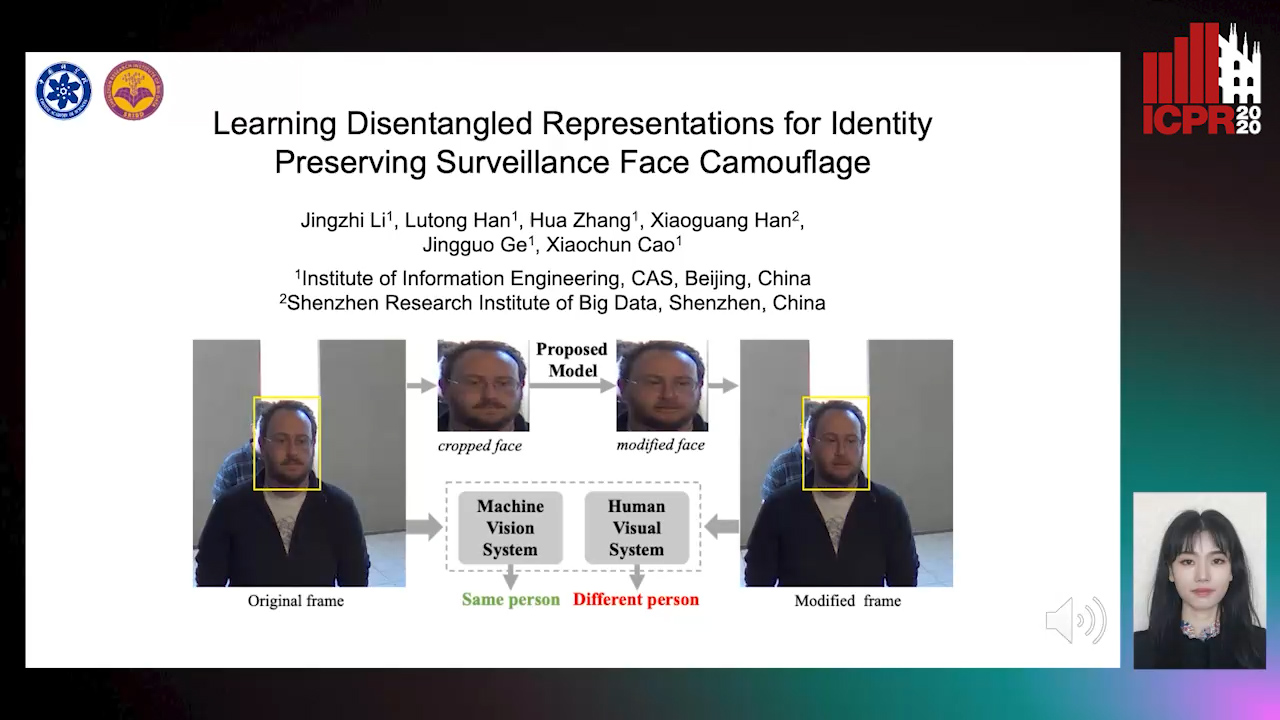

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

Multi-Domain Image-To-Image Translation with Adaptive Inference Graph

The Phuc Nguyen, Stéphane Lathuiliere, Elisa Ricci

Auto-TLDR; Adaptive Graph Structure for Multi-Domain Image-to-Image Translation

Abstract Slides Poster Similar

Continuous Learning of Face Attribute Synthesis

Ning Xin, Shaohui Xu, Fangzhe Nan, Xiaoli Dong, Weijun Li, Yuanzhou Yao

Auto-TLDR; Continuous Learning for Face Attribute Synthesis

Abstract Slides Poster Similar

Future Urban Scenes Generation through Vehicles Synthesis

Alessandro Simoni, Luca Bergamini, Andrea Palazzi, Simone Calderara, Rita Cucchiara

Auto-TLDR; Predicting the Future of an Urban Scene with a Novel View Synthesis Paradigm

Abstract Slides Poster Similar

Towards Artifacts-Free Image Defogging

Gabriele Graffieti, Davide Maltoni

Auto-TLDR; CurL-Defog: Learning Based Defogging with CycleGAN and HArD

AVAE: Adversarial Variational Auto Encoder

Antoine Plumerault, Hervé Le Borgne, Celine Hudelot

Auto-TLDR; Combining VAE and GAN for Realistic Image Generation

Abstract Slides Poster Similar

Augmented Cyclic Consistency Regularization for Unpaired Image-To-Image Translation

Takehiko Ohkawa, Naoto Inoue, Hirokatsu Kataoka, Nakamasa Inoue

Auto-TLDR; Augmented Cyclic Consistency Regularization for Unpaired Image-to-Image Translation

Abstract Slides Poster Similar

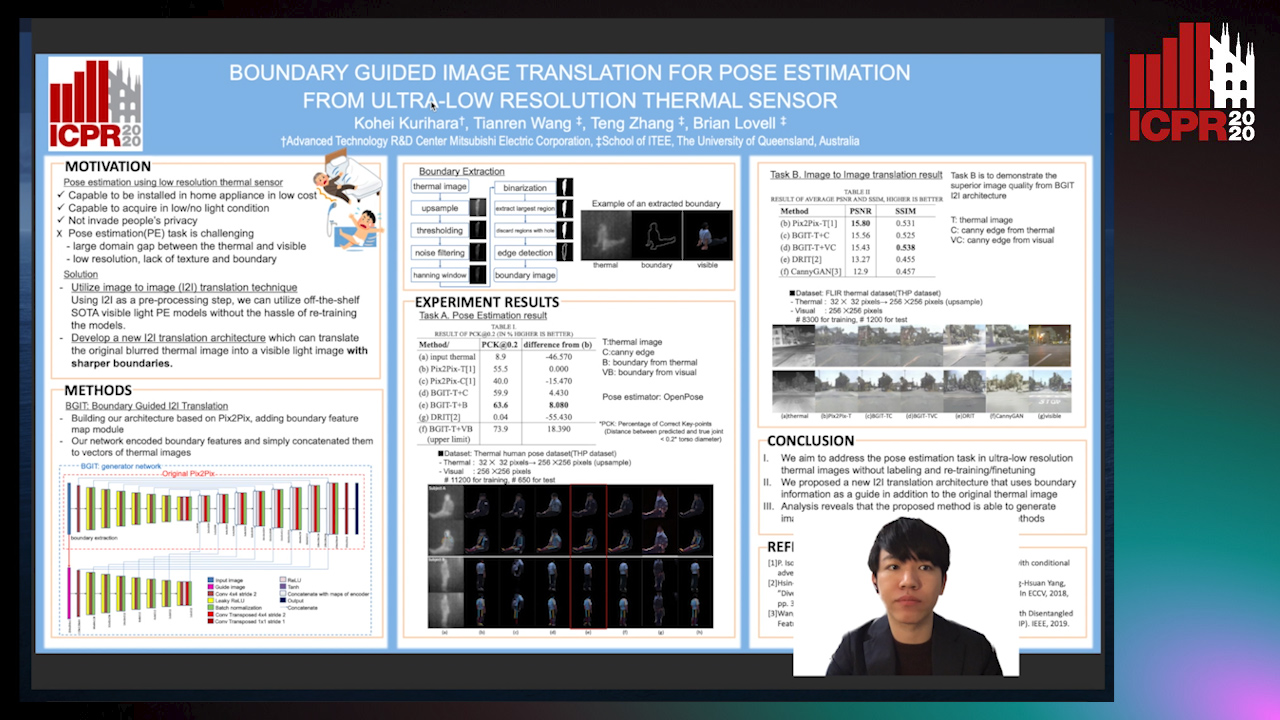

Boundary Guided Image Translation for Pose Estimation from Ultra-Low Resolution Thermal Sensor

Kohei Kurihara, Tianren Wang, Teng Zhang, Brian Carrington Lovell

Auto-TLDR; Pose Estimation on Low-Resolution Thermal Images Using Image-to-Image Translation Architecture

Abstract Slides Poster Similar

Pixel-based Facial Expression Synthesis

Auto-TLDR; pixel-based facial expression synthesis using GANs

Abstract Slides Poster Similar

Stylized-Colorization for Line Arts

Tzu-Ting Fang, Minh Duc Vo, Akihiro Sugimoto, Shang-Hong Lai

Auto-TLDR; Stylized-colorization using GAN-based End-to-End Model for Anime

Abstract Slides Poster Similar

Learning Low-Shot Generative Networks for Cross-Domain Data

Hsuan-Kai Kao, Cheng-Che Lee, Wei-Chen Chiu

Auto-TLDR; Learning Generators for Cross-Domain Data under Low-Shot Learning

Abstract Slides Poster Similar

Learning Image Inpainting from Incomplete Images using Self-Supervision

Sriram Yenamandra, Rohit Kumar Jena, Ansh Khurana, Suyash Awate

Auto-TLDR; Unsupervised Deep Neural Network for Semantic Image Inpainting

Abstract Slides Poster Similar

Mask-Based Style-Controlled Image Synthesis Using a Mask Style Encoder

Jaehyeong Cho, Wataru Shimoda, Keiji Yanai

Auto-TLDR; Style-controlled Image Synthesis from Semantic Segmentation masks using GANs

Abstract Slides Poster Similar

Image Inpainting with Contrastive Relation Network

Xiaoqiang Zhou, Junjie Li, Zilei Wang, Ran He, Tieniu Tan

Auto-TLDR; Two-Stage Inpainting with Graph-based Relation Network

DEN: Disentangling and Exchanging Network for Depth Completion

You-Feng Wu, Vu-Hoang Tran, Ting-Wei Chang, Wei-Chen Chiu, Ching-Chun Huang

Auto-TLDR; Disentangling and Exchanging Network for Depth Completion

Makeup Style Transfer on Low-Quality Images with Weighted Multi-Scale Attention

Daniel Organisciak, Edmond S. L. Ho, Shum Hubert P. H.

Auto-TLDR; Facial Makeup Style Transfer for Low-Resolution Images Using Multi-Scale Spatial Attention

Abstract Slides Poster Similar

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Abstract Slides Poster Similar

Free-Form Image Inpainting Via Contrastive Attention Network

Xin Ma, Xiaoqiang Zhou, Huaibo Huang, Zhenhua Chai, Xiaolin Wei, Ran He

Auto-TLDR; Self-supervised Siamese inference for image inpainting

SIDGAN: Single Image Dehazing without Paired Supervision

Pan Wei, Xin Wang, Lei Wang, Ji Xiang, Zihan Wang

Auto-TLDR; DehazeGAN: An End-to-End Generative Adversarial Network for Image Dehazing

Abstract Slides Poster Similar

Semi-Supervised Outdoor Image Generation Conditioned on Weather Signals

Sota Kawakami, Kei Okada, Naoko Nitta, Kazuaki Nakamura, Noboru Babaguchi

Auto-TLDR; Semi-supervised Generative Adversarial Network for Prediction of Weather Signals from Outdoor Images

Abstract Slides Poster Similar

A Simple Domain Shifting Network for Generating Low Quality Images

Guruprasad Hegde, Avinash Nittur Ramesh, Kanchana Vaishnavi Gandikota, Michael Möller, Roman Obermaisser

Auto-TLDR; Robotic Image Classification Using Quality degrading networks

Abstract Slides Poster Similar

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

Abstract Slides Poster Similar

Shape Consistent 2D Keypoint Estimation under Domain Shift

Levi Vasconcelos, Massimiliano Mancini, Davide Boscaini, Barbara Caputo, Elisa Ricci

Auto-TLDR; Deep Adaptation for Keypoint Prediction under Domain Shift

Abstract Slides Poster Similar

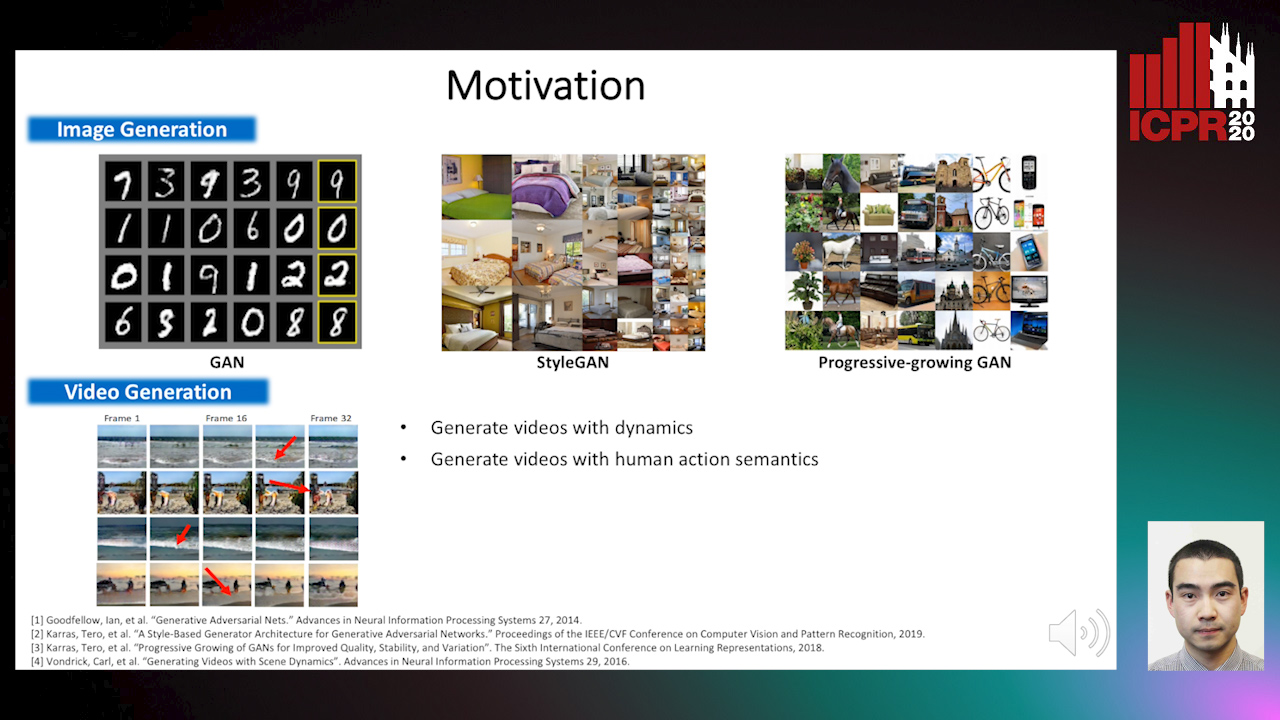

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

Abstract Slides Poster Similar

Efficient Shadow Detection and Removal Using Synthetic Data with Domain Adaptation

Rui Guo, Babajide Ayinde, Hao Sun

Auto-TLDR; Shadow Detection and Removal with Domain Adaptation and Synthetic Image Database

UCCTGAN: Unsupervised Clothing Color Transformation Generative Adversarial Network

Shuming Sun, Xiaoqiang Li, Jide Li

Auto-TLDR; An Unsupervised Clothing Color Transformation Generative Adversarial Network

Abstract Slides Poster Similar

Hybrid Approach for 3D Head Reconstruction: Using Neural Networks and Visual Geometry

Oussema Bouafif, Bogdan Khomutenko, Mohammed Daoudi

Auto-TLDR; Recovering 3D Head Geometry from a Single Image using Deep Learning and Geometric Techniques

Abstract Slides Poster Similar

Unsupervised Domain Adaptation for Object Detection in Cultural Sites

Giovanni Pasqualino, Antonino Furnari, Giovanni Maria Farinella

Auto-TLDR; Unsupervised Domain Adaptation for Object Detection in Cultural Sites

Adaptive Image Compression Using GAN Based Semantic-Perceptual Residual Compensation

Ruojing Wang, Zitang Sun, Sei-Ichiro Kamata, Weili Chen

Auto-TLDR; Adaptive Image Compression using GAN based Semantic-Perceptual Residual Compensation

Abstract Slides Poster Similar

Unsupervised Learning of Landmarks Based on Inter-Intra Subject Consistencies

Weijian Li, Haofu Liao, Shun Miao, Le Lu, Jiebo Luo

Auto-TLDR; Unsupervised Learning for Facial Landmark Discovery using Inter-subject Landmark consistencies

A NoGAN Approach for Image and Video Restoration and Compression Artifact Removal

Mameli Filippo, Marco Bertini, Leonardo Galteri, Alberto Del Bimbo

Auto-TLDR; Deep Neural Network for Image and Video Compression Artifact Removal and Restoration

N2D: (Not Too) Deep Clustering Via Clustering the Local Manifold of an Autoencoded Embedding

Ryan Mcconville, Raul Santos-Rodriguez, Robert Piechocki, Ian Craddock

Auto-TLDR; Local Manifold Learning for Deep Clustering on Autoencoded Embeddings

LFIEM: Lightweight Filter-Based Image Enhancement Model

Oktai Tatanov, Aleksei Samarin

Auto-TLDR; Image Retouching Using Semi-supervised Learning for Mobile Devices

Abstract Slides Poster Similar

Exemplar Guided Cross-Spectral Face Hallucination Via Mutual Information Disentanglement

Haoxue Wu, Huaibo Huang, Aijing Yu, Jie Cao, Zhen Lei, Ran He

Auto-TLDR; Exemplar Guided Cross-Spectral Face Hallucination with Structural Representation Learning

Abstract Slides Poster Similar

Robust Pedestrian Detection in Thermal Imagery Using Synthesized Images

My Kieu, Lorenzo Berlincioni, Leonardo Galteri, Marco Bertini, Andrew Bagdanov, Alberto Del Bimbo

Auto-TLDR; Improving Pedestrian Detection in the thermal domain using Generative Adversarial Network

Abstract Slides Poster Similar

Feature Point Matching in Cross-Spectral Images with Cycle Consistency Learning

Ryosuke Furuta, Naoaki Noguchi, Xueting Wang, Toshihiko Yamasaki

Auto-TLDR; Unsupervised Learning for General Feature Point Matching in Cross-Spectral Settings

Abstract Slides Poster Similar

MBD-GAN: Model-Based Image Deblurring with a Generative Adversarial Network

Auto-TLDR; Model-Based Deblurring GAN for Inverse Imaging

Abstract Slides Poster Similar

SATGAN: Augmenting Age Biased Dataset for Cross-Age Face Recognition

Wenshuang Liu, Wenting Chen, Yuanlue Zhu, Linlin Shen

Auto-TLDR; SATGAN: Stable Age Translation GAN for Cross-Age Face Recognition

Abstract Slides Poster Similar

Boosting High-Level Vision with Joint Compression Artifacts Reduction and Super-Resolution

Xiaoyu Xiang, Qian Lin, Jan Allebach

Auto-TLDR; A Context-Aware Joint CAR and SR Neural Network for High-Resolution Text Recognition and Face Detection

Abstract Slides Poster Similar

A Joint Representation Learning and Feature Modeling Approach for One-Class Recognition

Pramuditha Perera, Vishal Patel

Auto-TLDR; Combining Generative Features and One-Class Classification for Effective One-class Recognition

Abstract Slides Poster Similar

Deep Realistic Novel View Generation for City-Scale Aerial Images

Koundinya Nouduri, Ke Gao, Joshua Fraser, Shizeng Yao, Hadi Aliakbarpour, Filiz Bunyak, Kannappan Palaniappan

Auto-TLDR; End-to-End 3D Voxel Renderer for Multi-View Stereo Data Generation and Evaluation

Abstract Slides Poster Similar