Quantization in Relative Gradient Angle Domain for Building Polygon Estimation

Yuhao Chen, Yifan Wu, Linlin Xu, Alexander Wong

Auto-TLDR; Relative Gradient Angle Transform for Building Footprint Extraction from Remote Sensing Data

Similar papers

Machine-Learned Regularization and Polygonization of Building Segmentation Masks

Stefano Zorzi, Ksenia Bittner, Friedrich Fraundorfer

Auto-TLDR; Automatic Regularization and Polygonization of Building Segmentation masks using Generative Adversarial Network

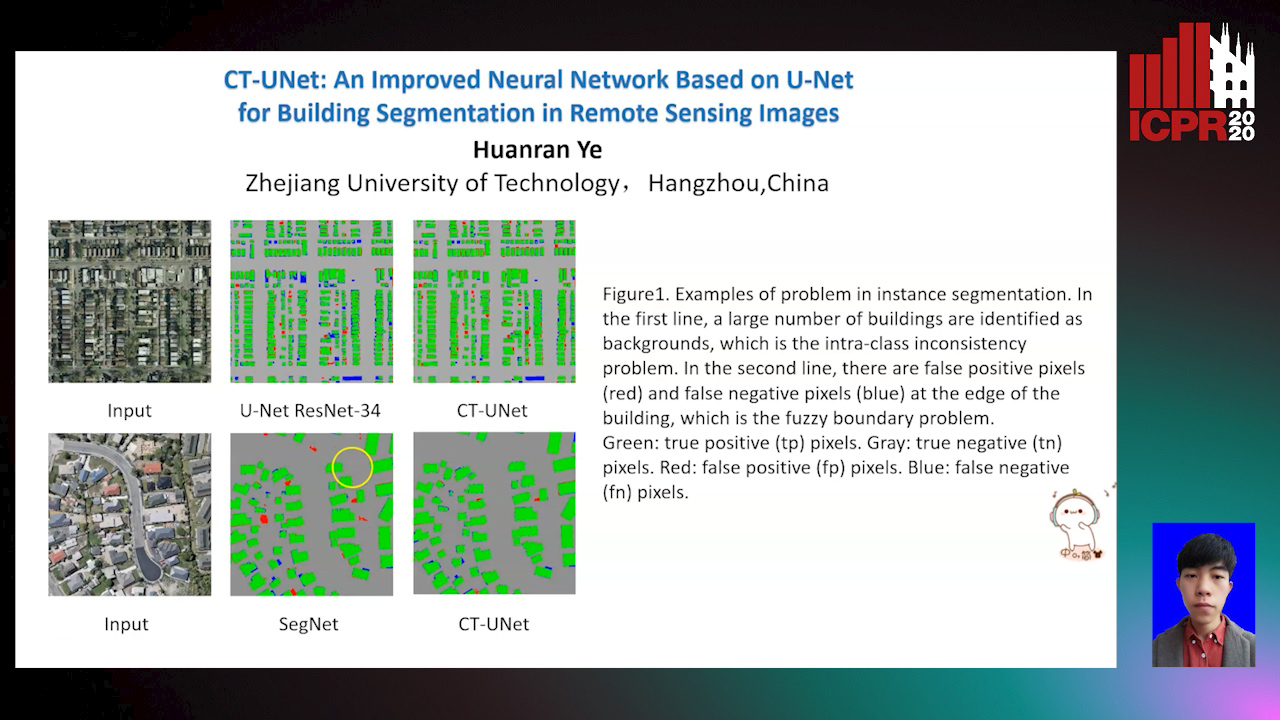

CT-UNet: An Improved Neural Network Based on U-Net for Building Segmentation in Remote Sensing Images

Huanran Ye, Sheng Liu, Kun Jin, Haohao Cheng

Auto-TLDR; Context-Transfer-UNet: A UNet-based Network for Building Segmentation in Remote Sensing Images

Global-Local Attention Network for Semantic Segmentation in Aerial Images

Minglong Li, Lianlei Shan, Weiqiang Wang

Auto-TLDR; GLANet: Global-Local Attention Network for Semantic Segmentation

RescueNet: Joint Building Segmentation and Damage Assessment from Satellite Imagery

Auto-TLDR; RescueNet: End-to-End Building Segmentation and Damage Classification for Humanitarian Aid and Disaster Response

3D Semantic Labeling of Photogrammetry Meshes Based on Active Learning

Mengqi Rong, Shuhan Shen, Zhanyi Hu

Auto-TLDR; 3D Semantic Expression of Urban Scenes Based on Active Learning

Walk the Lines: Object Contour Tracing CNN for Contour Completion of Ships

Auto-TLDR; Walk the Lines: A Convolutional Neural Network trained to follow object contours

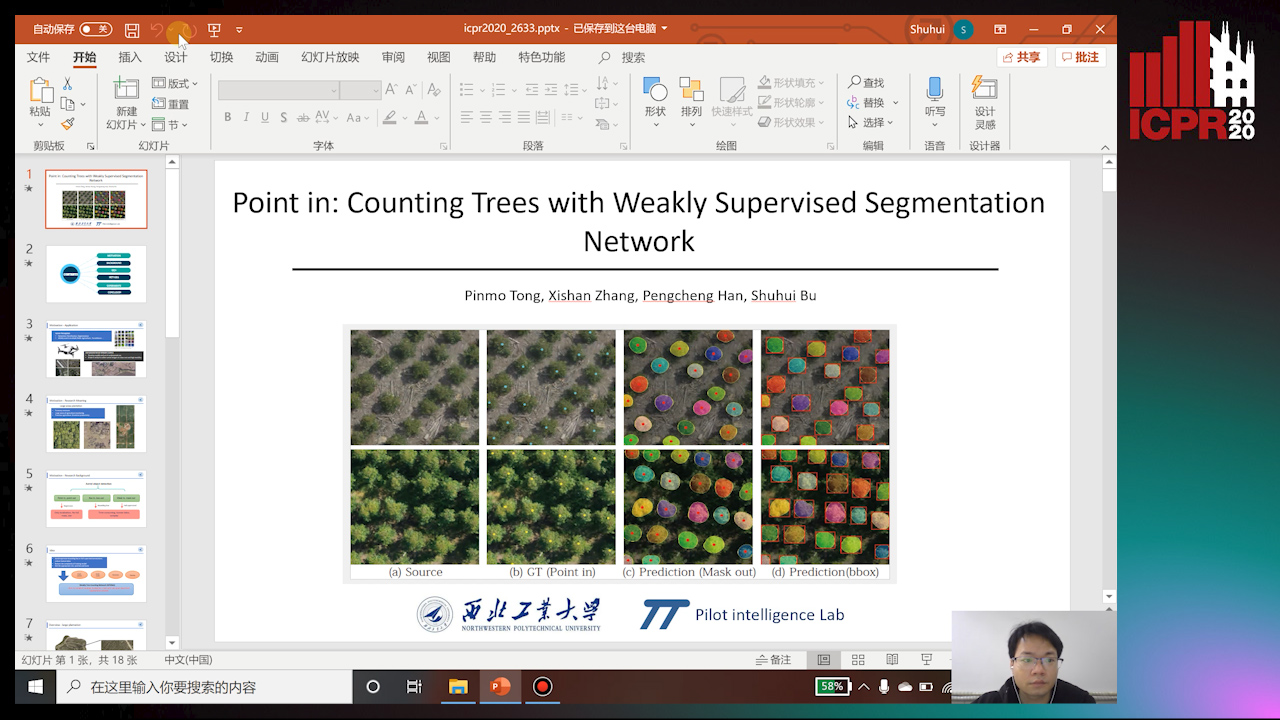

Point In: Counting Trees with Weakly Supervised Segmentation Network

Pinmo Tong, Shuhui Bu, Pengcheng Han

Auto-TLDR; Weakly Supervised Tree Counting Using a Deep Segmentation Network with Localization and Mask Prediction

Triplet-Path Dilated Network for Detection and Segmentation of General Pathological Images

Jiaqi Luo, Zhicheng Zhao, Fei Su, Limei Guo

Auto-TLDR; Triplet-path Network for One-Stage Object Detection and Segmentation in Pathological Images

End-To-End Deep Learning Methods for Automated Damage Detection in Extreme Events at Various Scales

Yongsheng Bai, Alper Yilmaz, Halil Sezen

Auto-TLDR; Robust Mask R-CNN for Crack Detection in Extreme Events

Derivation of Geometrically and Semantically Annotated UAV Datasets at Large Scales from 3D City Models

Sidi Wu, Lukas Liebel, Marco Körner

Auto-TLDR; Large-Scale Dataset of Synthetic UAV Imagery for Geometric and Semantic Annotation

Revisiting Sequence-To-Sequence Video Object Segmentation with Multi-Task Loss and Skip-Memory

Fatemeh Azimi, Benjamin Bischke, Sebastian Palacio, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Sequence-to-Sequence Learning for Video Object Segmentation

UHRSNet: A Semantic Segmentation Network Specifically for Ultra-High-Resolution Images

Auto-TLDR; Ultra-High-Resolution Segmentation with Local and Global Feature Fusion

Learning to Segment Clustered Amoeboid Cells from Brightfield Microscopy Via Multi-Task Learning with Adaptive Weight Selection

Rituparna Sarkar, Suvadip Mukherjee, Elisabeth Labruyere, Jean-Christophe Olivo-Marin

Auto-TLDR; Supervised Cell Segmentation from Microscopy Images Using Multi-Task Learning

Approach for Document Detection by Contours and Contrasts

Daniil Tropin, Sergey Ilyuhin, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; A contour-based method for arbitrary document detection on a mobile device

Enhancing Deep Semantic Segmentation of RGB-D Data with Entangled Forests

Matteo Terreran, Elia Bonetto, Stefano Ghidoni

Auto-TLDR; FuseNet: A Lighter Deep Learning Model for Semantic Segmentation

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

Deep Realistic Novel View Generation for City-Scale Aerial Images

Koundinya Nouduri, Ke Gao, Joshua Fraser, Shizeng Yao, Hadi Aliakbarpour, Filiz Bunyak, Kannappan Palaniappan

Auto-TLDR; End-to-End 3D Voxel Renderer for Multi-View Stereo Data Generation and Evaluation

FourierNet: Compact Mask Representation for Instance Segmentation Using Differentiable Shape Decoders

Hamd Ul Moqeet Riaz, Nuri Benbarka, Andreas Zell

Auto-TLDR; FourierNet: A Single shot, anchor-free, fully convolutional instance segmentation method that predicts a shape vector

Holistic Grid Fusion Based Stop Line Estimation

Runsheng Xu, Faezeh Tafazzoli, Li Zhang, Timo Rehfeld, Gunther Krehl, Arunava Seal

Auto-TLDR; Fused Multi-Sensory Data for Stop Lines Detection in Intersection Scenarios

BiLuNet: A Multi-Path Network for Semantic Segmentation on X-Ray Images

Van Luan Tran, Huei-Yung Lin, Rachel Liu, Chun-Han Tseng

Auto-TLDR; BiLuNet: Multi-path Convolutional Neural Network for Semantic Segmentation of Lumbar vertebrae, sacrum,

Attention Based Coupled Framework for Road and Pothole Segmentation

Shaik Masihullah, Ritu Garg, Prerana Mukherjee, Anupama Ray

Auto-TLDR; Few Shot Learning for Road and Pothole Segmentation on KITTI and IDD

Aerial Road Segmentation in the Presence of Topological Label Noise

Corentin Henry, Friedrich Fraundorfer, Eleonora Vig

Auto-TLDR; Improving Road Segmentation with Noise-Aware U-Nets for Fine-Grained Topology delineation

Transitional Asymmetric Non-Local Neural Networks for Real-World Dirt Road Segmentation

Auto-TLDR; Transitional Asymmetric Non-Local Neural Networks for Semantic Segmentation on Dirt Roads

Generic Document Image Dewarping by Probabilistic Discretization of Vanishing Points

Gilles Simon, Salvatore Tabbone

Auto-TLDR; Robust Document Dewarping using vanishing points

EAGLE: Large-Scale Vehicle Detection Dataset in Real-World Scenarios Using Aerial Imagery

Seyed Majid Azimi, Reza Bahmanyar, Corentin Henry, Franz Kurz

Auto-TLDR; EAGLE: A Large-Scale Dataset for Multi-class Vehicle Detection with Object Orientation Information in Airborne Imagery

A Fine-Grained Dataset and Its Efficient Semantic Segmentation for Unstructured Driving Scenarios

Kai Andreas Metzger, Peter Mortimer, Hans J "Joe" Wuensche

Auto-TLDR; TAS500: A Semantic Segmentation Dataset for Autonomous Driving in Unstructured Environments

Automatically Gather Address Specific Dwelling Images Using Google Street View

Auto-TLDR; Automatic Address Specific Dwelling Image Collection Using Google Street View Data

Semantic Segmentation Refinement Using Entropy and Boundary-guided Monte Carlo Sampling and Directed Regional Search

Zitang Sun, Sei-Ichiro Kamata, Ruojing Wang, Weili Chen

Auto-TLDR; Directed Region Search and Refinement for Semantic Segmentation

Video Semantic Segmentation Using Deep Multi-View Representation Learning

Akrem Sellami, Salvatore Tabbone

Auto-TLDR; Deep Multi-view Representation Learning for Video Object Segmentation

FC-DCNN: A Densely Connected Neural Network for Stereo Estimation

Dominik Hirner, Friedrich Fraundorfer

Auto-TLDR; FC-DCNN: A Lightweight Network for Stereo Estimation

CASNet: Common Attribute Support Network for Image Instance and Panoptic Segmentation

Xiaolong Liu, Yuqing Hou, Anbang Yao, Yurong Chen, Keqiang Li

Auto-TLDR; Common Attribute Support Network for instance segmentation and panoptic segmentation

DE-Net: Dilated Encoder Network for Automated Tongue Segmentation

Hui Tang, Bin Wang, Jun Zhou, Yongsheng Gao

Auto-TLDR; Automated Tongue Image Segmentation using De-Net

Dynamic Guided Network for Monocular Depth Estimation

Xiaoxia Xing, Yinghao Cai, Yiping Yang, Dayong Wen

Auto-TLDR; DGNet: Dynamic Guidance Upsampling for Self-attention-Decoding for Monocular Depth Estimation

ID Documents Matching and Localization with Multi-Hypothesis Constraints

Guillaume Chiron, Nabil Ghanmi, Ahmad Montaser Awal

Auto-TLDR; Identity Document Localization in the Wild Using Multi-hypothesis Exploration

PSDNet: A Balanced Architecture of Accuracy and Parameters for Semantic Segmentation

Auto-TLDR; Pyramid Pooling Module with SE1Cblock and D2SUpsample Network (PSDNet)

One Step Clustering Based on A-Contrario Framework for Detection of Alterations in Historical Violins

Alireza Rezaei, Sylvie Le Hégarat-Mascle, Emanuel Aldea, Piercarlo Dondi, Marco Malagodi

Auto-TLDR; A-Contrario Clustering for the Detection of Altered Violins using UVIFL Images

A Novel Region of Interest Extraction Layer for Instance Segmentation

Leonardo Rossi, Akbar Karimi, Andrea Prati

Auto-TLDR; Generic RoI Extractor for Two-Stage Neural Network for Instance Segmentation

Learning Defects in Old Movies from Manually Assisted Restoration

Arthur Renaudeau, Travis Seng, Axel Carlier, Jean-Denis Durou, Fabien Pierre, Francois Lauze, Jean-François Aujol

Auto-TLDR; U-Net: Detecting Defects in Old Movies by Inpainting Techniques

Multi-scale Processing of Noisy Images using Edge Preservation Losses

Auto-TLDR; Multi-scale U-net for Noisy Image Detection and Denoising

Edge-Aware Monocular Dense Depth Estimation with Morphology

Zhi Li, Xiaoyang Zhu, Haitao Yu, Qi Zhang, Yongshi Jiang

Auto-TLDR; Spatio-Temporally Smooth Dense Depth Maps Using Only a CPU

GSTO: Gated Scale-Transfer Operation for Multi-Scale Feature Learning in Semantic Segmentation

Zhuoying Wang, Yongtao Wang, Zhi Tang, Yangyan Li, Ying Chen, Haibin Ling, Weisi Lin

Auto-TLDR; Gated Scale-Transfer Operation for Semantic Segmentation

User-Independent Gaze Estimation by Extracting Pupil Parameter and Its Mapping to the Gaze Angle

Auto-TLDR; Gaze Point Estimation using Pupil Shape for Generalization

3D Pots Configuration System by Optimizing Over Geometric Constraints

Jae Eun Kim, Muhammad Zeeshan Arshad, Seong Jong Yoo, Je Hyeong Hong, Jinwook Kim, Young Min Kim

Auto-TLDR; Optimizing 3D Configurations for Stable Pottery Restoration from irregular and noisy evidence

Encoder-Decoder Based Convolutional Neural Networks with Multi-Scale-Aware Modules for Crowd Counting

Pongpisit Thanasutives, Ken-Ichi Fukui, Masayuki Numao, Boonserm Kijsirikul

Auto-TLDR; M-SFANet and M-SegNet for Crowd Counting Using Multi-Scale Fusion Networks

Enhanced Feature Pyramid Network for Semantic Segmentation

Mucong Ye, Ouyang Jinpeng, Ge Chen, Jing Zhang, Xiaogang Yu

Auto-TLDR; EFPN: Enhanced Feature Pyramid Network for Semantic Segmentation

Visual Localization for Autonomous Driving: Mapping the Accurate Location in the City Maze

Dongfang Liu, Yiming Cui, Xiaolei Guo, Wei Ding, Baijian Yang, Yingjie Chen

Auto-TLDR; Feature Voting for Robust Visual Localization in Urban Settings

Directional Graph Networks with Hard Weight Assignments

Miguel Dominguez, Raymond Ptucha

Auto-TLDR; Hard Directional Graph Networks for Point Cloud Analysis

Detection and Correspondence Matching of Corneal Reflections for Eye Tracking Using Deep Learning

Soumil Chugh, Braiden Brousseau, Jonathan Rose, Moshe Eizenman

Auto-TLDR; A Fully Convolutional Neural Network for Corneal Reflection Detection and Matching in Extended Reality Eye Tracking Systems
