Walk the Lines: Object Contour Tracing CNN for Contour Completion of Ships

Auto-TLDR; Walk the Lines: A Convolutional Neural Network trained to follow object contours

Similar papers

Quantization in Relative Gradient Angle Domain for Building Polygon Estimation

Yuhao Chen, Yifan Wu, Linlin Xu, Alexander Wong

Auto-TLDR; Relative Gradient Angle Transform for Building Footprint Extraction from Remote Sensing Data

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

EdgeNet: Semantic Scene Completion from a Single RGB-D Image

Aloisio Dourado, Teofilo De Campos, Adrian Hilton, Hansung Kim

Auto-TLDR; Semantic Scene Completion using 3D Depth and RGB Information

RLST: A Reinforcement Learning Approach to Scene Text Detection Refinement

Xuan Peng, Zheng Huang, Kai Chen, Jie Guo, Weidong Qiu

Auto-TLDR; Saccadic Eye Movements and Peripheral Vision for Scene Text Detection using Reinforcement Learning

IPT: A Dataset for Identity Preserved Tracking in Closed Domains

Thomas Heitzinger, Martin Kampel

Auto-TLDR; Identity Preserved Tracking Using Depth Data for Privacy

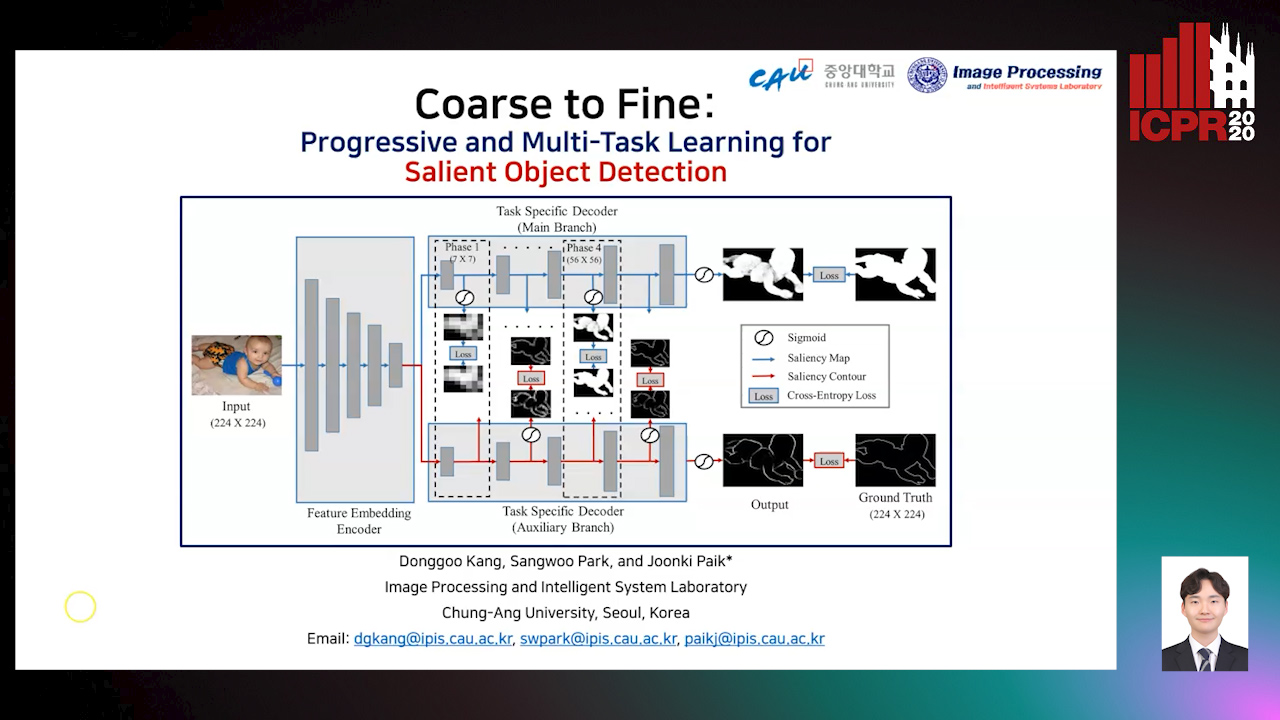

Coarse to Fine: Progressive and Multi-Task Learning for Salient Object Detection

Dong-Goo Kang, Sangwoo Park, Joonki Paik

Auto-TLDR; Progressive and multi-task learning scheme for salient object detection

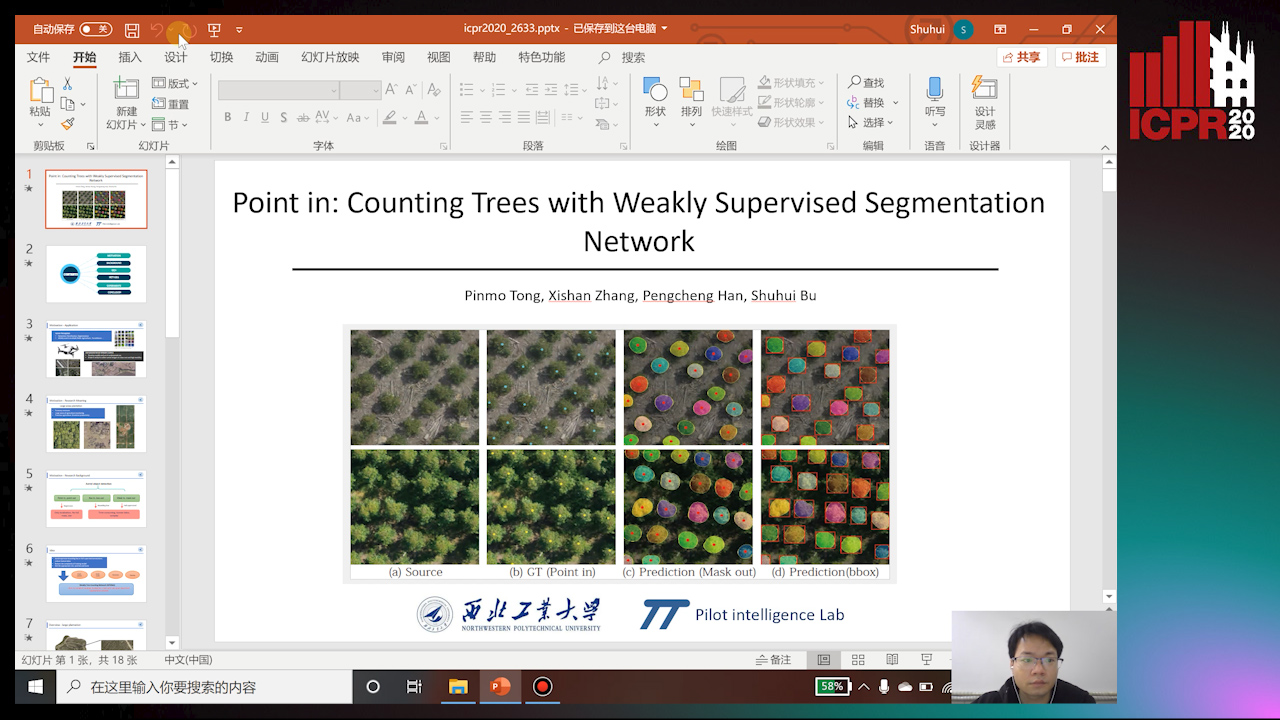

Point In: Counting Trees with Weakly Supervised Segmentation Network

Pinmo Tong, Shuhui Bu, Pengcheng Han

Auto-TLDR; Weakly Supervised Tree Counting using Deep Segmentation Network with Localization and Mask Prediction

Early Wildfire Smoke Detection in Videos

Taanya Gupta, Hengyue Liu, Bir Bhanu

Auto-TLDR; Semi-supervised Spatio-Temporal Video Object Segmentation for Automatic Detection of Smoke in Videos during Forest Fire

Approach for Document Detection by Contours and Contrasts

Daniil Tropin, Sergey Ilyuhin, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; A contour-based method for arbitrary document detection on a mobile device

Domain Siamese CNNs for Sparse Multispectral Disparity Estimation

David-Alexandre Beaupre, Guillaume-Alexandre Bilodeau

Auto-TLDR; Multispectral Disparity Estimation between Thermal and Visible Images using Deep Neural Networks

A Fast and Accurate Object Detector for Handwritten Digit String Recognition

Jun Guo, Wenjing Wei, Yifeng Ma, Cong Peng

Auto-TLDR; ChipNet: An anchor-free object detector for handwritten digit string recognition

Directional Graph Networks with Hard Weight Assignments

Miguel Dominguez, Raymond Ptucha

Auto-TLDR; Hard Directional Graph Networks for Point Cloud Analysis

Improving Robotic Grasping on Monocular Images Via Multi-Task Learning and Positional Loss

William Prew, Toby Breckon, Magnus Bordewich, Ulrik Beierholm

Auto-TLDR; Improving grasping performance from monocular colour images in an end-to-end CNN architecture with multi-task learning

Recovery of 2D and 3D Layout Information through an Advanced Image Stitching Algorithm Using Scanning Electron Microscope Images

Aayush Singla, Bernhard Lippmann, Helmut Graeb

Auto-TLDR; Image Stitching for True Geometrical Layout Recovery in Nanoscale Dimension

Global-Local Attention Network for Semantic Segmentation in Aerial Images

Minglong Li, Lianlei Shan, Weiqiang Wang

Auto-TLDR; GLANet: Global-Local Attention Network for Semantic Segmentation

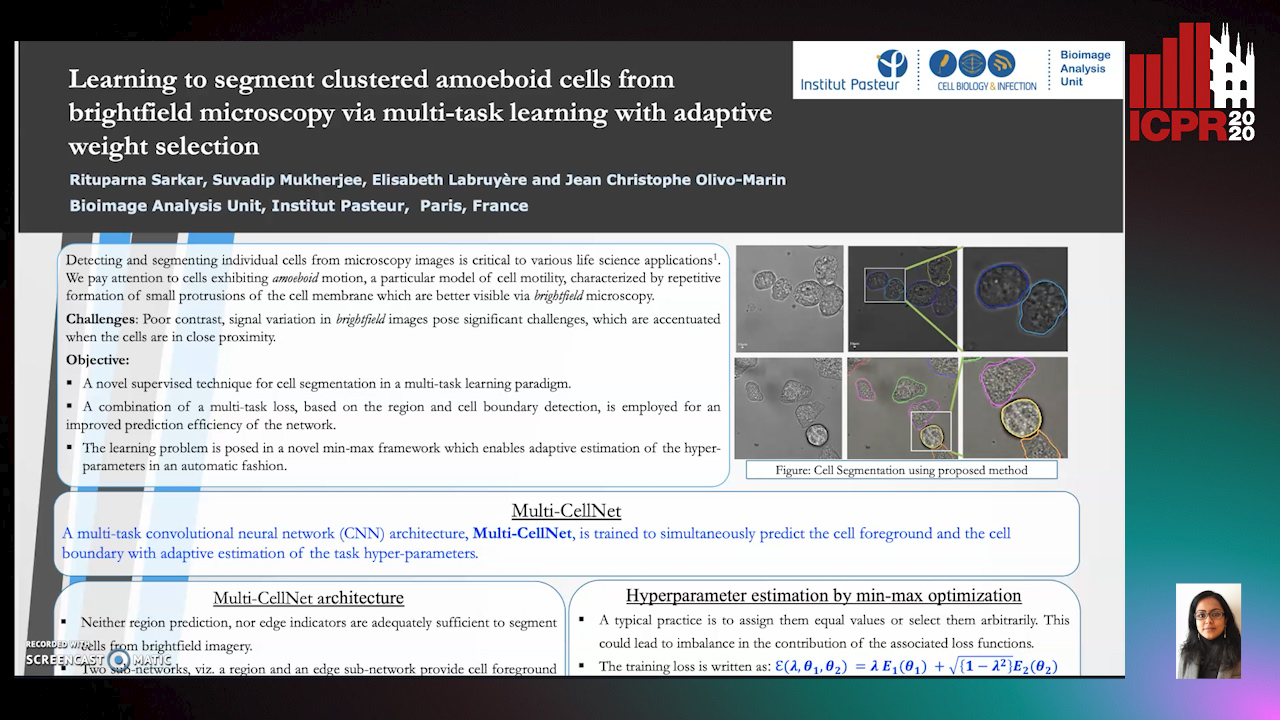

Learning to Segment Clustered Amoeboid Cells from Brightfield Microscopy Via Multi-Task Learning with Adaptive Weight Selection

Rituparna Sarkar, Suvadip Mukherjee, Elisabeth Labruyere, Jean-Christophe Olivo-Marin

Auto-TLDR; Supervised Cell Segmentation from Microscopy Images using Multi-Task Learning

Automatically Gather Address Specific Dwelling Images Using Google Street View

Auto-TLDR; Automatic Address Specific Dwelling Image Collection Using Google Street View Data

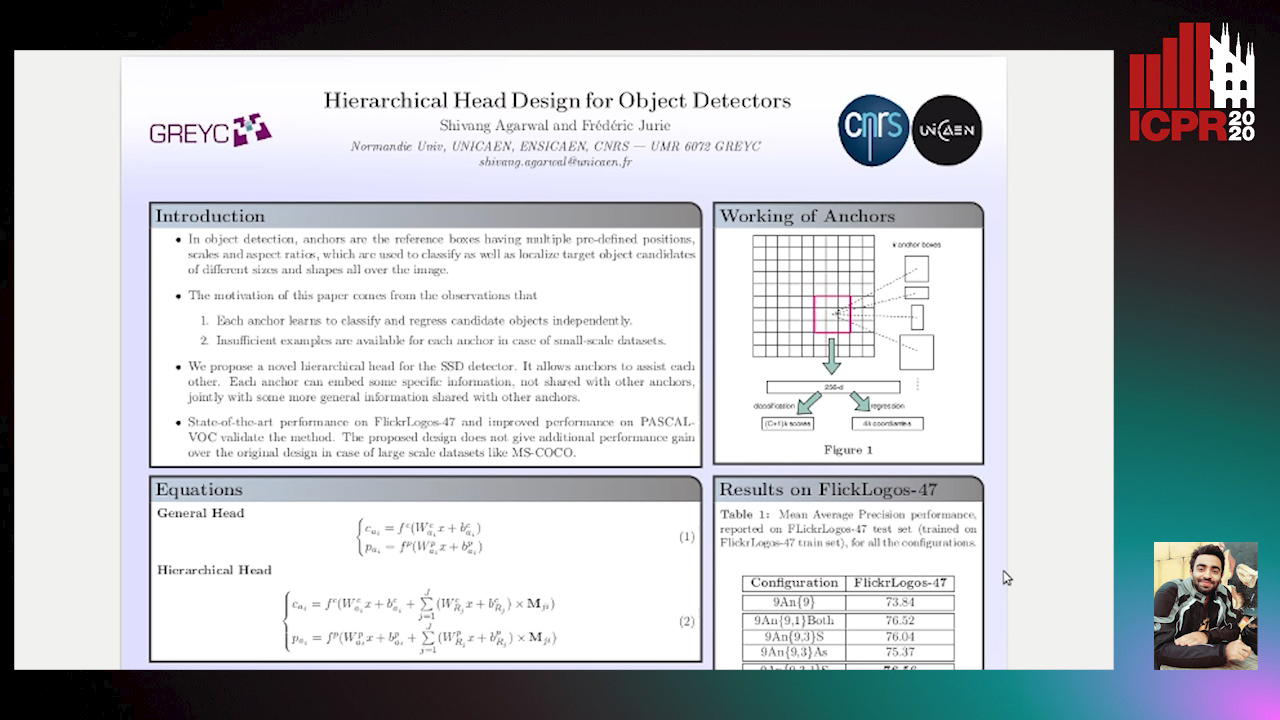

Hierarchical Head Design for Object Detectors

Shivang Agarwal, Frederic Jurie

Auto-TLDR; Hierarchical Anchor for SSD Detector

Handwritten Digit String Recognition Using Deep Autoencoder Based Segmentation and ResNet Based Recognition Approach

Anuran Chakraborty, Rajonya De, Samir Malakar, Friedhelm Schwenker, Ram Sarkar

Auto-TLDR; Handwritten Digit Strings Recognition Using Residual Network and Deep Autoencoder Based Segmentation

Machine-Learned Regularization and Polygonization of Building Segmentation Masks

Stefano Zorzi, Ksenia Bittner, Friedrich Fraundorfer

Auto-TLDR; Automatic Regularization and Polygonization of Building Segmentation masks using Generative Adversarial Network

Uncertainty Guided Recognition of Tiny Craters on the Moon

Thorsten Wilhelm, Christian Wöhler

Auto-TLDR; Accurately Detecting Tiny Craters in Remote Sensed Images Using Deep Neural Networks

Multi-View Object Detection Using Epipolar Constraints within Cluttered X-Ray Security Imagery

Brian Kostadinov Shalon Isaac-Medina, Chris G. Willcocks, Toby Breckon

Auto-TLDR; Exploiting Epipolar Constraints for Multi-View Object Detection in X-ray Security Images

Effective Deployment of CNNs for 3DoF Pose Estimation and Grasping in Industrial Settings

Daniele De Gregorio, Riccardo Zanella, Gianluca Palli, Luigi Di Stefano

Auto-TLDR; Automated Deep Learning for Robotic Grasping Applications

Lane Detection Based on Object Detection and Image-To-Image Translation

Hiroyuki Komori, Kazunori Onoguchi

Auto-TLDR; Lane Marking and Road Boundary Detection from Monocular Camera Images using Inverse Perspective Mapping

Superpixel-Based Refinement for Object Proposal Generation

Christian Wilms, Simone Frintrop

Auto-TLDR; Superpixel-based Refinement of AttentionMask for Object Segmentation

A Fine-Grained Dataset and Its Efficient Semantic Segmentation for Unstructured Driving Scenarios

Kai Andreas Metzger, Peter Mortimer, Hans J "Joe" Wuensche

Auto-TLDR; TAS500: A Semantic Segmentation Dataset for Autonomous Driving in Unstructured Environments

Learning Defects in Old Movies from Manually Assisted Restoration

Arthur Renaudeau, Travis Seng, Axel Carlier, Jean-Denis Durou, Fabien Pierre, Francois Lauze, Jean-François Aujol

Auto-TLDR; U-Net: Detecting Defects in Old Movies by Inpainting Techniques

Object Segmentation Tracking from Generic Video Cues

Amirhossein Kardoost, Sabine Müller, Joachim Weickert, Margret Keuper

Auto-TLDR; A Light-Weight Variational Framework for Video Object Segmentation

Semantic Segmentation Refinement Using Entropy and Boundary-guided Monte Carlo Sampling and Directed Regional Search

Zitang Sun, Sei-Ichiro Kamata, Ruojing Wang, Weili Chen

Auto-TLDR; Directed Region Search and Refinement for Semantic Segmentation

Siamese Dynamic Mask Estimation Network for Fast Video Object Segmentation

Dexiang Hong, Guorong Li, Kai Xu, Li Su, Qingming Huang

Auto-TLDR; Siamese Dynamic Mask Estimation for Video Object Segmentation

FC-DCNN: A Densely Connected Neural Network for Stereo Estimation

Dominik Hirner, Friedrich Fraundorfer

Auto-TLDR; FC-DCNN: A Lightweight Network for Stereo Estimation

Enhancing Semantic Segmentation of Aerial Images with Inhibitory Neurons

Ihsan Ullah, Sean Reilly, Michael Madden

Auto-TLDR; Lateral Inhibition in Deep Neural Networks for Object Recognition and Semantic Segmentation

BP-Net: Deep Learning-Based Superpixel Segmentation for RGB-D Image

Bin Zhang, Xuejing Kang, Anlong Ming

Auto-TLDR; A Deep Learning-based Superpixel Segmentation Algorithm for RGB-D Image

ActionSpotter: Deep Reinforcement Learning Framework for Temporal Action Spotting in Videos

Guillaume Vaudaux-Ruth, Adrien Chan-Hon-Tong, Catherine Achard

Auto-TLDR; ActionSpotter: A Reinforcement Learning Algorithm for Action Spotting in Video

FourierNet: Compact Mask Representation for Instance Segmentation Using Differentiable Shape Decoders

Hamd Ul Moqeet Riaz, Nuri Benbarka, Andreas Zell

Auto-TLDR; FourierNet: A single-shot, anchor-free, fully convolutional instance segmentation method that predicts a shape vector

A Versatile Crack Inspection Portable System Based on Classifier Ensemble and Controlled Illumination

Milind Gajanan Padalkar, Carlos Beltran-Gonzalez, Matteo Bustreo, Alessio Del Bue, Vittorio Murino

Auto-TLDR; Lighting Conditions for Crack Detection in Ceramic Tile

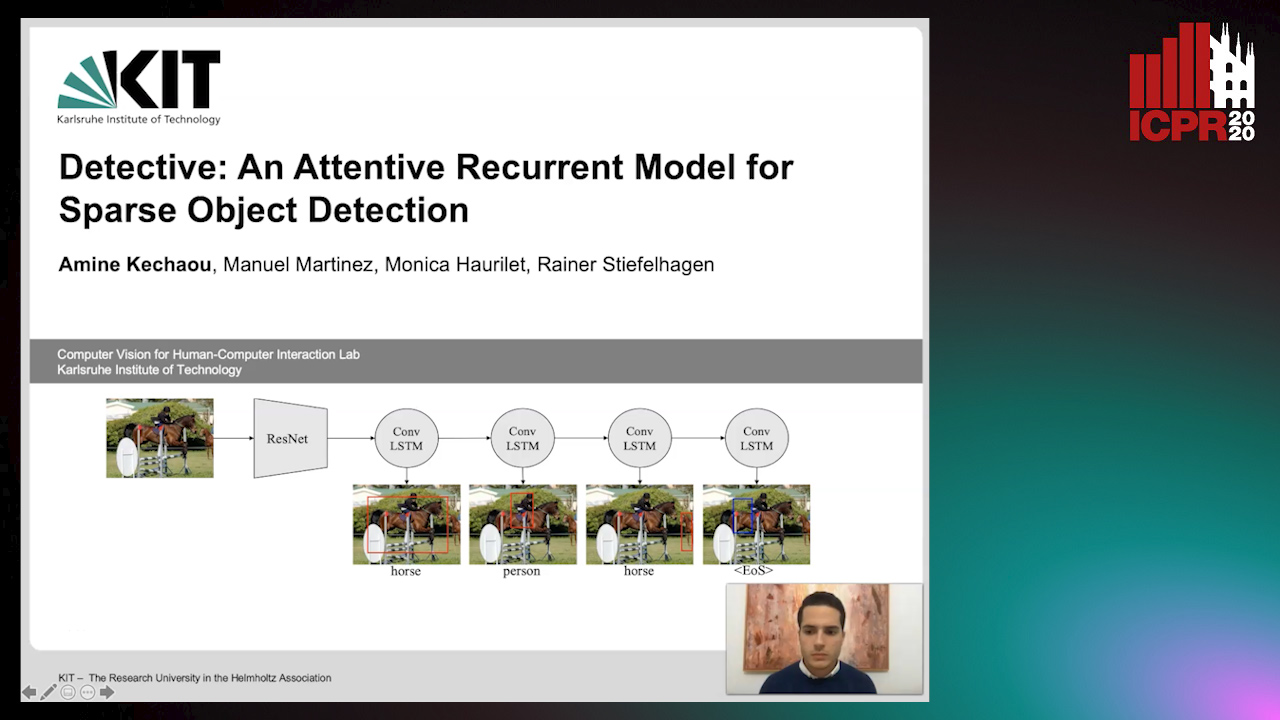

Detective: An Attentive Recurrent Model for Sparse Object Detection

Amine Kechaou, Manuel Martinez, Monica Haurilet, Rainer Stiefelhagen

Auto-TLDR; Detective: An attentive object detector that identifies objects in images in a sequential manner

DualBox: Generating BBox Pair with Strong Correspondence Via Occlusion Pattern Clustering and Proposal Refinement

Zheng Ge, Chuyu Hu, Xin Huang, Baiqiao Qiu, Osamu Yoshie

Auto-TLDR; R2NMS: Combining Full and Visible Body Bounding Box for Dense Pedestrian Detection

OmniFlowNet: A Perspective Neural Network Adaptation for Optical Flow Estimation in Omnidirectional Images

Charles-Olivier Artizzu, Haozhou Zhang, Guillaume Allibert, Cédric Demonceaux

Auto-TLDR; OmniFlowNet: A Convolutional Neural Network for Omnidirectional Optical Flow Estimation

Polarimetric Image Augmentation

Marc Blanchon, Fabrice Meriaudeau, Olivier Morel, Ralph Seulin, Desire Sidibe

Auto-TLDR; Polarimetric Augmentation for Deep Learning in Robotics Applications

Aggregating Object Features Based on Attention Weights for Fine-Grained Image Retrieval

Hongli Lin, Yongqi Song, Zixuan Zeng, Weisheng Wang

Auto-TLDR; DSAW: Unsupervised Dual-selection for Fine-Grained Image Retrieval

FeatureNMS: Non-Maximum Suppression by Learning Feature Embeddings

Auto-TLDR; FeatureNMS: Non-Maximum Suppression for Multiple Object Detection

Force Banner for the Recognition of Spatial Relations

Robin Deléarde, Camille Kurtz, Laurent Wendling, Philippe Dejean

Auto-TLDR; Spatial Relation Recognition using Force Banners

CASNet: Common Attribute Support Network for Image Instance and Panoptic Segmentation

Xiaolong Liu, Yuqing Hou, Anbang Yao, Yurong Chen, Keqiang Li

Auto-TLDR; Common Attribute Support Network for instance segmentation and panoptic segmentation

Image Sequence Based Cyclist Action Recognition Using Multi-Stream 3D Convolution

Stefan Zernetsch, Steven Schreck, Viktor Kress, Konrad Doll, Bernhard Sick

Auto-TLDR; 3D-ConvNet: A Multi-stream 3D Convolutional Neural Network for Detecting Cyclists in Real World Traffic Situations

SECI-GAN: Semantic and Edge Completion for Dynamic Objects Removal

Francesco Pinto, Andrea Romanoni, Matteo Matteucci, Phil Torr

Auto-TLDR; SECI-GAN: Semantic and Edge Conditioned Inpainting Generative Adversarial Network

BiLuNet: A Multi-Path Network for Semantic Segmentation on X-Ray Images

Van Luan Tran, Huei-Yung Lin, Rachel Liu, Chun-Han Tseng

Auto-TLDR; BiLuNet: Multi-path Convolutional Neural Network for Semantic Segmentation of Lumbar vertebrae, sacrum,

Level Three Synthetic Fingerprint Generation

Andre Wyzykowski, Mauricio Pamplona Segundo, Rubisley Lemes

Auto-TLDR; Synthesis of High-Resolution Fingerprints with Pore Detection Using CycleGAN
