Deep Superpixel Cut for Unsupervised Image Segmentation

Auto-TLDR; Deep Superpixel Cut for Deep Unsupervised Image Segmentation

Similar papers

BP-Net: Deep Learning-Based Superpixel Segmentation for RGB-D Image

Bin Zhang, Xuejing Kang, Anlong Ming

Auto-TLDR; A Deep Learning-based Superpixel Segmentation Algorithm for RGB-D Image

Content-Sensitive Superpixels Based on Adaptive Regrowth

Auto-TLDR; Adaptive Regrowth for Content-Sensitive Superpixels

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

DE-Net: Dilated Encoder Network for Automated Tongue Segmentation

Hui Tang, Bin Wang, Jun Zhou, Yongsheng Gao

Auto-TLDR; Automated Tongue Image Segmentation using De-Net

Superpixel-Based Refinement for Object Proposal Generation

Christian Wilms, Simone Frintrop

Auto-TLDR; Superpixel-based Refinement of AttentionMask for Object Segmentation

Variational Deep Embedding Clustering by Augmented Mutual Information Maximization

Qiang Ji, Yanfeng Sun, Yongli Hu, Baocai Yin

Auto-TLDR; Clustering by Augmented Mutual Information maximization for Deep Embedding

Generalized Shortest Path-Based Superpixels for Accurate Segmentation of Spherical Images

Rémi Giraud, Rodrigo Borba Pinheiro, Yannick Berthoumieu

Auto-TLDR; SPS: Spherical Shortest Path-based Superpixels

Video Semantic Segmentation Using Deep Multi-View Representation Learning

Akrem Sellami, Salvatore Tabbone

Auto-TLDR; Deep Multi-view Representation Learning for Video Object Segmentation

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

Feature-Aware Unsupervised Learning with Joint Variational Attention and Automatic Clustering

Wang Ru, Lin Li, Peipei Wang, Liu Peiyu

Auto-TLDR; Deep Variational Attention Encoder-Decoder for Clustering

Abstract Slides Poster Similar

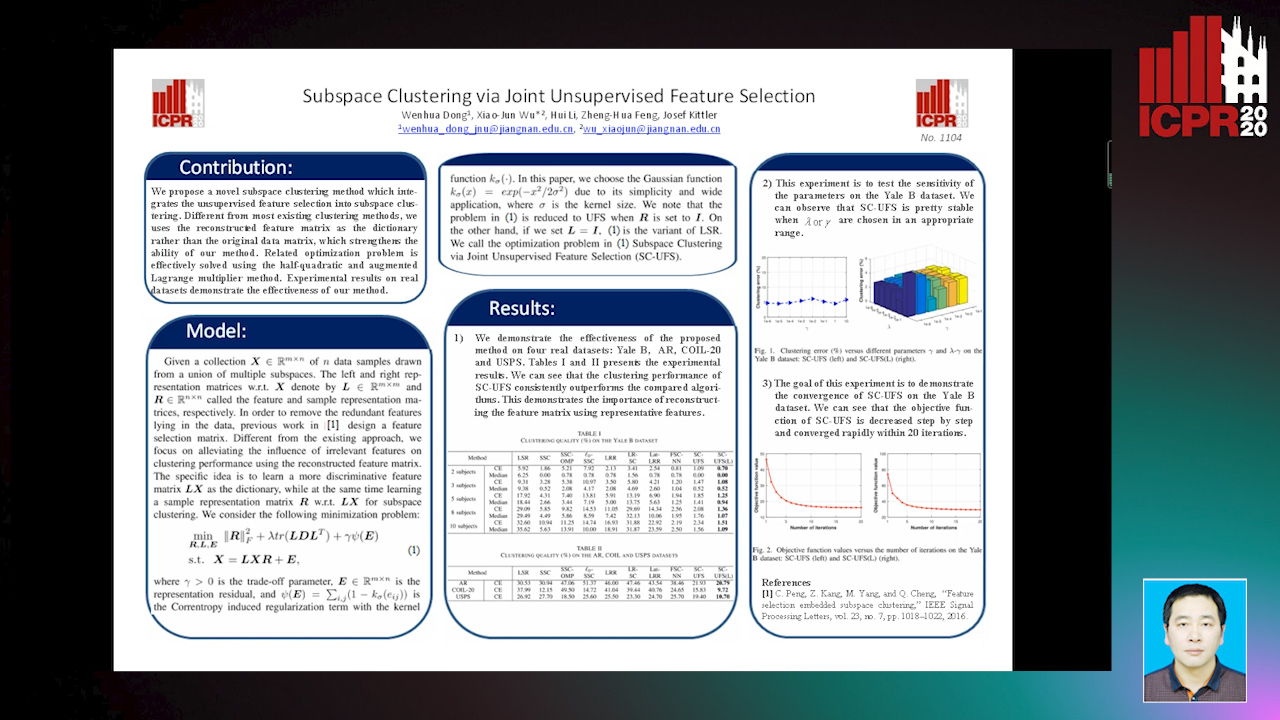

Subspace Clustering Via Joint Unsupervised Feature Selection

Wenhua Dong, Xiaojun Wu, Hui Li, Zhenhua Feng, Josef Kittler

Auto-TLDR; Unsupervised Feature Selection for Subspace Clustering

Sparse-Dense Subspace Clustering

Shuai Yang, Wenqi Zhu, Yuesheng Zhu

Auto-TLDR; Sparse-Dense Subspace Clustering with Piecewise Correlation Estimation

3D Semantic Labeling of Photogrammetry Meshes Based on Active Learning

Mengqi Rong, Shuhan Shen, Zhanyi Hu

Auto-TLDR; 3D Semantic Expression of Urban Scenes Based on Active Learning

Adaptive Image Compression Using GAN Based Semantic-Perceptual Residual Compensation

Ruojing Wang, Zitang Sun, Sei-Ichiro Kamata, Weili Chen

Auto-TLDR; Adaptive Image Compression using GAN based Semantic-Perceptual Residual Compensation

End-To-End Multi-Task Learning for Lung Nodule Segmentation and Diagnosis

Wei Chen, Qiuli Wang, Dan Yang, Xiaohong Zhang, Chen Liu, Yucong Li

Auto-TLDR; A novel multi-task framework for lung nodule diagnosis based on deep learning and medical features

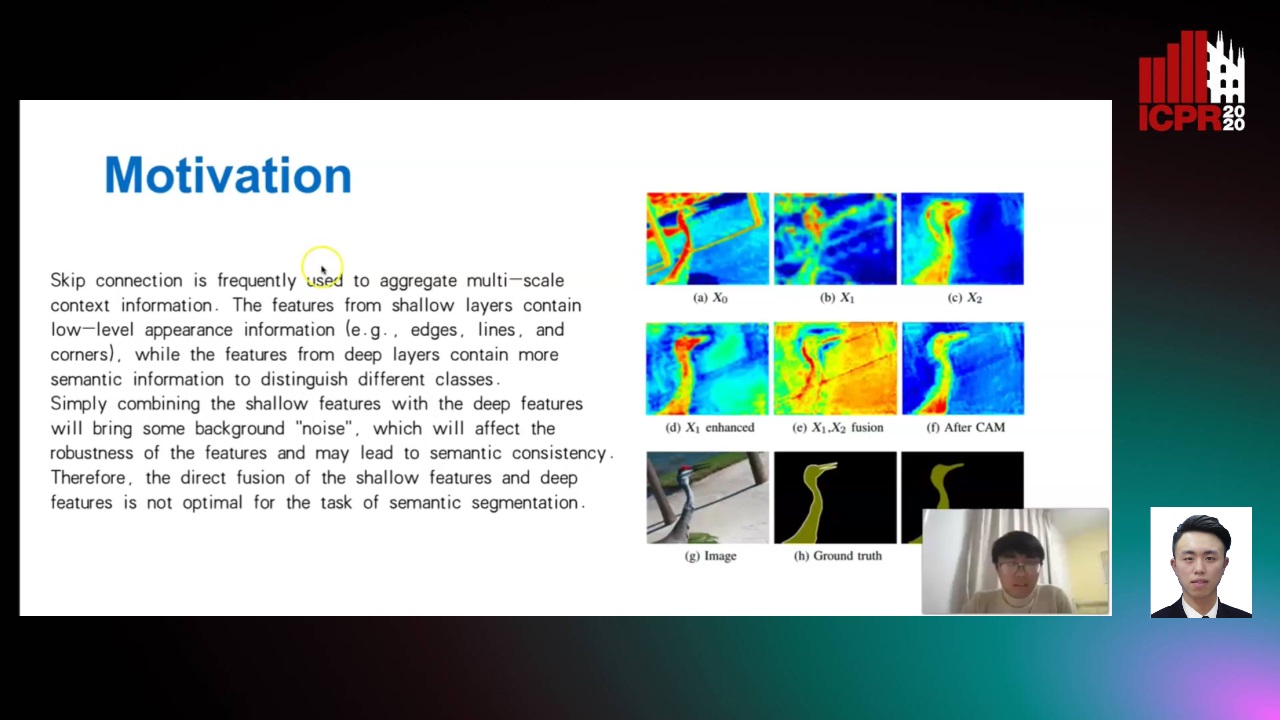

Enhanced Feature Pyramid Network for Semantic Segmentation

Mucong Ye, Ouyang Jinpeng, Ge Chen, Jing Zhang, Xiaogang Yu

Auto-TLDR; EFPN: Enhanced Feature Pyramid Network for Semantic Segmentation

Abstract Slides Poster Similar

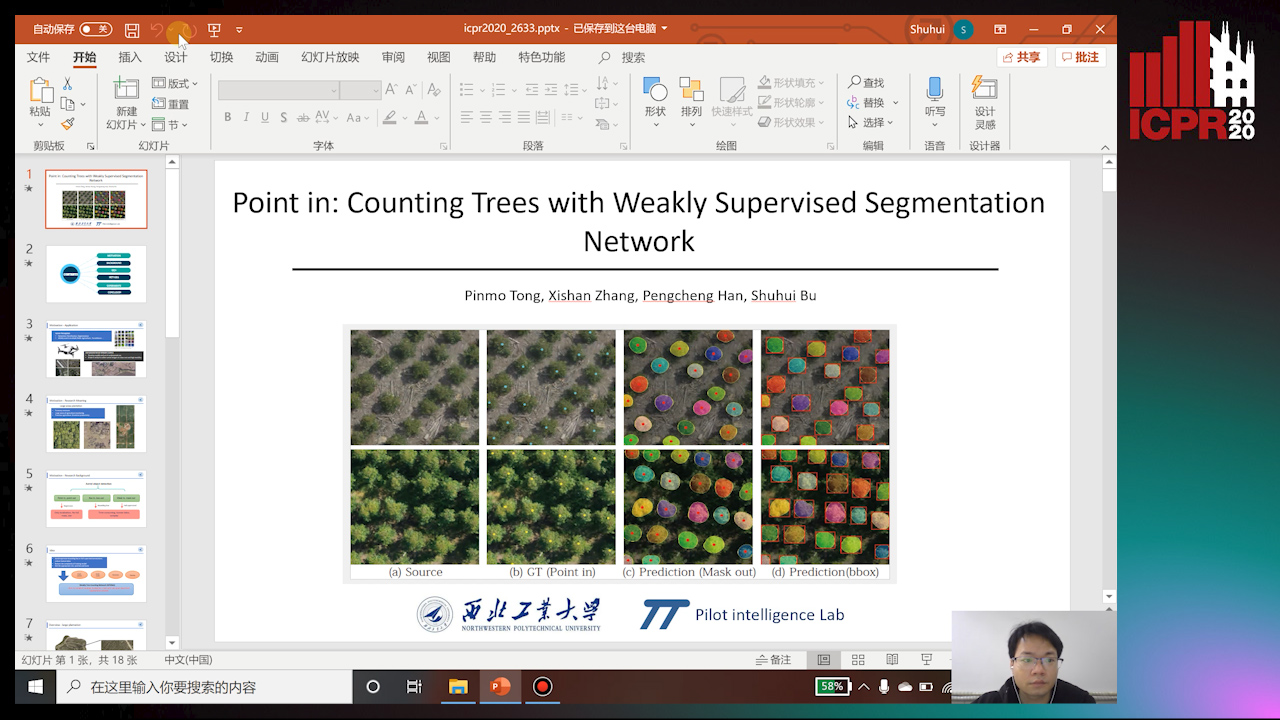

Point In: Counting Trees with Weakly Supervised Segmentation Network

Pinmo Tong, Shuhui Bu, Pengcheng Han

Auto-TLDR; Weakly Supervised Tree Counting Using a Deep Segmentation Network with Localization and Mask Prediction

Constrained Spectral Clustering Network with Self-Training

Xinyue Liu, Shichong Yang, Linlin Zong

Auto-TLDR; Constrained Spectral Clustering Network: A Constrained Deep spectral clustering network

Progressive Scene Segmentation Based on Self-Attention Mechanism

Yunyi Pan, Yuan Gan, Kun Liu, Yan Zhang

Auto-TLDR; Two-Stage Semantic Scene Segmentation with Self-Attention

Abstract Slides Poster Similar

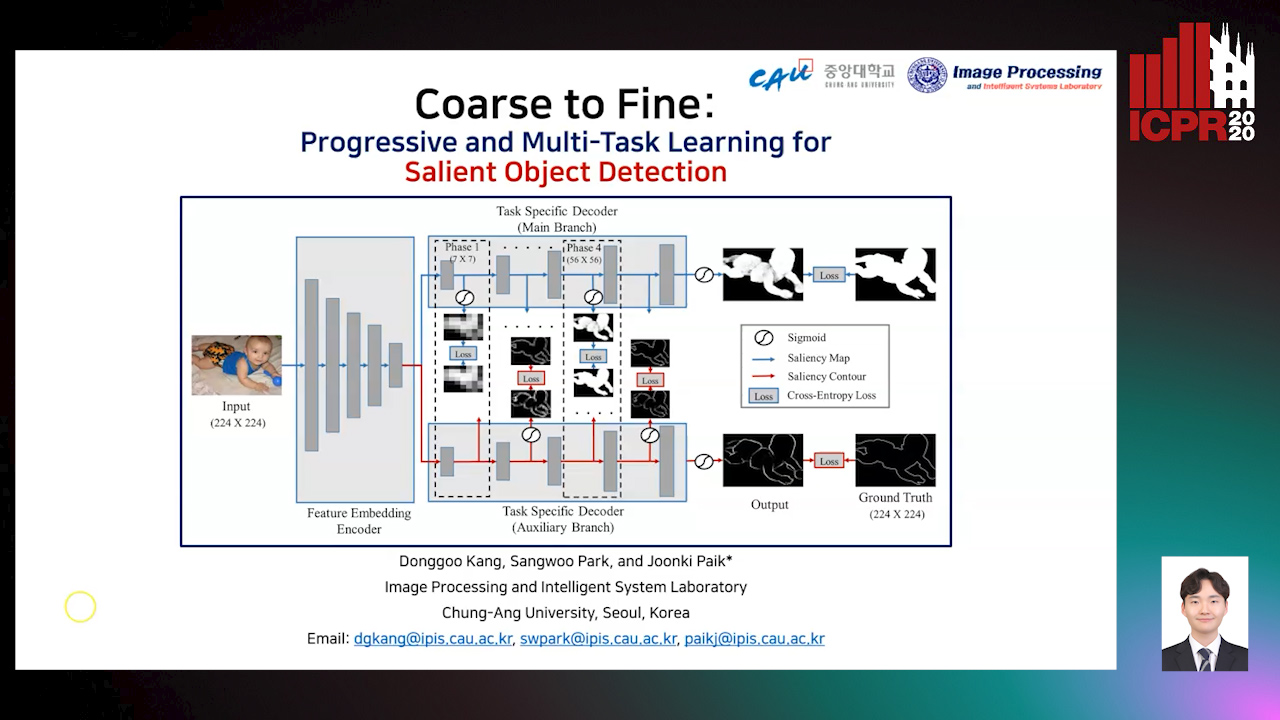

Coarse to Fine: Progressive and Multi-Task Learning for Salient Object Detection

Dong-Goo Kang, Sangwoo Park, Joonki Paik

Auto-TLDR; Progressive and multi-task learning scheme for salient object detection

Ancient Document Layout Analysis: Autoencoders Meet Sparse Coding

Homa Davoudi, Marco Fiorucci, Arianna Traviglia

Auto-TLDR; Unsupervised Representation Learning for Document Layout Analysis

Siamese Graph Convolution Network for Face Sketch Recognition

Liang Fan, Xianfang Sun, Paul Rosin

Auto-TLDR; A novel Siamese graph convolution network for face sketch recognition

Two-Stage Adaptive Object Scene Flow Using Hybrid CNN-CRF Model

Congcong Li, Haoyu Ma, Qingmin Liao

Auto-TLDR; Adaptive object scene flow estimation using a hybrid CNN-CRF model and adaptive iteration

Enhancing Deep Semantic Segmentation of RGB-D Data with Entangled Forests

Matteo Terreran, Elia Bonetto, Stefano Ghidoni

Auto-TLDR; FuseNet: A Lighter Deep Learning Model for Semantic Segmentation

CASNet: Common Attribute Support Network for Image Instance and Panoptic Segmentation

Xiaolong Liu, Yuqing Hou, Anbang Yao, Yurong Chen, Keqiang Li

Auto-TLDR; Common Attribute Support Network for instance segmentation and panoptic segmentation

Global-Local Attention Network for Semantic Segmentation in Aerial Images

Minglong Li, Lianlei Shan, Weiqiang Wang

Auto-TLDR; GLANet: Global-Local Attention Network for Semantic Segmentation

Triplet-Path Dilated Network for Detection and Segmentation of General Pathological Images

Jiaqi Luo, Zhicheng Zhao, Fei Su, Limei Guo

Auto-TLDR; Triplet-path Network for One-Stage Object Detection and Segmentation in Pathological Images

Fused 3-Stage Image Segmentation for Pleural Effusion Cell Clusters

Sike Ma, Meng Zhao, Hao Wang, Fan Shi, Xuguo Sun, Shengyong Chen, Hong-Ning Dai

Auto-TLDR; Coarse Segmentation of Stained and Unstained Cell Clusters in Pleural Effusion Using a 3-Stage Segmentation Method

Semantic Segmentation Refinement Using Entropy and Boundary-guided Monte Carlo Sampling and Directed Regional Search

Zitang Sun, Sei-Ichiro Kamata, Ruojing Wang, Weili Chen

Auto-TLDR; Directed Region Search and Refinement for Semantic Segmentation

Self-Paced Bottom-Up Clustering Network with Side Information for Person Re-Identification

Mingkun Li, Chun-Guang Li, Ruo-Pei Guo, Jun Guo

Auto-TLDR; Self-Paced Bottom-up Clustering Network with Side Information for Unsupervised Person Re-identification

Progressive Cluster Purification for Unsupervised Feature Learning

Yifei Zhang, Chang Liu, Yu Zhou, Wei Wang, Weiping Wang, Qixiang Ye

Auto-TLDR; Progressive Cluster Purification for Unsupervised Feature Learning

PSDNet: A Balanced Architecture of Accuracy and Parameters for Semantic Segmentation

Auto-TLDR; Pyramid Pooling Module with SE1Cblock and D2SUpsample Network (PSDNet)

Multi-Modal Deep Clustering: Unsupervised Partitioning of Images

Auto-TLDR; Multi-Modal Deep Clustering for Unlabeled Images

N2D: (Not Too) Deep Clustering Via Clustering the Local Manifold of an Autoencoded Embedding

Ryan Mcconville, Raul Santos-Rodriguez, Robert Piechocki, Ian Craddock

Auto-TLDR; Local Manifold Learning for Deep Clustering on Autoencoded Embeddings

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Semantic Segmentation of Breast Ultrasound Image with Pyramid Fuzzy Uncertainty Reduction and Direction Connectedness Feature

Kuan Huang, Yingtao Zhang, Heng-Da Cheng, Ping Xing, Boyu Zhang

Auto-TLDR; Uncertainty-Based Deep Learning for Breast Ultrasound Image Segmentation

Deeply-Fused Attentive Network for Stereo Matching

Zuliu Yang, Xindong Ai, Weida Yang, Yong Zhao, Qifei Dai, Fuchi Li

Auto-TLDR; DF-Net: Deep Learning-based Network for Stereo Matching

Free-Form Image Inpainting Via Contrastive Attention Network

Xin Ma, Xiaoqiang Zhou, Huaibo Huang, Zhenhua Chai, Xiaolin Wei, Ran He

Auto-TLDR; Self-supervised Siamese inference for image inpainting

DA-RefineNet: Dual-Inputs Attention RefineNet for Whole Slide Image Segmentation

Ziqiang Li, Rentuo Tao, Qianrun Wu, Bin Li

Auto-TLDR; DA-RefineNet: A dual-inputs attention network for whole slide image segmentation

Walk the Lines: Object Contour Tracing CNN for Contour Completion of Ships

Auto-TLDR; Walk the Lines: A Convolutional Neural Network trained to follow object contours

Joint Semantic-Instance Segmentation of 3D Point Clouds: Instance Separation and Semantic Fusion

Auto-TLDR; Joint Semantic Segmentation and Instance Separation of 3D Point Clouds

More Correlations Better Performance: Fully Associative Networks for Multi-Label Image Classification

Auto-TLDR; Fully Associative Network for Fully Exploiting Correlation Information in Multi-Label Classification

Real-Time Semantic Segmentation Via Region and Pixel Context Network

Yajun Li, Yazhou Liu, Quansen Sun

Auto-TLDR; A Dual Context Network for Real-Time Semantic Segmentation

Progressive Unsupervised Domain Adaptation for Image-Based Person Re-Identification

Mingliang Yang, Da Huang, Jing Zhao

Auto-TLDR; Progressive Unsupervised Domain Adaptation for Person Re-Identification

Abstract Slides Poster Similar

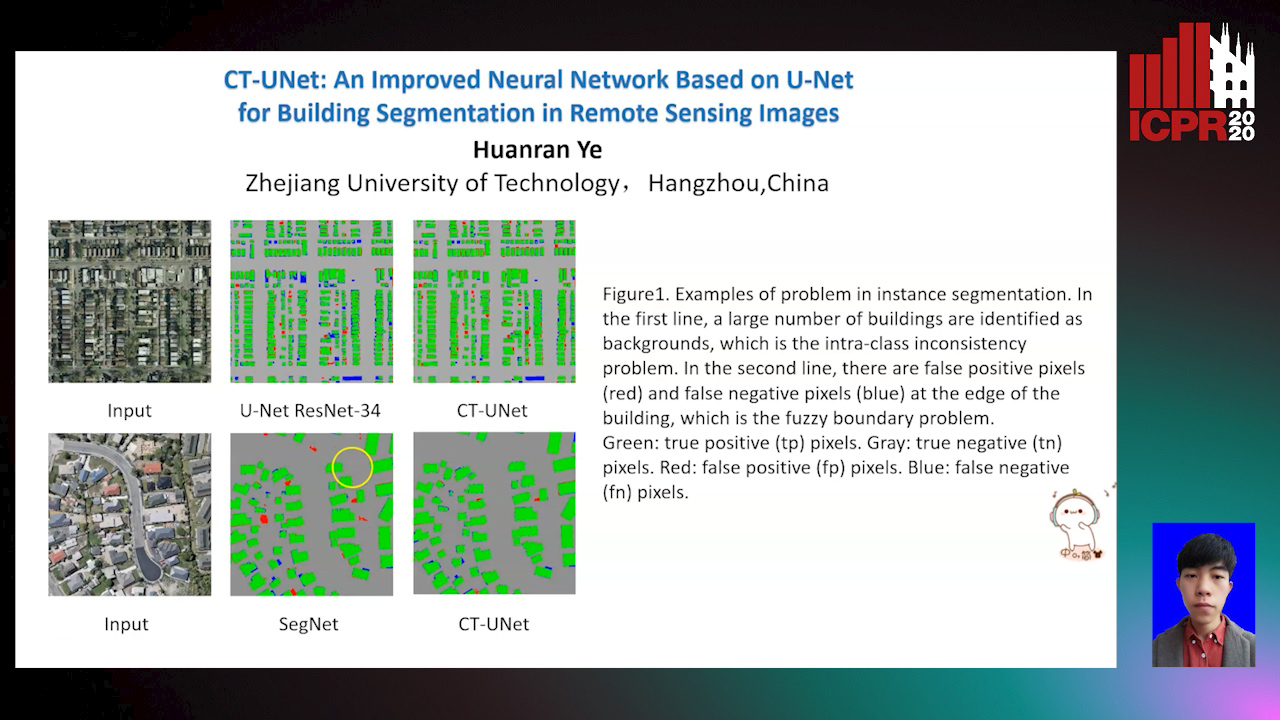

CT-UNet: An Improved Neural Network Based on U-Net for Building Segmentation in Remote Sensing Images

Huanran Ye, Sheng Liu, Kun Jin, Haohao Cheng

Auto-TLDR; Context-Transfer-UNet: A UNet-based Network for Building Segmentation in Remote Sensing Images

Deep Space Probing for Point Cloud Analysis

Yirong Yang, Bin Fan, Yongcheng Liu, Hua Lin, Jiyong Zhang, Xin Liu, 蔡鑫宇, Shiming Xiang, Chunhong Pan

Auto-TLDR; SPCNN: Space Probing Convolutional Neural Network for Point Cloud Analysis

Real-Time Monocular Depth Estimation with Extremely Light-Weight Neural Network

Mian Jhong Chiu, Wei-Chen Chiu, Hua-Tsung Chen, Jen-Hui Chuang

Auto-TLDR; Real-Time Light-Weight Depth Prediction for Obstacle Avoidance and Environment Sensing with Deep Learning-based CNN
