Delving in the Loss Landscape to Embed Robust Watermarks into Neural Networks

Enzo Tartaglione,

Marco Grangetto,

Davide Cavagnino,

Marco Botta

Auto-TLDR; Watermark Aware Training of Neural Networks

Similar papers

Removing Backdoor-Based Watermarks in Neural Networks with Limited Data

Xuankai Liu, Fengting Li, Bihan Wen, Qi Li

Auto-TLDR; WILD: A backdoor-based watermark removal framework using limited data

Adaptive Noise Injection for Training Stochastic Student Networks from Deterministic Teachers

Yi Xiang Marcus Tan, Yuval Elovici, Alexander Binder

Auto-TLDR; Adaptive Stochastic Networks for Adversarial Attacks

Beyond Cross-Entropy: Learning Highly Separable Feature Distributions for Robust and Accurate Classification

Arslan Ali, Andrea Migliorati, Tiziano Bianchi, Enrico Magli

Auto-TLDR; Gaussian class-conditional simplex loss for adversarial robust multiclass classifiers

Adversarially Training for Audio Classifiers

Raymel Alfonso Sallo, Mohammad Esmaeilpour, Patrick Cardinal

Auto-TLDR; Adversarially Training for Robust Neural Networks against Adversarial Attacks

Compression Strategies and Space-Conscious Representations for Deep Neural Networks

Giosuè Marinò, Gregorio Ghidoli, Marco Frasca, Dario Malchiodi

Auto-TLDR; Compression of Large Convolutional Neural Networks by Weight Pruning and Quantization

Exploiting Non-Linear Redundancy for Neural Model Compression

Muhammad Ahmed Shah, Raphael Olivier, Bhiksha Raj

Auto-TLDR; Compressing Deep Neural Networks with Linear Dependency

Large-Scale Historical Watermark Recognition: Dataset and a New Consistency-Based Approach

Xi Shen, Ilaria Pastrolin, Oumayma Bounou, Spyros Gidaris, Marc Smith, Olivier Poncet, Mathieu Aubry

Auto-TLDR; Historical Watermark Recognition with Fine-Grained Cross-Domain One-Shot Instance Recognition

How Does DCNN Make Decisions?

Yi Lin, Namin Wang, Xiaoqing Ma, Ziwei Li, Gang Bai

Auto-TLDR; Exploring Deep Convolutional Neural Network's Decision-Making Interpretability

Optimal Transport As a Defense against Adversarial Attacks

Quentin Bouniot, Romaric Audigier, Angélique Loesch

Auto-TLDR; Sinkhorn Adversarial Training with Optimal Transport Theory

Learning Sparse Deep Neural Networks Using Efficient Structured Projections on Convex Constraints for Green AI

Michel Barlaud, Frederic Guyard

Auto-TLDR; Constrained Deep Neural Network with Constrained Splitting Projection

A Delayed Elastic-Net Approach for Performing Adversarial Attacks

Brais Cancela, Veronica Bolon-Canedo, Amparo Alonso-Betanzos

Auto-TLDR; Robustness of ImageNet Pretrained Models against Adversarial Attacks

Neuron-Based Network Pruning Based on Majority Voting

Ali Alqahtani, Xianghua Xie, Ehab Essa, Mark W. Jones

Auto-TLDR; Large-Scale Neural Network Pruning using Majority Voting

Generalization Comparison of Deep Neural Networks Via Output Sensitivity

Mahsa Forouzesh, Farnood Salehi, Patrick Thiran

Auto-TLDR; Generalization of Deep Neural Networks using Sensitivity

Variational Inference with Latent Space Quantization for Adversarial Resilience

Vinay Kyatham, Deepak Mishra, Prathosh A.P.

Auto-TLDR; A Generalized Defense Mechanism for Adversarial Attacks on Data Manifolds

Speeding-Up Pruning for Artificial Neural Networks: Introducing Accelerated Iterative Magnitude Pruning

Marco Zullich, Eric Medvet, Felice Andrea Pellegrino, Alessio Ansuini

Auto-TLDR; Iterative Pruning of Artificial Neural Networks with Overparametrization

Rethinking of Deep Models Parameters with Respect to Data Distribution

Shitala Prasad, Dongyun Lin, Yiqun Li, Sheng Dong, Zaw Min Oo

Auto-TLDR; A progressive stepwise training strategy for deep neural networks

Attack Agnostic Adversarial Defense via Visual Imperceptible Bound

Saheb Chhabra, Akshay Agarwal, Richa Singh, Mayank Vatsa

Auto-TLDR; Robust Adversarial Defense with Visual Imperceptible Bound

Fine-Tuning Convolutional Neural Networks: A Comprehensive Guide and Benchmark Analysis for Glaucoma Screening

Amed Mvoulana, Rostom Kachouri, Mohamed Akil

Auto-TLDR; Fine-tuning Convolutional Neural Networks for Glaucoma Screening

Defense Mechanism against Adversarial Attacks Using Density-Based Representation of Images

Yen-Ting Huang, Wen-Hung Liao, Chen-Wei Huang

Auto-TLDR; Adversarial Attacks Reduction Using Input Recharacterization

Explain2Attack: Text Adversarial Attacks via Cross-Domain Interpretability

Mahmoud Hossam, Le Trung, He Zhao, Dinh Phung

Auto-TLDR; Transfer2Attack: A Black-box Adversarial Attack on Text Classification

Verifying the Causes of Adversarial Examples

Honglin Li, Yifei Fan, Frieder Ganz, Tony Yezzi, Payam Barnaghi

Auto-TLDR; Exploring the Causes of Adversarial Examples in Neural Networks

Norm Loss: An Efficient yet Effective Regularization Method for Deep Neural Networks

Theodoros Georgiou, Sebastian Schmitt, Thomas Baeck, Wei Chen, Michael Lew

Auto-TLDR; Weight Soft-Regularization with Oblique Manifold for Convolutional Neural Network Training

Joint Compressive Autoencoders for Full-Image-To-Image Hiding

Xiyao Liu, Ziping Ma, Xingbei Guo, Jialu Hou, Lei Wang, Gerald Schaefer, Hui Fang

Auto-TLDR; J-CAE: Joint Compressive Autoencoder for Image Hiding

MaxDropout: Deep Neural Network Regularization Based on Maximum Output Values

Claudio Filipi Gonçalves Santos, Danilo Colombo, Mateus Roder, Joao Paulo Papa

Auto-TLDR; MaxDropout: A Regularizer for Deep Neural Networks

Abstract Slides Poster Similar

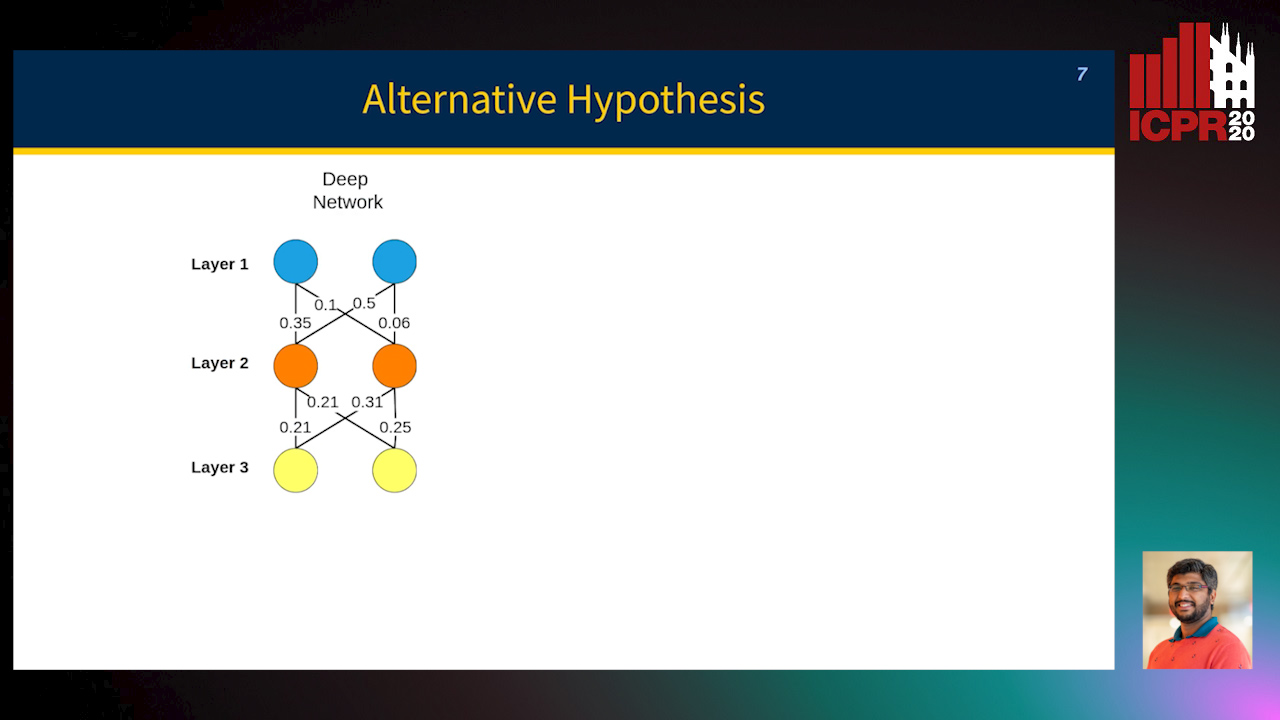

Towards Explaining Adversarial Examples Phenomenon in Artificial Neural Networks

Ramin Barati, Reza Safabakhsh, Mohammad Rahmati

Auto-TLDR; Convolutional Neural Networks and Adversarial Training from the Perspective of convergence

Efficient Online Subclass Knowledge Distillation for Image Classification

Maria Tzelepi, Nikolaos Passalis, Anastasios Tefas

Auto-TLDR; OSKD: Online Subclass Knowledge Distillation

ESResNet: Environmental Sound Classification Based on Visual Domain Models

Andrey Guzhov, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Environmental Sound Classification with Short-Time Fourier Transform Spectrograms

ResNet-Like Architecture with Low Hardware Requirements

Elena Limonova, Daniil Alfonso, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; BM-ResNet: Bipolar Morphological ResNet for Image Classification

MINT: Deep Network Compression Via Mutual Information-Based Neuron Trimming

Madan Ravi Ganesh, Jason Corso, Salimeh Yasaei Sekeh

Auto-TLDR; Mutual Information-based Neuron Trimming for Deep Compression via Pruning

Multimodal Side-Tuning for Document Classification

Stefano Zingaro, Giuseppe Lisanti, Maurizio Gabbrielli

Auto-TLDR; Side-tuning for Multimodal Document Classification

Video Face Manipulation Detection through Ensemble of CNNs

Nicolo Bonettini, Edoardo Daniele Cannas, Sara Mandelli, Luca Bondi, Paolo Bestagini, Stefano Tubaro

Auto-TLDR; Face Manipulation Detection in Video Sequences Using Convolutional Neural Networks

F-Mixup: Attack CNNs from Fourier Perspective

Xiu-Chuan Li, Xu-Yao Zhang, Fei Yin, Cheng-Lin Liu

Auto-TLDR; F-Mixup: A novel black-box attack in frequency domain for deep neural networks

Activation Density Driven Efficient Pruning in Training

Timothy Foldy-Porto, Yeshwanth Venkatesha, Priyadarshini Panda

Auto-TLDR; Real-Time Neural Network Pruning with Compressed Networks

Learning to Prune in Training via Dynamic Channel Propagation

Shibo Shen, Rongpeng Li, Zhifeng Zhao, Honggang Zhang, Yugeng Zhou

Auto-TLDR; Dynamic Channel Propagation for Neural Network Pruning

Improving Model Accuracy for Imbalanced Image Classification Tasks by Adding a Final Batch Normalization Layer: An Empirical Study

Veysel Kocaman, Ofer M. Shir, Thomas Baeck

Auto-TLDR; Exploiting Batch Normalization before the Output Layer in Deep Learning for Minority Class Detection in Imbalanced Data Sets

Is the Meta-Learning Idea Able to Improve the Generalization of Deep Neural Networks on the Standard Supervised Learning?

Auto-TLDR; Meta-learning Based Training of Deep Neural Networks for Few-Shot Learning

Task-based Focal Loss for Adversarially Robust Meta-Learning

Yufan Hou, Lixin Zou, Weidong Liu

Auto-TLDR; Task-based Adversarial Focal Loss for Few-shot Meta-Learner

Can Data Placement Be Effective for Neural Networks Classification Tasks? Introducing the Orthogonal Loss

Brais Cancela, Veronica Bolon-Canedo, Amparo Alonso-Betanzos

Auto-TLDR; Spatial Placement for Neural Network Training Loss Functions

Softer Pruning, Incremental Regularization

Linhang Cai, Zhulin An, Yongjun Xu

Auto-TLDR; Asymptotic SofteR Filter Pruning for Deep Neural Network Pruning

Revisiting the Training of Very Deep Neural Networks without Skip Connections

Oyebade Kayode Oyedotun, Abd El Rahman Shabayek, Djamila Aouada, Bjorn Ottersten

Auto-TLDR; Optimization of Very Deep PlainNets without shortcut connections with 'vanishing and exploding units' activations

Improving Gravitational Wave Detection with 2D Convolutional Neural Networks

Siyu Fan, Yisen Wang, Yuan Luo, Alexander Michael Schmitt, Shenghua Yu

Auto-TLDR; Two-dimensional Convolutional Neural Networks for Gravitational Wave Detection from Time Series with Background Noise

CCA: Exploring the Possibility of Contextual Camouflage Attack on Object Detection

Shengnan Hu, Yang Zhang, Sumit Laha, Ankit Sharma, Hassan Foroosh

Auto-TLDR; Contextual camouflage attack for object detection

Hcore-Init: Neural Network Initialization Based on Graph Degeneracy

Stratis Limnios, George Dasoulas, Dimitrios Thilikos, Michalis Vazirgiannis

Auto-TLDR; K-hypercore: Graph Mining for Deep Neural Networks

Confidence Calibration for Deep Renal Biopsy Immunofluorescence Image Classification

Federico Pollastri, Juan Maroñas, Federico Bolelli, Giulia Ligabue, Roberto Paredes, Riccardo Magistroni, Costantino Grana

Auto-TLDR; A Probabilistic Convolutional Neural Network for Immunofluorescence Classification in Renal Biopsy

Accuracy-Perturbation Curves for Evaluation of Adversarial Attack and Defence Methods

Auto-TLDR; Accuracy-perturbation Curve for Robustness Evaluation of Adversarial Examples

Adversarial Training for Aspect-Based Sentiment Analysis with BERT

Akbar Karimi, Andrea Prati, Leonardo Rossi

Auto-TLDR; Adversarial Training of BERT for Aspect-Based Sentiment Analysis

On the Use of Benford's Law to Detect GAN-Generated Images

Nicolo Bonettini, Paolo Bestagini, Simone Milani, Stefano Tubaro

Auto-TLDR; Using Benford's Law to Detect GAN-generated Images from Natural Images

Rethinking Experience Replay: A Bag of Tricks for Continual Learning

Pietro Buzzega, Matteo Boschini, Angelo Porrello, Simone Calderara

Auto-TLDR; Experience Replay for Continual Learning: A Practical Approach
