Multimodal End-To-End Learning for Autonomous Steering in Adverse Road and Weather Conditions

Jyri Sakari Maanpää, Josef Taher, Petri Manninen, Leo Pakola, Iaroslav Melekhov, Juha Hyyppä

Auto-TLDR; End-to-End Learning for Autonomous Steering in Adverse Road and Weather Conditions with Lidar Data

Similar papers

Holistic Grid Fusion Based Stop Line Estimation

Runsheng Xu, Faezeh Tafazzoli, Li Zhang, Timo Rehfeld, Gunther Krehl, Arunava Seal

Auto-TLDR; Fused Multi-Sensory Data for Stop Lines Detection in Intersection Scenarios

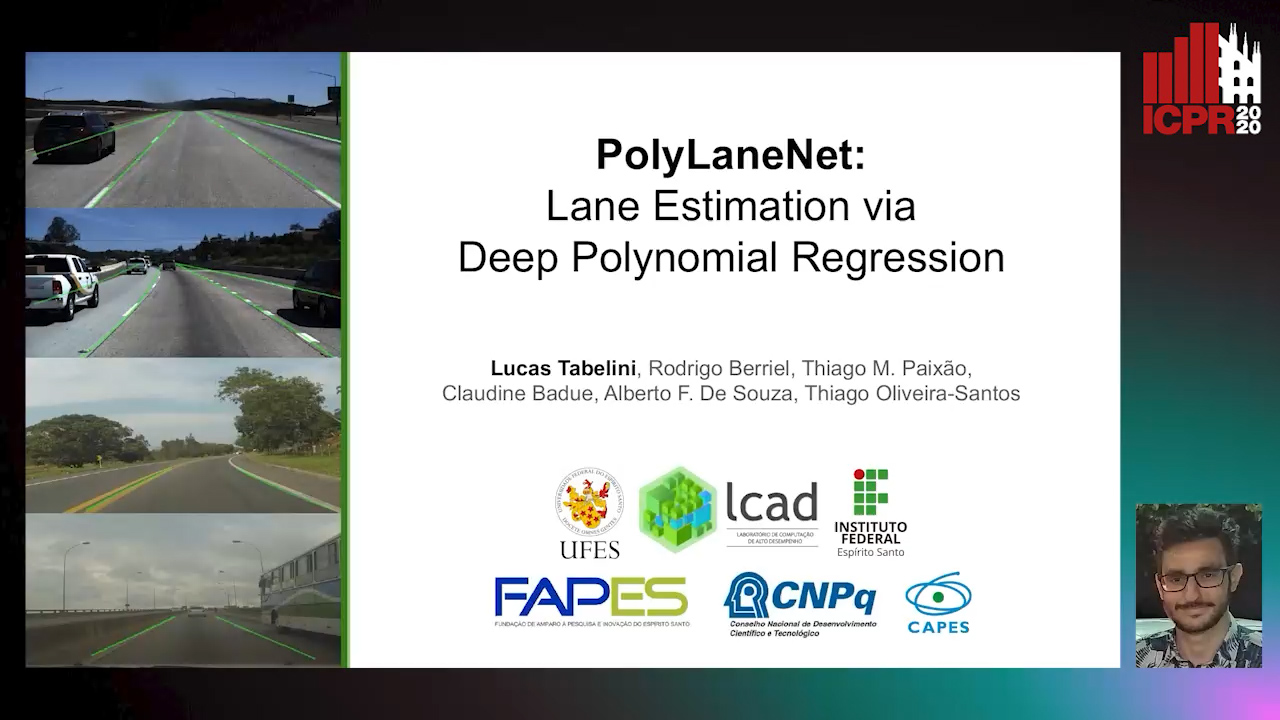

PolyLaneNet: Lane Estimation Via Deep Polynomial Regression

Talles Torres, Rodrigo Berriel, Thiago Paixão, Claudine Badue, Alberto F. De Souza, Thiago Oliveira-Santos

Auto-TLDR; Real-Time Lane Detection with Deep Polynomial Regression

Vehicle Lane Merge Visual Benchmark

Auto-TLDR; A Benchmark for Automated Cooperative Maneuvering Using Multi-view Video Streams and Ground Truth Vehicle Description

CARRADA Dataset: Camera and Automotive Radar with Range-Angle-Doppler Annotations

Arthur Ouaknine, Alasdair Newson, Julien Rebut, Florence Tupin, Patrick Pérez

Auto-TLDR; CARRADA: A dataset of synchronized camera and radar recordings with range-angle-Doppler annotations for autonomous driving

Real-Time End-To-End Lane ID Estimation Using Recurrent Networks

Ibrahim Halfaoui, Fahd Bouzaraa, Onay Urfalioglu

Auto-TLDR; Real-Time, Vision-Only Lane Identification Using Monocular Camera

Temporal Pulses Driven Spiking Neural Network for Time and Power Efficient Object Recognition in Autonomous Driving

Wei Wang, Shibo Zhou, Jingxi Li, Xiaohua Li, Junsong Yuan, Zhanpeng Jin

Auto-TLDR; Spiking Neural Network for Real-Time Object Recognition on Temporal LiDAR Pulses

A Bayesian Approach to Reinforcement Learning of Vision-Based Vehicular Control

Zahra Gharaee, Karl Holmquist, Linbo He, Michael Felsberg

Auto-TLDR; Bayesian Reinforcement Learning for Autonomous Driving

Ghost Target Detection in 3D Radar Data Using Point Cloud Based Deep Neural Network

Mahdi Chamseddine, Jason Rambach, Oliver Wasenmüler, Didier Stricker

Auto-TLDR; Point Based Deep Learning for Ghost Target Detection in 3D Radar Point Clouds

Attention Based Coupled Framework for Road and Pothole Segmentation

Shaik Masihullah, Ritu Garg, Prerana Mukherjee, Anupama Ray

Auto-TLDR; Few Shot Learning for Road and Pothole Segmentation on KITTI and IDD

RISEdb: A Novel Indoor Localization Dataset

Carlos Sanchez Belenguer, Erik Wolfart, Álvaro Casado Coscollá, Vitor Sequeira

Auto-TLDR; Indoor Localization Using LiDAR SLAM and Smartphones: A Benchmarking Dataset

Multiple Future Prediction Leveraging Synthetic Trajectories

Lorenzo Berlincioni, Federico Becattini, Lorenzo Seidenari, Alberto Del Bimbo

Auto-TLDR; Synthetic Trajectory Prediction using Markov Chains

RONELD: Robust Neural Network Output Enhancement for Active Lane Detection

Zhe Ming Chng, Joseph Mun Hung Lew, Jimmy Addison Lee

Auto-TLDR; Real-Time Robust Neural Network Output Enhancement for Active Lane Detection

NetCalib: A Novel Approach for LiDAR-Camera Auto-Calibration Based on Deep Learning

Shan Wu, Amnir Hadachi, Damien Vivet, Yadu Prabhakar

Auto-TLDR; Automatic Calibration of LiDAR and Cameras using Deep Neural Network

A Fine-Grained Dataset and Its Efficient Semantic Segmentation for Unstructured Driving Scenarios

Kai Andreas Metzger, Peter Mortimer, Hans J "Joe" Wuensche

Auto-TLDR; TAS500: A Semantic Segmentation Dataset for Autonomous Driving in Unstructured Environments

Extending Single Beam Lidar to Full Resolution by Fusing with Single Image Depth Estimation

Yawen Lu, Yuxing Wang, Devarth Parikh, Guoyu Lu

Auto-TLDR; Self-supervised LIDAR for Low-Cost Depth Estimation

Street-Map Based Validation of Semantic Segmentation in Autonomous Driving

Laura Von Rueden, Tim Wirtz, Fabian Hueger, Jan David Schneider, Nico Piatkowski, Christian Bauckhage

Auto-TLDR; Semantic Segmentation Mask Validation Using A-priori Knowledge from Street Maps

Visual Prediction of Driver Behavior in Shared Road Areas

Peter Gawronski, Darius Burschka

Auto-TLDR; Predicting Vehicle Behavior in Shared Road Segment Intersections Using Topological Knowledge

P2D: A Self-Supervised Method for Depth Estimation from Polarimetry

Marc Blanchon, Desire Sidibe, Olivier Morel, Ralph Seulin, Daniel Braun, Fabrice Meriaudeau

Auto-TLDR; Polarimetric Regularization for Monocular Depth Estimation

Surface Material Dataset for Robotics Applications (SMDRA): A Dataset with Friction Coefficient and RGB-D for Surface Segmentation

Donghun Noh, Hyunwoo Nam, Min Sung Ahn, Hosik Chae, Sangjoon Lee, Kyle Gillespie, Dennis Hong

Auto-TLDR; A Surface Material Dataset for Robotics Applications

Visual Localization for Autonomous Driving: Mapping the Accurate Location in the City Maze

Dongfang Liu, Yiming Cui, Xiaolei Guo, Wei Ding, Baijian Yang, Yingjie Chen

Auto-TLDR; Feature Voting for Robust Visual Localization in Urban Settings

Single-Modal Incremental Terrain Clustering from Self-Supervised Audio-Visual Feature Learning

Reina Ishikawa, Ryo Hachiuma, Akiyoshi Kurobe, Hideo Saito

Auto-TLDR; Multi-modal Variational Autoencoder for Terrain Type Clustering

Anomaly Detection, Localization and Classification for Railway Inspection

Riccardo Gasparini, Andrea D'Eusanio, Guido Borghi, Stefano Pini, Giuseppe Scaglione, Simone Calderara, Eugenio Fedeli, Rita Cucchiara

Auto-TLDR; Anomaly Detection and Localization Using Thermal Images in Low-Light Environments

Wireless Localisation in WiFi Using Novel Deep Architectures

Peizheng Li, Han Cui, Aftab Khan, Usman Raza, Robert Piechocki, Angela Doufexi, Tim Farnham

Auto-TLDR; Deep Neural Network for Localisation of WiFi Devices in Indoor Environments

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

Lane Detection Based on Object Detection and Image-To-Image Translation

Hiroyuki Komori, Kazunori Onoguchi

Auto-TLDR; Lane Marking and Road Boundary Detection from Monocular Camera Images using Inverse Perspective Mapping

Real-Time Drone Detection and Tracking with Visible, Thermal and Acoustic Sensors

Fredrik Svanström, Cristofer Englund, Fernando Alonso-Fernandez

Auto-TLDR; Automatic multi-sensor drone detection using sensor fusion

Improving Robotic Grasping on Monocular Images Via Multi-Task Learning and Positional Loss

William Prew, Toby Breckon, Magnus Bordewich, Ulrik Beierholm

Auto-TLDR; Improving grasping performance from monocular colour images in an end-to-end CNN architecture with multi-task learning

Polarimetric Image Augmentation

Marc Blanchon, Fabrice Meriaudeau, Olivier Morel, Ralph Seulin, Desire Sidibe

Auto-TLDR; Polarimetric Augmentation for Deep Learning in Robotics Applications

Yolo+FPN: 2D and 3D Fused Object Detection with an RGB-D Camera

Auto-TLDR; Yolo+FPN: Combining 2D and 3D Object Detection for Real-Time Object Detection

Localization of Unmanned Aerial Vehicles in Corridor Environments Using Deep Learning

Ram Padhy, Shahzad Ahmad, Sachin Verma, Sambit Bakshi, Pankaj Kumar Sa

Auto-TLDR; A monocular vision assisted localization algorithm for indoor corridor environments

RWF-2000: An Open Large Scale Video Database for Violence Detection

Ming Cheng, Kunjing Cai, Ming Li

Auto-TLDR; Flow Gated Network for Violence Detection in Surveillance Cameras

Semantic Segmentation for Pedestrian Detection from Motion in Temporal Domain

Auto-TLDR; Motion Profile: Recognizing Pedestrians along with their Motion Directions in a Temporal Way

Loop-closure detection by LiDAR scan re-identification

Jukka Peltomäki, Xingyang Ni, Jussi Puura, Joni-Kristian Kamarainen, Heikki Juhani Huttunen

Auto-TLDR; Loop-Closing Detection from LiDAR Scans Using Convolutional Neural Networks

End-To-End Deep Learning Methods for Automated Damage Detection in Extreme Events at Various Scales

Yongsheng Bai, Alper Yilmaz, Halil Sezen

Auto-TLDR; Robust Mask R-CNN for Crack Detection in Extreme Events

Exploring Severe Occlusion: Multi-Person 3D Pose Estimation with Gated Convolution

Renshu Gu, Gaoang Wang, Jenq-Neng Hwang

Auto-TLDR; 3D Human Pose Estimation for Multi-Human Videos with Occlusion

Planar 3D Transfer Learning for End to End Unimodal MRI Unbalanced Data Segmentation

Martin Kolarik, Radim Burget, Carlos M. Travieso-Gonzalez, Jan Kocica

Auto-TLDR; Planar 3D Res-U-Net Network for Unbalanced 3D Image Segmentation using Fluid Attenuation Inversion Recovery

Real-Time Monocular Depth Estimation with Extremely Light-Weight Neural Network

Mian Jhong Chiu, Wei-Chen Chiu, Hua-Tsung Chen, Jen-Hui Chuang

Auto-TLDR; Real-Time Light-Weight Depth Prediction for Obstacle Avoidance and Environment Sensing with Deep Learning-based CNN

Location Prediction in Real Homes of Older Adults based on K-Means in Low-Resolution Depth Videos

Simon Simonsson, Flávia Dias Casagrande, Evi Zouganeli

Auto-TLDR; Semi-supervised Learning for Location Recognition and Prediction in Smart Homes using Depth Video Cameras

A Two-Step Approach to Lidar-Camera Calibration

Yingna Su, Yaqing Ding, Jian Yang, Hui Kong

Auto-TLDR; Closed-Form Calibration of Lidar-camera System for Ego-motion Estimation and Scene Understanding

RefiNet: 3D Human Pose Refinement with Depth Maps

Andrea D'Eusanio, Stefano Pini, Guido Borghi, Roberto Vezzani, Rita Cucchiara

Auto-TLDR; RefiNet: A Multi-stage Framework for 3D Human Pose Estimation

Benchmarking Cameras for OpenVSLAM Indoors

Kevin Chappellet, Guillaume Caron, Fumio Kanehiro, Ken Sakurada, Abderrahmane Kheddar

Auto-TLDR; OpenVSLAM: Benchmarking Camera Types for Visual Simultaneous Localization and Mapping

AerialMPTNet: Multi-Pedestrian Tracking in Aerial Imagery Using Temporal and Graphical Features

Maximilian Kraus, Seyed Majid Azimi, Emec Ercelik, Reza Bahmanyar, Peter Reinartz, Alois Knoll

Auto-TLDR; AerialMPTNet: A novel approach for multi-pedestrian tracking in geo-referenced aerial imagery by fusing appearance features

User-Independent Gaze Estimation by Extracting Pupil Parameter and Its Mapping to the Gaze Angle

Auto-TLDR; Gaze Point Estimation using Pupil Shape for Generalization

Map-Based Temporally Consistent Geolocalization through Learning Motion Trajectories

Auto-TLDR; Exploiting Motion Trajectories for Geolocalization of Object on Topological Map using Recurrent Neural Network

Sensor-Independent Pedestrian Detection for Personal Mobility Vehicles in Walking Space Using Dataset Generated by Simulation

Takahiro Shimizu, Kenji Koide, Shuji Oishi, Masashi Yokozuka, Atsuhiko Banno, Motoki Shino

Auto-TLDR; CosPointPillars: A 3D Object Detection Method for Pedestrian Detection in Walking Spaces

Uncertainty-Sensitive Activity Recognition: A Reliability Benchmark and the CARING Models

Alina Roitberg, Monica Haurilet, Manuel Martinez, Rainer Stiefelhagen

Auto-TLDR; CARING: Calibrated Action Recognition with Input Guidance

Spatial Bias in Vision-Based Voice Activity Detection

Kalin Stefanov, Mohammad Adiban, Giampiero Salvi

Auto-TLDR; Spatial Bias in Vision-based Voice Activity Detection in Multiparty Human-Human Interactions

Removing Raindrops from a Single Image Using Synthetic Data

Yoshihito Kokubo, Shusaku Asada, Hirotaka Maruyama, Masaru Koide, Kohei Yamamoto, Yoshihisa Suetsugu

Auto-TLDR; Raindrop Removal Using Synthetic Raindrop Data
