Localization of Unmanned Aerial Vehicles in Corridor Environments Using Deep Learning

Ram Padhy, Shahzad Ahmad, Sachin Verma, Sambit Bakshi, Pankaj Kumar Sa

Auto-TLDR; A monocular vision assisted localization algorithm for indoor corridor environments

Similar papers

Holistic Grid Fusion Based Stop Line Estimation

Runsheng Xu, Faezeh Tafazzoli, Li Zhang, Timo Rehfeld, Gunther Krehl, Arunava Seal

Auto-TLDR; Fused Multi-Sensory Data for Stop Lines Detection in Intersection Scenarios

Unconstrained Vision Guided UAV Based Safe Helicopter Landing

Arindam Sikdar, Abhimanyu Sahu, Debajit Sen, Rohit Mahajan, Ananda Chowdhury

Auto-TLDR; Autonomous Helicopter Landing in Hazardous Environments from Unmanned Aerial Images Using Constrained Graph Clustering

RISEdb: A Novel Indoor Localization Dataset

Carlos Sanchez Belenguer, Erik Wolfart, Álvaro Casado Coscollá, Vitor Sequeira

Auto-TLDR; Indoor Localization Using LiDAR SLAM and Smartphones: A Benchmarking Dataset

Benchmarking Cameras for OpenVSLAM Indoors

Kevin Chappellet, Guillaume Caron, Fumio Kanehiro, Ken Sakurada, Abderrahmane Kheddar

Auto-TLDR; OpenVSLAM: Benchmarking Camera Types for Visual Simultaneous Localization and Mapping

Extending Single Beam Lidar to Full Resolution by Fusing with Single Image Depth Estimation

Yawen Lu, Yuxing Wang, Devarth Parikh, Guoyu Lu

Auto-TLDR; Self-supervised LIDAR for Low-Cost Depth Estimation

Towards life-long mapping of dynamic environments using temporal persistence modeling

Georgios Tsamis, Ioannis Kostavelis, Dimitrios Giakoumis, Dimitrios Tzovaras

Auto-TLDR; Lifelong Mapping for Mobile Robot Navigation in Dynamic Environments

Map-Based Temporally Consistent Geolocalization through Learning Motion Trajectories

Auto-TLDR; Exploiting Motion Trajectories for Geolocalization of Object on Topological Map using Recurrent Neural Network

AV-SLAM: Autonomous Vehicle SLAM with Gravity Direction Initialization

Kaan Yilmaz, Baris Suslu, Sohini Roychowdhury, L. Srikar Muppirisetty

Auto-TLDR; VI-SLAM with AGI: A combination of three SLAM algorithms for autonomous vehicles

Object-Oriented Map Exploration and Construction Based on Auxiliary Task Aided DRL

Junzhe Xu, Jianhua Zhang, Shengyong Chen, Honghai Liu

Auto-TLDR; Auxiliary Task Aided Deep Reinforcement Learning for Environment Exploration by Autonomous Robots

P2D: A Self-Supervised Method for Depth Estimation from Polarimetry

Marc Blanchon, Desire Sidibe, Olivier Morel, Ralph Seulin, Daniel Braun, Fabrice Meriaudeau

Auto-TLDR; Polarimetric Regularization for Monocular Depth Estimation

Real-Time End-To-End Lane ID Estimation Using Recurrent Networks

Ibrahim Halfaoui, Fahd Bouzaraa, Onay Urfalioglu

Auto-TLDR; Real-Time, Vision-Only Lane Identification Using Monocular Camera

Loop-closure detection by LiDAR scan re-identification

Jukka Peltomäki, Xingyang Ni, Jussi Puura, Joni-Kristian Kamarainen, Heikki Juhani Huttunen

Auto-TLDR; Loop-Closing Detection from LiDAR Scans Using Convolutional Neural Networks

Surface Material Dataset for Robotics Applications (SMDRA): A Dataset with Friction Coefficient and RGB-D for Surface Segmentation

Donghun Noh, Hyunwoo Nam, Min Sung Ahn, Hosik Chae, Sangjoon Lee, Kyle Gillespie, Dennis Hong

Auto-TLDR; A Surface Material Dataset for Robotics Applications

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

Dynamic Resource-Aware Corner Detection for Bio-Inspired Vision Sensors

Sherif Abdelmonem Sayed Mohamed, Jawad Yasin, Mohammad-Hashem Haghbayan, Antonio Miele, Jukka Veikko Heikkonen, Hannu Tenhunen, Juha Plosila

Auto-TLDR; Three Layer Filtering-Harris Algorithm for Event-based Cameras in Real-Time

Polarimetric Image Augmentation

Marc Blanchon, Fabrice Meriaudeau, Olivier Morel, Ralph Seulin, Desire Sidibe

Auto-TLDR; Polarimetric Augmentation for Deep Learning in Robotics Applications

Minimal Solvers for Indoor UAV Positioning

Marcus Valtonen Örnhag, Patrik Persson, Mårten Wadenbäck, Kalle Åström, Anders Heyden

Auto-TLDR; Relative Pose Solvers for Visual Indoor UAV Navigation

OmniFlowNet: A Perspective Neural Network Adaptation for Optical Flow Estimation in Omnidirectional Images

Charles-Olivier Artizzu, Haozhou Zhang, Guillaume Allibert, Cédric Demonceaux

Auto-TLDR; OmniFlowNet: A Convolutional Neural Network for Omnidirectional Optical Flow Estimation

On Embodied Visual Navigation in Real Environments through Habitat

Marco Rosano, Antonino Furnari, Luigi Gulino, Giovanni Maria Farinella

Auto-TLDR; Learning Navigation Policies on Real World Observations using Real World Images and Sensor and Actuation Noise

NetCalib: A Novel Approach for LiDAR-Camera Auto-Calibration Based on Deep Learning

Shan Wu, Amnir Hadachi, Damien Vivet, Yadu Prabhakar

Auto-TLDR; Automatic Calibration of LiDAR and Cameras using Deep Neural Network

Improving Robotic Grasping on Monocular Images Via Multi-Task Learning and Positional Loss

William Prew, Toby Breckon, Magnus Bordewich, Ulrik Beierholm

Auto-TLDR; Improving grasping performance from monocular colour images in an end-to-end CNN architecture with multi-task learning

Real-Time Monocular Depth Estimation with Extremely Light-Weight Neural Network

Mian Jhong Chiu, Wei-Chen Chiu, Hua-Tsung Chen, Jen-Hui Chuang

Auto-TLDR; Real-Time Light-Weight Depth Prediction for Obstacle Avoidance and Environment Sensing with Deep Learning-based CNN

Multiple Future Prediction Leveraging Synthetic Trajectories

Lorenzo Berlincioni, Federico Becattini, Lorenzo Seidenari, Alberto Del Bimbo

Auto-TLDR; Synthetic Trajectory Prediction using Markov Chains

Real-Time Drone Detection and Tracking with Visible, Thermal and Acoustic Sensors

Fredrik Svanström, Cristofer Englund, Fernando Alonso-Fernandez

Auto-TLDR; Automatic multi-sensor drone detection using sensor fusion

Multimodal End-To-End Learning for Autonomous Steering in Adverse Road and Weather Conditions

Jyri Sakari Maanpää, Josef Taher, Petri Manninen, Leo Pakola, Iaroslav Melekhov, Juha Hyyppä

Auto-TLDR; End-to-End Learning for Autonomous Steering in Adverse Road and Weather Conditions with Lidar Data

Vehicle Lane Merge Visual Benchmark

Auto-TLDR; A Benchmark for Automated Cooperative Maneuvering Using Multi-view Video Streams and Ground Truth Vehicle Description

Calibration and Absolute Pose Estimation of Trinocular Linear Camera Array for Smart City Applications

Martin Ahrnbom, Mikael Nilsson, Håkan Ardö, Kalle Åström, Oksana Yastremska-Kravchenko, Aliaksei Laureshyn

Auto-TLDR; Trinocular Linear Camera Array Calibration for Traffic Surveillance Applications

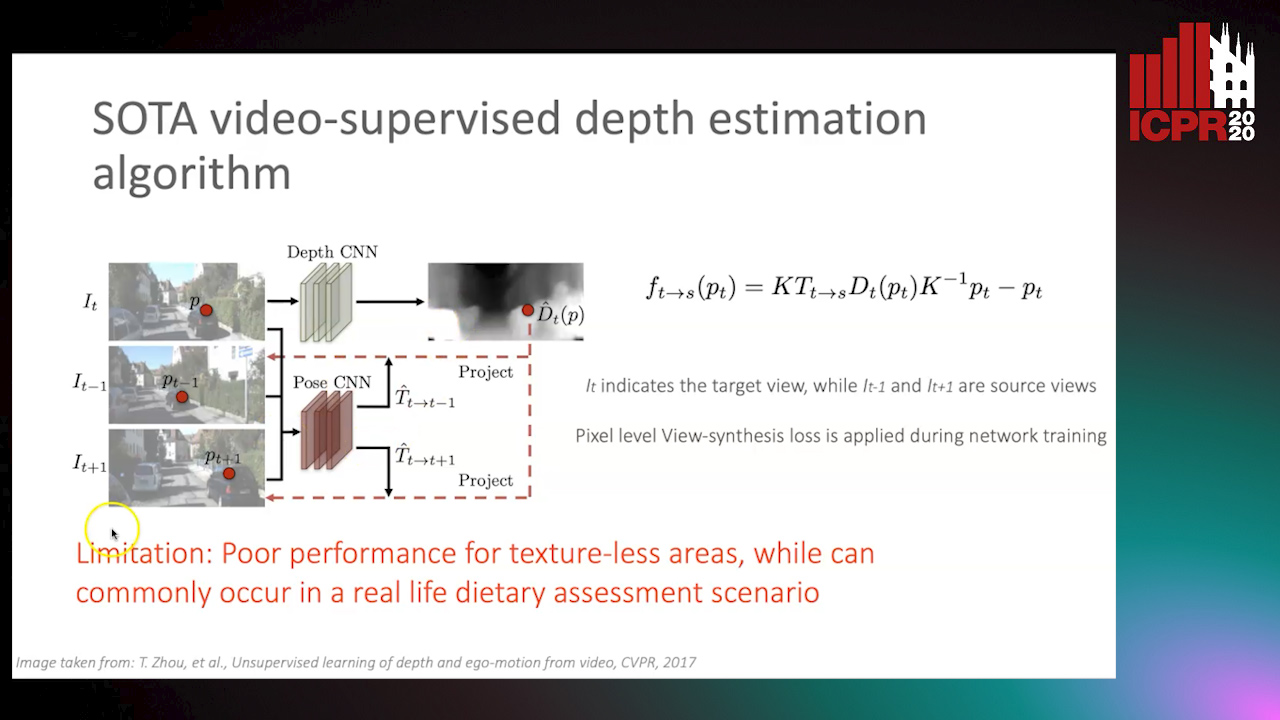

Partially Supervised Multi-Task Network for Single-View Dietary Assessment

Ya Lu, Thomai Stathopoulou, Stavroula Mougiakakou

Auto-TLDR; Food Volume Estimation from a Single Food Image via Geometric Understanding and Semantic Prediction

Derivation of Geometrically and Semantically Annotated UAV Datasets at Large Scales from 3D City Models

Sidi Wu, Lukas Liebel, Marco Körner

Auto-TLDR; Large-Scale Dataset of Synthetic UAV Imagery for Geometric and Semantic Annotation

Deep Next-Best-View Planner for Cross-Season Visual Route Classification

Auto-TLDR; Active Visual Place Recognition using Deep Convolutional Neural Network

Movement-Induced Priors for Deep Stereo

Yuxin Hou, Muhammad Kamran Janjua, Juho Kannala, Arno Solin

Auto-TLDR; Fusing Stereo Disparity Estimation with Movement-induced Prior Information

Generic Merging of Structure from Motion Maps with a Low Memory Footprint

Gabrielle Flood, David Gillsjö, Patrik Persson, Anders Heyden, Kalle Åström

Auto-TLDR; A Low-Memory Footprint Representation for Robust Map Merge

Visual Localization for Autonomous Driving: Mapping the Accurate Location in the City Maze

Dongfang Liu, Yiming Cui, Xiaolei Guo, Wei Ding, Baijian Yang, Yingjie Chen

Auto-TLDR; Feature Voting for Robust Visual Localization in Urban Settings

Learning to Segment Dynamic Objects Using SLAM Outliers

Dupont Romain, Mohamed Tamaazousti, Hervé Le Borgne

Auto-TLDR; Automatic Segmentation of Dynamic Objects from SLAM Outliers Using Consensus Inversion

Anomaly Detection, Localization and Classification for Railway Inspection

Riccardo Gasparini, Andrea D'Eusanio, Guido Borghi, Stefano Pini, Giuseppe Scaglione, Simone Calderara, Eugenio Fedeli, Rita Cucchiara

Auto-TLDR; Anomaly Detection and Localization Using Thermal Images in Low-Light Environments

Early Wildfire Smoke Detection in Videos

Taanya Gupta, Hengyue Liu, Bir Bhanu

Auto-TLDR; Semi-supervised Spatio-Temporal Video Object Segmentation for Automatic Detection of Smoke in Videos during Forest Fire

A Two-Step Approach to Lidar-Camera Calibration

Yingna Su, Yaqing Ding, Jian Yang, Hui Kong

Auto-TLDR; Closed-Form Calibration of Lidar-camera System for Ego-motion Estimation and Scene Understanding

Object Segmentation Tracking from Generic Video Cues

Amirhossein Kardoost, Sabine Müller, Joachim Weickert, Margret Keuper

Auto-TLDR; A Light-Weight Variational Framework for Video Object Segmentation in Videos

SAILenv: Learning in Virtual Visual Environments Made Simple

Enrico Meloni, Luca Pasqualini, Matteo Tiezzi, Marco Gori, Stefano Melacci

Auto-TLDR; SAILenv: A Simple and Customized Platform for Visual Recognition in Virtual 3D Environment

Wireless Localisation in WiFi Using Novel Deep Architectures

Peizheng Li, Han Cui, Aftab Khan, Usman Raza, Robert Piechocki, Angela Doufexi, Tim Farnham

Auto-TLDR; Deep Neural Network for Localisation of WiFi Devices in Indoor Environments

Attention Based Coupled Framework for Road and Pothole Segmentation

Shaik Masihullah, Ritu Garg, Prerana Mukherjee, Anupama Ray

Auto-TLDR; Few Shot Learning for Road and Pothole Segmentation on KITTI and IDD

Two-Stage Adaptive Object Scene Flow Using Hybrid CNN-CRF Model

Congcong Li, Haoyu Ma, Qingmin Liao

Auto-TLDR; Adaptive object scene flow estimation using a hybrid CNN-CRF model and adaptive iteration

Distortion-Adaptive Grape Bunch Counting for Omnidirectional Images

Ryota Akai, Yuzuko Utsumi, Yuka Miwa, Masakazu Iwamura, Koichi Kise

Auto-TLDR; Object Counting for Omnidirectional Images Using Stereographic Projection

PA-FlowNet: Pose-Auxiliary Optical Flow Network for Spacecraft Relative Pose Estimation

Zhi Yu Chen, Po-Heng Chen, Kuan-Wen Chen, Chen-Yu Chan

Auto-TLDR; PA-FlowNet: An End-to-End Pose-auxiliary Optical Flow Network for Space Travel and Landing

Edge-Aware Monocular Dense Depth Estimation with Morphology

Zhi Li, Xiaoyang Zhu, Haitao Yu, Qi Zhang, Yongshi Jiang

Auto-TLDR; Spatio-Temporally Smooth Dense Depth Maps Using Only a CPU

Single-Modal Incremental Terrain Clustering from Self-Supervised Audio-Visual Feature Learning

Reina Ishikawa, Ryo Hachiuma, Akiyoshi Kurobe, Hideo Saito

Auto-TLDR; Multi-modal Variational Autoencoder for Terrain Type Clustering

User-Independent Gaze Estimation by Extracting Pupil Parameter and Its Mapping to the Gaze Angle

Auto-TLDR; Gaze Point Estimation using Pupil Shape for Generalization

Position-Aware and Symmetry Enhanced GAN for Radial Distortion Correction

Yongjie Shi, Xin Tong, Jingsi Wen, He Zhao, Xianghua Ying, Jinshi Hongbin Zha

Auto-TLDR; Generative Adversarial Network for Radial Distorted Image Correction
