From Certain to Uncertain: Toward Optimal Solution for Offline Multiple Object Tracking

Kaikai Zhao, Takashi Imaseki, Hiroshi Mouri, Einoshin Suzuki, Tetsu Matsukawa

Auto-TLDR; Agglomerative Hierarchical Clustering with Ensemble of Tracking Experts for Object Tracking

Similar papers

Compact and Discriminative Multi-Object Tracking with Siamese CNNs

Claire Labit-Bonis, Jérôme Thomas, Frederic Lerasle

Auto-TLDR; Fast, Light-Weight and All-in-One Single Object Tracking for Multi-Target Management

SynDHN: Multi-Object Fish Tracker Trained on Synthetic Underwater Videos

Mygel Andrei Martija, Prospero Naval

Auto-TLDR; Underwater Multi-Object Tracking in the Wild with Deep Hungarian Network

IPT: A Dataset for Identity Preserved Tracking in Closed Domains

Thomas Heitzinger, Martin Kampel

Auto-TLDR; Identity Preserved Tracking Using Depth Data for Privacy

An Adaptive Fusion Model Based on Kalman Filtering and LSTM for Fast Tracking of Road Signs

Chengliang Wang, Xin Xie, Chao Liao

Auto-TLDR; Fusion of ThunderNet and Region Growing Detector for Road Sign Detection and Tracking

Automated Whiteboard Lecture Video Summarization by Content Region Detection and Representation

Bhargava Urala Kota, Alexander Stone, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; A Framework for Summarizing Whiteboard Lecture Videos Using Feature Representations of Handwritten Content Regions

Model Decay in Long-Term Tracking

Efstratios Gavves, Ran Tao, Deepak Gupta, Arnold Smeulders

Auto-TLDR; Model Bias in Long-Term Tracking

Story Comparison for Estimating Field of View Overlap in a Video Collection

Thierry Malon, Sylvie Chambon, Alain Crouzil, Vincent Charvillat

Auto-TLDR; Finding Videos with Overlapping Fields of View Using Video Data

Vehicle Lane Merge Visual Benchmark

Auto-TLDR; A Benchmark for Automated Cooperative Maneuvering Using Multi-view Video Streams and Ground Truth Vehicle Description

Graph-Based Image Decoding for Multiplexed in Situ RNA Detection

Gabriele Partel, Carolina Wahlby

Auto-TLDR; A Graph-based Decoding Approach for Multiplexed In situ RNA Detection

Uncertainty Guided Recognition of Tiny Craters on the Moon

Thorsten Wilhelm, Christian Wöhler

Auto-TLDR; Accurately Detecting Tiny Craters in Remote Sensed Images Using Deep Neural Networks

AerialMPTNet: Multi-Pedestrian Tracking in Aerial Imagery Using Temporal and Graphical Features

Maximilian Kraus, Seyed Majid Azimi, Emec Ercelik, Reza Bahmanyar, Peter Reinartz, Alois Knoll

Auto-TLDR; AerialMPTNet: A novel approach for multi-pedestrian tracking in geo-referenced aerial imagery by fusing appearance features

FeatureNMS: Non-Maximum Suppression by Learning Feature Embeddings

Auto-TLDR; FeatureNMS: Non-Maximum Suppression for Multiple Object Detection

Correlation-Based ConvNet for Small Object Detection in Videos

Brais Bosquet, Manuel Mucientes, Victor Brea

Auto-TLDR; STDnet-ST: An End-to-End Spatio-Temporal Convolutional Neural Network for Small Object Detection in Video

Weakly Supervised Geodesic Segmentation of Egyptian Mummy CT Scans

Avik Hati, Matteo Bustreo, Diego Sona, Vittorio Murino, Alessio Del Bue

Auto-TLDR; A Weakly Supervised and Efficient Interactive Segmentation of Ancient Egyptian Mummies CT Scans Using Geodesic Distance Measure and GrabCut

Self-Paced Bottom-Up Clustering Network with Side Information for Person Re-Identification

Mingkun Li, Chun-Guang Li, Ruo-Pei Guo, Jun Guo

Auto-TLDR; Self-Paced Bottom-up Clustering Network with Side Information for Unsupervised Person Re-identification

SiamMT: Real-Time Arbitrary Multi-Object Tracking

Lorenzo Vaquero, Manuel Mucientes, Victor Brea

Auto-TLDR; SiamMT: A Deep-Learning-based Arbitrary Multi-Object Tracking System for Video

ClusterFace: Joint Clustering and Classification for Set-Based Face Recognition

Samadhi Poornima Kumarasinghe Wickrama Arachchilage, Ebroul Izquierdo

Auto-TLDR; Joint Clustering and Classification for Face Recognition in the Wild

Generic Merging of Structure from Motion Maps with a Low Memory Footprint

Gabrielle Flood, David Gillsjö, Patrik Persson, Anders Heyden, Kalle Åström

Auto-TLDR; A Low-Memory Footprint Representation for Robust Map Merge

Robust Visual Object Tracking with Two-Stream Residual Convolutional Networks

Ning Zhang, Jingen Liu, Ke Wang, Dan Zeng, Tao Mei

Auto-TLDR; Two-Stream Residual Convolutional Network for Visual Tracking

Utilising Visual Attention Cues for Vehicle Detection and Tracking

Feiyan Hu, Venkatesh Gurram Munirathnam, Noel E O'Connor, Alan Smeaton, Suzanne Little

Auto-TLDR; Visual Attention for Object Detection and Tracking in Driver-Assistance Systems

Online Object Recognition Using CNN-Based Algorithm on High-Speed Camera Imaging

Shigeaki Namiki, Keiko Yokoyama, Shoji Yachida, Takashi Shibata, Hiroyoshi Miyano, Masatoshi Ishikawa

Auto-TLDR; Real-Time Object Recognition with High-Speed Camera Imaging with Population Data Clearing and Data Ensemble

Open-World Group Retrieval with Ambiguity Removal: A Benchmark

Ling Mei, Jian-Huang Lai, Zhanxiang Feng, Xiaohua Xie

Auto-TLDR; P2GSM-AR: Re-identifying changing groups of people under the open-world and group-ambiguity scenarios

Multi-View Object Detection Using Epipolar Constraints within Cluttered X-Ray Security Imagery

Brian Kostadinov Shalon Isaac-Medina, Chris G. Willcocks, Toby Breckon

Auto-TLDR; Exploiting Epipolar Constraints for Multi-View Object Detection in X-ray Security Images

Tracking Fast Moving Objects by Segmentation Network

Auto-TLDR; Fast Moving Objects Tracking by Segmentation Using Deep Learning

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

Reducing False Positives in Object Tracking with Siamese Network

Takuya Ogawa, Takashi Shibata, Shoji Yachida, Toshinori Hosoi

Auto-TLDR; Robust Long-Term Object Tracking with Adaptive Search based on Motion Models

Convolutional Feature Transfer via Camera-Specific Discriminative Pooling for Person Re-Identification

Tetsu Matsukawa, Einoshin Suzuki

Auto-TLDR; A small-scale CNN feature transfer method for person re-identification

Siamese Fully Convolutional Tracker with Motion Correction

Mathew Francis, Prithwijit Guha

Auto-TLDR; A Siamese Ensemble for Visual Tracking with Appearance and Motion Components

3D Pots Configuration System by Optimizing Over Geometric Constraints

Jae Eun Kim, Muhammad Zeeshan Arshad, Seong Jong Yoo, Je Hyeong Hong, Jinwook Kim, Young Min Kim

Auto-TLDR; Optimizing 3D Configurations for Stable Pottery Restoration from irregular and noisy evidence

DAL: A Deep Depth-Aware Long-Term Tracker

Yanlin Qian, Song Yan, Alan Lukežič, Matej Kristan, Joni-Kristian Kamarainen, Jiri Matas

Auto-TLDR; Deep Depth-Aware Long-Term RGBD Tracking with Deep Discriminative Correlation Filter

VTT: Long-Term Visual Tracking with Transformers

Tianling Bian, Yang Hua, Tao Song, Zhengui Xue, Ruhui Ma, Neil Robertson, Haibing Guan

Auto-TLDR; Visual Tracking Transformer for Long-Term Visual Tracking

One Step Clustering Based on A-Contrario Framework for Detection of Alterations in Historical Violins

Alireza Rezaei, Sylvie Le Hégarat-Mascle, Emanuel Aldea, Piercarlo Dondi, Marco Malagodi

Auto-TLDR; A-Contrario Clustering for the Detection of Altered Violins using UVIFL Images

Visual Object Tracking in Drone Images with Deep Reinforcement Learning

Auto-TLDR; A Deep Reinforcement Learning based Single Object Tracker for Drone Applications

An Empirical Analysis of Visual Features for Multiple Object Tracking in Urban Scenes

Mehdi Miah, Justine Pepin, Nicolas Saunier, Guillaume-Alexandre Bilodeau

Auto-TLDR; Evaluating Appearance Features for Multiple Object Tracking in Urban Scenes

A Novel Random Forest Dissimilarity Measure for Multi-View Learning

Hongliu Cao, Simon Bernard, Robert Sabourin, Laurent Heutte

Auto-TLDR; Multi-view Learning with Random Forest Relation Measure and Instance Hardness

Not 3D Re-ID: Simple Single Stream 2D Convolution for Robust Video Re-Identification

Auto-TLDR; ResNet50-IBN for Video-based Person Re-Identification using Single Stream 2D Convolution Network

RSINet: Rotation-Scale Invariant Network for Online Visual Tracking

Yang Fang, Geunsik Jo, Chang-Hee Lee

Auto-TLDR; RSINet: Rotation-Scale Invariant Network for Adaptive Tracking

Anime Sketch Colorization by Component-Based Matching Using Deep Appearance Features and Graph Representation

Thien Do, Pham Van, Anh Nguyen, Trung Dang, Quoc Nguyen, Bach Hoang, Giao Nguyen

Auto-TLDR; Combining Deep Learning and Graph Representation for Sketch Colorization

SSDL: Self-Supervised Domain Learning for Improved Face Recognition

Samadhi Poornima Kumarasinghe Wickrama Arachchilage, Ebroul Izquierdo

Auto-TLDR; Self-supervised Domain Learning for Face Recognition in unconstrained environments

How to Define a Rejection Class Based on Model Learning?

Sarah Laroui, Xavier Descombes, Aurelia Vernay, Florent Villiers, Francois Villalba, Eric Debreuve

Auto-TLDR; An innovative learning strategy for supervised classification that is able, by design, to reject a sample as not belonging to any of the known classes

ACRM: Attention Cascade R-CNN with Mix-NMS for Metallic Surface Defect Detection

Junting Fang, Xiaoyang Tan, Yuhui Wang

Auto-TLDR; Attention Cascade R-CNN with Mix Non-Maximum Suppression for Robust Metal Defect Detection

Gabriella: An Online System for Real-Time Activity Detection in Untrimmed Security Videos

Mamshad Nayeem Rizve, Ugur Demir, Praveen Praveen Tirupattur, Aayush Jung Rana, Kevin Duarte, Ishan Rajendrakumar Dave, Yogesh Rawat, Mubarak Shah

Auto-TLDR; Gabriella: A Real-Time Online System for Activity Detection in Surveillance Videos

An Adaptive Video-To-Video Face Identification System Based on Self-Training

Eric Lopez-Lopez, Carlos V. Regueiro, Xosé M. Pardo

Auto-TLDR; Adaptive Video-to-Video Face Recognition using Dynamic Ensembles of SVMs

Learning Defects in Old Movies from Manually Assisted Restoration

Arthur Renaudeau, Travis Seng, Axel Carlier, Jean-Denis Durou, Fabien Pierre, Francois Lauze, Jean-François Aujol

Auto-TLDR; U-Net: Detecting Defects in Old Movies by Inpainting Techniques

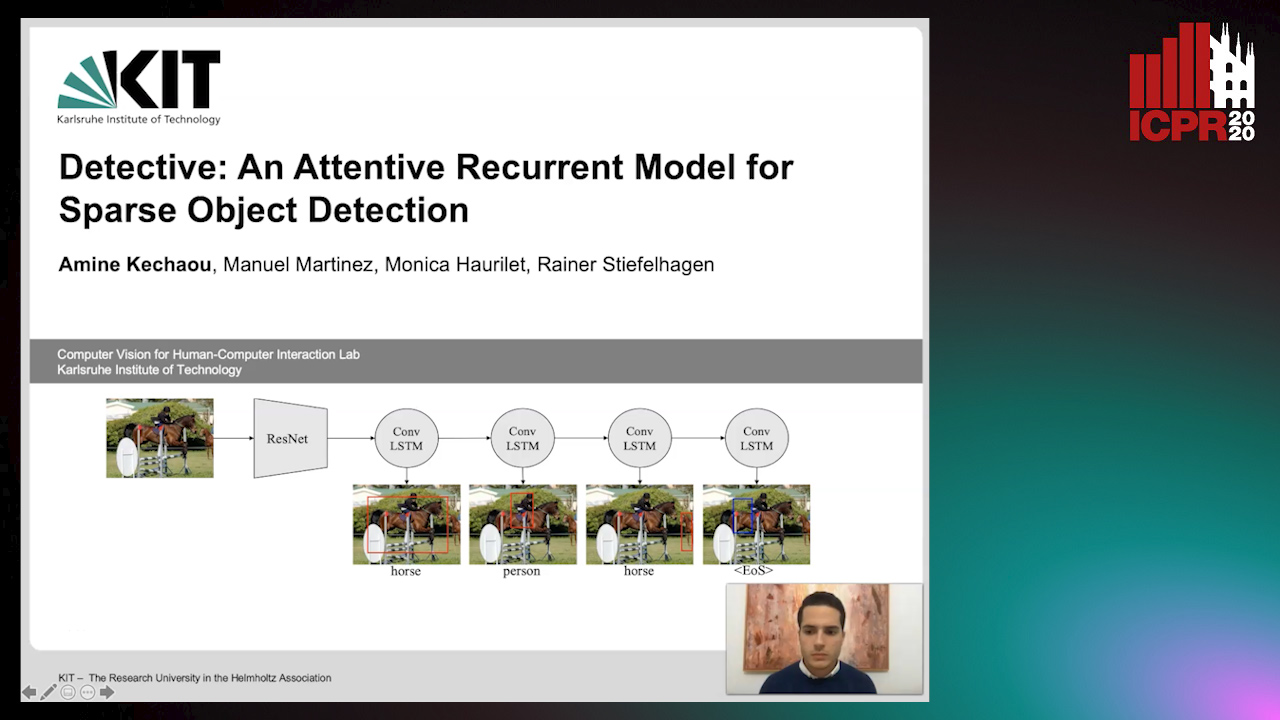

Detective: An Attentive Recurrent Model for Sparse Object Detection

Amine Kechaou, Manuel Martinez, Monica Haurilet, Rainer Stiefelhagen

Auto-TLDR; Detective: An attentive object detector that identifies objects in images in a sequential manner

A New Geodesic-Based Feature for Characterization of 3D Shapes: Application to Soft Tissue Organ Temporal Deformations

Karim Makki, Amine Bohi, Augustin Ogier, Marc-Emmanuel Bellemare

Auto-TLDR; Spatio-Temporal Feature Descriptors for 3D Shape Characterization from Point Clouds

Better Prior Knowledge Improves Human-Pose-Based Extrinsic Camera Calibration

Olivier Moliner, Sangxia Huang, Kalle Åström

Auto-TLDR; Improving Human-pose-based Extrinsic Calibration for Multi-Camera Systems

Tackling Occlusion in Siamese Tracking with Structured Dropouts

Deepak Gupta, Efstratios Gavves, Arnold Smeulders

Auto-TLDR; Structured Dropout for Occlusion in latent space
