Graph Approximations to Geodesics on Metric Graphs

Robin Vandaele, Yvan Saeys, Tijl De Bie

Auto-TLDR; Topological Pattern Recognition of Metric Graphs Using Proximity Graphs

Similar papers

Probabilistic Word Embeddings in Kinematic Space

Adarsh Jamadandi, Rishabh Tigadoli, Ramesh Ashok Tabib, Uma Mudenagudi

Auto-TLDR; Kinematic Space for Hierarchical Representation Learning

Improved Time-Series Clustering with UMAP Dimension Reduction Method

Clément Pealat, Vincent Cheutet, Guillaume Bouleux

Auto-TLDR; Time Series Clustering with UMAP as a Pre-processing Step

A Hybrid Metric Based on Persistent Homology and Its Application to Signal Classification

Austin Lawson, Yu-Min Chung, William Cruse

Auto-TLDR; Topological Data Analysis with Persistence Curves

Interpolation in Auto Encoders with Bridge Processes

Carl Ringqvist, Henrik Hult, Judith Butepage, Hedvig Kjellstrom

Auto-TLDR; Stochastic interpolations from auto encoders trained on flattened sequences

On the Global Self-attention Mechanism for Graph Convolutional Networks

Auto-TLDR; Global Self-Attention Mechanism for Graph Convolutional Networks

Graph Signal Active Contours

Auto-TLDR; Adaptation of Active Contour Without Edges for Graph Signal Processing

A New Geodesic-Based Feature for Characterization of 3D Shapes: Application to Soft Tissue Organ Temporal Deformations

Karim Makki, Amine Bohi, Augustin Ogier, Marc-Emmanuel Bellemare

Auto-TLDR; Spatio-Temporal Feature Descriptors for 3D Shape Characterization from Point Clouds

Abstract Slides Poster Similar

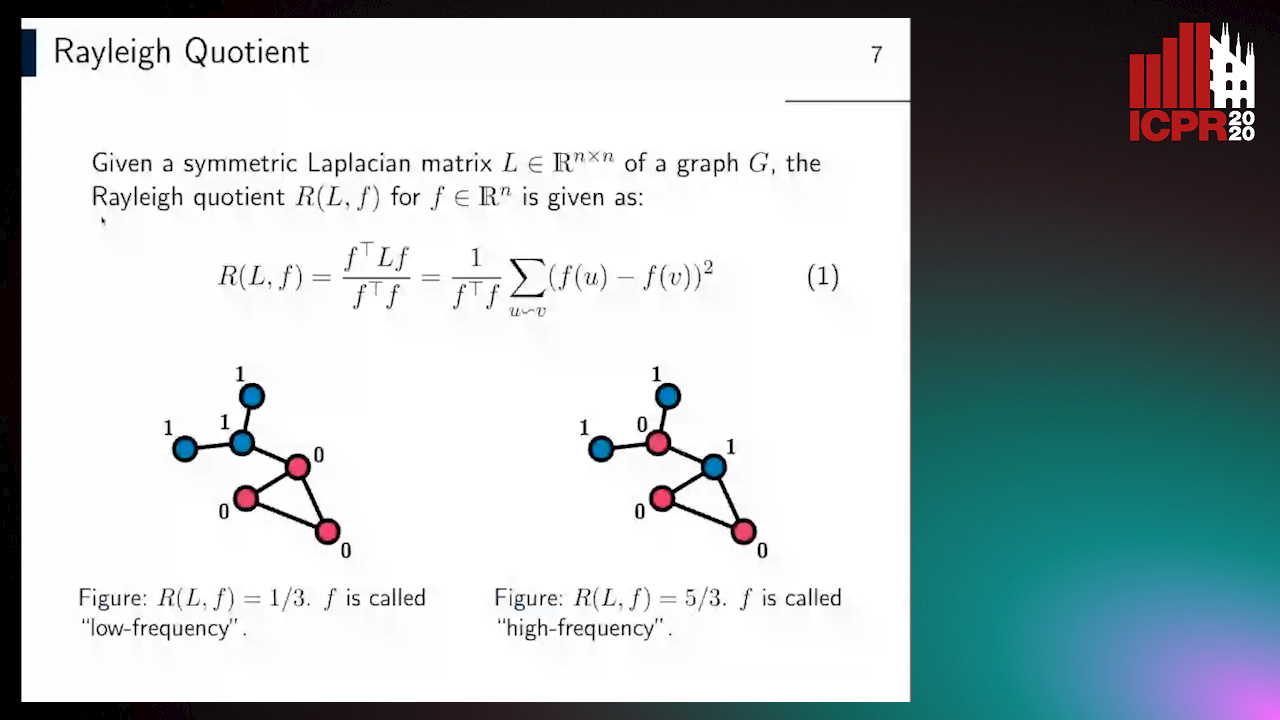

Revisiting Graph Neural Networks: Graph Filtering Perspective

Hoang Nguyen-Thai, Takanori Maehara, Tsuyoshi Murata

Auto-TLDR; Two-Layers Graph Convolutional Network with Graph Filters Neural Network

RNN Training along Locally Optimal Trajectories via Frank-Wolfe Algorithm

Yun Yue, Ming Li, Venkatesh Saligrama, Ziming Zhang

Auto-TLDR; Frank-Wolfe Algorithm for Efficient Training of RNNs

Auto Encoding Explanatory Examples with Stochastic Paths

Cesar Ali Ojeda Marin, Ramses J. Sanchez, Kostadin Cvejoski, Bogdan Georgiev

Auto-TLDR; Semantic Stochastic Path: Explaining a Classifier's Decision Making Process using latent codes

Map-Based Temporally Consistent Geolocalization through Learning Motion Trajectories

Auto-TLDR; Exploiting Motion Trajectories for Geolocalization of Object on Topological Map using Recurrent Neural Network

Generalized Conics: Properties and Applications

Aysylu Gabdulkhakova, Walter Kropatsch

Auto-TLDR; A Generalized Conic Representation for Distance Fields

N2D: (Not Too) Deep Clustering Via Clustering the Local Manifold of an Autoencoded Embedding

Ryan McConville, Raul Santos-Rodriguez, Robert Piechocki, Ian Craddock

Auto-TLDR; Local Manifold Learning for Deep Clustering on Autoencoded Embeddings

Uniform and Non-Uniform Sampling Methods for Sub-Linear Time K-Means Clustering

Auto-TLDR; Sub-linear Time Clustering with Constant Approximation Ratio for K-Means Problem

Supervised Feature Embedding for Classification by Learning Rank-Based Neighborhoods

Ghazaal Sheikhi, Hakan Altincay

Auto-TLDR; Supervised Feature Embedding with Representation Learning of Rank-based Neighborhoods

Equation Attention Relationship Network (EARN) : A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

A Novel Random Forest Dissimilarity Measure for Multi-View Learning

Hongliu Cao, Simon Bernard, Robert Sabourin, Laurent Heutte

Auto-TLDR; Multi-view Learning with Random Forest Relation Measure and Instance Hardness

A Randomized Algorithm for Sparse Recovery

Huiyuan Yu, Maggie Cheng, Yingdong Lu

Auto-TLDR; A Constrained Graph Optimization Algorithm for Sparse Signal Recovery

Cluster-Size Constrained Network Partitioning

Maksim Mironov, Konstantin Avrachenkov

Auto-TLDR; Unsupervised Graph Clustering with Stochastic Block Model

Generalized Shortest Path-Based Superpixels for Accurate Segmentation of Spherical Images

Rémi Giraud, Rodrigo Borba Pinheiro, Yannick Berthoumieu

Auto-TLDR; SPS: Spherical Shortest Path-based Superpixels

Double Manifolds Regularized Non-Negative Matrix Factorization for Data Representation

Jipeng Guo, Shuai Yin, Yanfeng Sun, Yongli Hu

Auto-TLDR; Double Manifolds Regularized Non-negative Matrix Factorization for Clustering

Sketch-Based Community Detection Via Representative Node Sampling

Mahlagha Sedghi, Andre Beckus, George Atia

Auto-TLDR; Sketch-based Clustering of Community Detection Using a Small Sketch

Aggregating Dependent Gaussian Experts in Local Approximation

Auto-TLDR; A novel approach for aggregating the Gaussian experts by detecting strong violations of conditional independence

Classification and Feature Selection Using a Primal-Dual Method and Projections on Structured Constraints

Michel Barlaud, Antonin Chambolle, Jean-Baptiste Caillau

Auto-TLDR; A Constrained Primal-dual Method for Structured Feature Selection on High Dimensional Data

Exact and Convergent Iterative Methods to Compute the Orthogonal Point-To-Ellipse Distance

Siyu Guo, Pingping Hu, Zhigang Ling, He Wen, Min Liu, Lu Tang

Auto-TLDR; Convergent iterative algorithm for orthogonal distance based ellipse fitting

Learning Sign-Constrained Support Vector Machines

Kenya Tajima, Kouhei Tsuchida, Esmeraldo Ronnie Rey Zara, Naoya Ohta, Tsuyoshi Kato

Auto-TLDR; Constrained Sign Constraints for Learning Linear Support Vector Machine

Kernel-based Graph Convolutional Networks

Auto-TLDR; Spatial Graph Convolutional Networks in Reproducing Kernel Hilbert Space

TreeRNN: Topology-Preserving Deep Graph Embedding and Learning

Yecheng Lyu, Ming Li, Xinming Huang, Ulkuhan Guler, Patrick Schaumont, Ziming Zhang

Auto-TLDR; TreeRNN: Recurrent Neural Network for General Graph Classification

Learning Connectivity with Graph Convolutional Networks

Auto-TLDR; Learning Graph Convolutional Networks Using Topological Properties of Graphs

Killing Four Birds with One Gaussian Process: The Relation between Different Test-Time Attacks

Kathrin Grosse, Michael Thomas Smith, Michael Backes

Auto-TLDR; Security of Gaussian Process Classifiers against Attack Algorithms

3D Facial Matching by Spiral Convolutional Metric Learning and a Biometric Fusion-Net of Demographic Properties

Soha Sadat Mahdi, Nele Nauwelaers, Philip Joris, Giorgos Bouritsas, Imperial London, Sergiy Bokhnyak, Susan Walsh, Mark Shriver, Michael Bronstein, Peter Claes

Auto-TLDR; Multi-biometric Fusion for Biometric Verification using 3D Facial Measures

Graph Discovery for Visual Test Generation

Neil Hallonquist, Laurent Younes, Donald Geman

Auto-TLDR; Visual Question Answering over Graphs: A Probabilistic Framework for VQA

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

Encoding Brain Networks through Geodesic Clustering of Functional Connectivity for Multiple Sclerosis Classification

Muhammad Abubakar Yamin, Paola Valsasina, Michael Dayan, Sebastiano Vascon, Jacopo Tessadori, Massimo Filippi, Vittorio Murino, Maria A. Rocca, Diego Sona

Auto-TLDR; Geodesic Clustering of Connectivity Matrices for Multiple Sclerosis Classification

Interactive Style Space of Deep Features and Style Innovation

Auto-TLDR; Interactive Style Space of Convolutional Neural Network Features

On Learning Random Forests for Random Forest Clustering

Manuele Bicego, Francisco Escolano

Auto-TLDR; Learning Random Forests for Clustering

Low Rank Representation on Product Grassmann Manifolds for Multi-view Subspace Clustering

Jipeng Guo, Yanfeng Sun, Junbin Gao, Yongli Hu, Baocai Yin

Auto-TLDR; Low Rank Representation on Product Grassmann Manifold for Multi-View Data Clustering

Q-SNE: Visualizing Data Using Q-Gaussian Distributed Stochastic Neighbor Embedding

Motoshi Abe, Junichi Miyao, Takio Kurita

Auto-TLDR; Q-Gaussian distributed stochastic neighbor embedding for 2-dimensional mapping and classification

Nearest Neighbor Classification Based on Activation Space of Convolutional Neural Network

Xinbo Ju, Shuo Shao, Huan Long, Weizhe Wang

Auto-TLDR; Convolutional Neural Network with Convex Hull Based Classifier

Matching of Matching-Graphs – a Novel Approach for Graph Classification

Auto-TLDR; Stable Graph Matching Information for Pattern Recognition

GraphBGS: Background Subtraction Via Recovery of Graph Signals

Jhony Heriberto Giraldo Zuluaga, Thierry Bouwmans

Auto-TLDR; Graph BackGround Subtraction using Graph Signals

Learning Stable Deep Predictive Coding Networks with Weight Norm Supervision

Auto-TLDR; Stability of Predictive Coding Network with Weight Norm Supervision

Locality-Promoting Representation Learning

Auto-TLDR; Locality-promoting Regularization for Neural Networks

Robust Skeletonization for Plant Root Structure Reconstruction from MRI

Auto-TLDR; Structural reconstruction of plant roots from MRI using semantic root vs shoot segmentation and 3D skeletonization

Learning Graph Matching Substitution Weights Based on a Linear Regression

Shaima Algabli, Francesc Serratosa

Auto-TLDR; Learning the weights on local attributes of attributed graphs

Low-Cost Lipschitz-Independent Adaptive Importance Sampling of Stochastic Gradients

Huikang Liu, Xiaolu Wang, Jiajin Li, Man-Cho Anthony So

Auto-TLDR; Adaptive Importance Sampling for Stochastic Gradient Descent

Generic Document Image Dewarping by Probabilistic Discretization of Vanishing Points

Gilles Simon, Salvatore Tabbone

Auto-TLDR; Robust Document Dewarping using vanishing points

On Morphological Hierarchies for Image Sequences

Caglayan Tuna, Alain Giros, François Merciol, Sébastien Lefèvre

Auto-TLDR; Comparison of Hierarchies for Image Sequences
